Jinjuan Feng, Subrata Acharya, Rhonda Greenhaw, Ziying Tang, Jin Guo, Sheng Miao, Claire Holmes

Department of Computer and Information Sciences, Hussman Center for Adults with Autism

Towson University, Towson MD

ABSTRACT

Individuals on the Autism spectrum often need a substantial number of prompts to help them learn new skills, maintain acquired skills, stay focused, and resolve sensory-based challenges. To this end, this research aims to develop an assistive application that provides scalable prompts based on contextual information such as location, time, user status, and user preference. A user-centered design approach is adopted to ensure that the design meets the special needs of the target population and their caregivers. An initial evaluation of a proof-of-concept prototype has been conducted, and the results are promising.

INTRODUCTION

Individuals on the Autism spectrum can face significant challenges in learning everyday skills. They may require significant time to acquire a skill to fluency and often have difficulty maintaining the skill. Another major challenge for some individuals with Autism Spectrum Disorder (ASD) is executive functioning skills such as time management. They may also have sensory-based challenges that can provide significant distraction or produce negative sensory experiences. During these periods, they may require significant levels of support for even basic activities. Therefore, many individuals with ASD need constant prompts and visual support to assist their skill development and acquisition, as well as skill maintenance and generalization. Some individuals may also need a continuous level of prompts to help them stay focused when completing even familiar tasks. Scalable support that provides information about the environment and guidance on current tasks could help individuals with ASD increase their independence.

These issues motivated us to develop a portable, context-aware assistive application that takes advantage of a variety of contextual information (e.g., location, time, personal schedule, user capability and preference) to provide intelligent support for individuals with ASD in their everyday life. The prompts could help users acquire, maintain, and generalize new skills. The prompts could also help users when they experience executive functioning, motor planning, and sensory processing challenges. In this paper, we report the design, implementation, and initial evaluation of a prototype of such an assistive application.

RELATED RESEARCH

Some individuals with ASD experience difficulty in learning everyday skills. They may require substantially longer time to acquire a skill to fluency, especially skills that involve complex, multi-step tasks (Jensen and Spannagel, 2011). Once a skill has been acquired, they often have difficulty maintaining the skill or generalizing it to other environments (Palmen and Didden, 2012). Therefore, they may need constant prompts and visual support to assist their skill development and acquisition, as well as skill maintenance and generalization.

Individuals with ASD can also have difficulty with executive functioning skills such as time management (Landa and Goldberg, 2005). These executive functioning challenges can also take the form of motor planning challenges, which make it more difficult for the individual to plan out how to move their body (Hughes, 1996). In those cases, they need immediately accessible supports that can help them move through the task and relieve them of motor planning (e.g., a step-by-step guide through the process). In addition, individuals with ASD may have sensory-based challenges that can be debilitating. During these periods, they may require significant levels of support for even basic activities (Jensen and Spannagel, 2011; Tseng et al., 2011).

Limited research has investigated the idea of using context information to support people with cognitive disabilities. Most of the published work is in the conceptual, exploratory stage without actual implementation or user involvement (e.g., Nehmer et al. 2006; Mileo et al. 2008). Mihailidis and Fernie (2002) described a context-aware device that used a camera to assist people with dementia in independent living tasks (e.g., using the bathroom). Chang et al. (2009) used location-based information to provide prompts to individuals with cognitive disabilities for catering tasks. Both studies explored only one type of context information. Bodine et al. (2011) and Melonis et al. (2012) examined a much broader range of context information (location and motion information collected through sensors, visual information collected through cameras) for the purpose of providing automated prompting for people with cognitive disabilities. However, their application focused only on workplace tasks, and the use of cameras raises privacy concerns in the independent living environment. None of the reported work has implemented or evaluated haptic interaction or customizable interfaces.

Thus, this research aims to develop a context-aware assistive application that helps individuals with ASD in their everyday life. We adopt a user-centered design and development approach, and individuals with ASD as well as their caregivers have been closely involved in every stage of the application development.

OBSERVATION SESSIONS

In order to fully understand the challenges that people with ASD and their caregivers experience in everyday tasks, we completed ten observation sessions of people with ASD conducting daily tasks in diverse domains. During all the sessions, a group of students with ASD were supervised and guided by their mentors. We initially focused on the following domains:

- food preparation and service in a food catering business in a life skill training class

- handling money in a life skill training class in a retail setting

- book and document sorting and organization in a library setting

In addition to the above observation sessions, we also interviewed approximately ten caregivers and mentors of people with ASD, and two experts in the area to identify the major challenges and the existing approaches to address those challenges.

SYSTEM REQUIREMENTS

Based on comprehensive information collected during the observation sessions and the interviews, we specified the following system requirements that the assistive application has to meet:

- The application has to be easy to learn and use,

- The cost of the application has to be affordable,

- The level of prompts supported should be easily scalable to adapt to users with varied capabilities,

- The application should provide multiple interaction channels to accommodate unique communication needs,

- The application should support easy and smooth interaction between users with ASD and their care providers,

- The application should provide a customizable interface to accommodate diverse user preferences and capabilities.

SYSTEM ARCHITECTURE

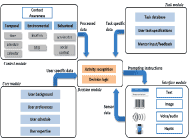

The application is developed on the Android platform. The system comprises five basic components, namely the context module, the task module, the user module, the interface module, and the decision module. The interaction among these modules contributes to the efficiency and precision of the application (Figure 1).

Context Module

The context module is responsible for collecting and utilizing context information to infer the user’s current state. This module supports the collection and query of temporal, environmental, and behavioral information and sends the data to the decision module to determine appropriate prompts. Mobile devices such as Android smart phones are used both as a tool to collect context information and as the prompting interface, since they are easy to carry and are equipped with built-in sensors and communication modes such as text-to-speech functionality and cameras. We employ temporal and environmental parameters to identify useful context information. For example, tri-axial wireless accelerometers can collect useful cues regarding user status and behaviors (e.g., seated or walking). Infrared sensors are used to track and identify location. Environmental sound is collected and analyzed to provide cues about both the physical and the social environment (e.g., a quiet home or a noisy room). In addition, information such as schedule and user preferences from the user module is also utilized as additional features for intelligent prompting.
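As a rough illustration of how such readings might be combined into a coarse user state, the following Python sketch labels motion from the variability of accelerometer magnitudes and labels the environment from an ambient sound level. The `ContextSnapshot` type, its field names, and both thresholds are hypothetical placeholders chosen for this example; they are not values taken from the prototype.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import pvariance
from typing import List, Tuple


@dataclass
class ContextSnapshot:
    """One window of context readings (all field names are illustrative)."""
    accel_samples: List[Tuple[float, float, float]]  # tri-axial readings, in g
    sound_level_db: float                            # ambient sound level
    location: str                                    # e.g. a room label from a location sensor
    hour_of_day: int                                 # temporal context


# Hypothetical thresholds; real values would have to be tuned on collected data.
MOTION_VARIANCE_THRESHOLD = 0.05
NOISE_THRESHOLD_DB = 60.0


def motion_state(samples):
    """Label a window as 'walking' or 'seated' from acceleration variability."""
    magnitudes = [sqrt(x * x + y * y + z * z) for x, y, z in samples]
    return "walking" if pvariance(magnitudes) > MOTION_VARIANCE_THRESHOLD else "seated"


def infer_state(snapshot):
    """Summarize a snapshot as the coarse cues passed on to the decision module."""
    return {
        "motion": motion_state(snapshot.accel_samples),
        "environment": "noisy" if snapshot.sound_level_db > NOISE_THRESHOLD_DB else "quiet",
        "location": snapshot.location,
        "hour": snapshot.hour_of_day,
    }
```

A simple threshold rule is used here only because it is easy to read; a deployed system would more plausibly learn these boundaries from labeled sensor data.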

Task Module

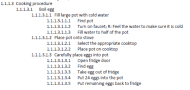

Every user task (e.g., cooking, getting dressed, doing laundry) is represented as a task module. The application database consists of numerous task modules for the user (mentee) to select from based on their current needs. The caregiver (mentor) can also pre-select a group of tasks according to the user's requirements for any given time period (day, week, month, etc.). The system gives both users and caregivers the flexibility to modify the task group based on the user's needs and proficiency in the tasks. Each task consists of a sequence of sub-tasks with different levels of granularity to accommodate users with different capabilities and preferences. Figure 2 shows a partial task definition for preparing egg salad with 4 levels of granularity. When preparing egg salad, high functioning users may only need higher level prompts such as boil egg, prepare green onion, etc. (as shown at the 2nd level in Figure 2), whereas low functioning users may need detailed prompts such as find a pot, turn on the faucet, etc. (as shown at the lowest level in Figure 2).
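The idea of one task definition serving several levels of granularity can be sketched as a tree that is cut at a chosen depth. The Python fragment below is illustrative only: `TaskNode` and `prompts_at_level` are hypothetical names, and the toy tree compresses the egg salad example into three levels rather than reproducing the four levels of Figure 2.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaskNode:
    """A task or sub-task; children refine it into more detailed steps."""
    label: str
    children: List["TaskNode"] = field(default_factory=list)


def prompts_at_level(node, level):
    """Return the prompts seen by a user working at the given granularity level.

    Level 1 is the task itself. A branch that has no finer steps contributes
    its deepest available node, so no part of the task is silently dropped.
    """
    if level <= 1 or not node.children:
        return [node.label]
    prompts = []
    for child in node.children:
        prompts.extend(prompts_at_level(child, level - 1))
    return prompts


# Toy fragment loosely based on the egg salad example; the tree shape is illustrative.
egg_salad = TaskNode("Prepare egg salad", [
    TaskNode("Boil egg", [
        TaskNode("Find a pot"),
        TaskNode("Turn on the faucet"),
    ]),
    TaskNode("Prepare green onion"),
])
```

Under these assumptions, `prompts_at_level(egg_salad, 2)` yields the coarse prompts ("Boil egg", "Prepare green onion"), while level 3 expands "Boil egg" into its detailed steps and keeps "Prepare green onion" as is.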

User Module

To achieve the goal of a highly customizable design, the system enables individuals with ASD and their caregivers to incorporate relevant user information in the design. The user information includes (but is not limited to) schedule, individual preferences (e.g., food, music), educational background, skills and capabilities (e.g., language, technology usage), past performance data (e.g., bottlenecks and difficulty for any specific task), and caregiver experience with regard to the user (e.g., notes and instructions developed by the mentor). Each real-time activity is logged and analyzed by the system, providing a feedback mechanism that fine-tunes the user module to address the user's requirements.
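One way to picture this feedback mechanism is a user record whose logged attempts feed back into the suggested prompt level. The sketch below is a simplified illustration under stated assumptions: the `UserProfile` fields, the three-attempt window, and the adjustment rule are all hypothetical and are not the rule used in the prototype.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class UserProfile:
    """Subset of the user information listed above (field names are illustrative)."""
    name: str
    prompt_level: int = 2                 # current granularity level, 1 = coarsest
    max_level: int = 4
    preferences: Dict[str, str] = field(default_factory=dict)
    history: Dict[str, List[bool]] = field(default_factory=dict)  # task -> needed-help flags

    def log_attempt(self, task, needed_help):
        """Record whether the user needed caregiver help on one attempt at a task."""
        self.history.setdefault(task, []).append(needed_help)

    def suggest_level(self, task, window=3):
        """Suggest a prompt level from the most recent attempts (hypothetical rule)."""
        recent = self.history.get(task, [])[-window:]
        if len(recent) < window:
            return self.prompt_level                           # not enough data yet
        if all(recent):
            return min(self.prompt_level + 1, self.max_level)  # struggling: more detail
        if not any(recent):
            return max(self.prompt_level - 1, 1)               # fluent: less detail
        return self.prompt_level
```

The method only suggests a level; leaving the final change to the caregiver or the user keeps the customization under their control.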

Interface Module

The application interface must address the diversity in the target population by offering various forms of feedback and a highly customizable interface. Because individuals with ASD have unique communication needs, the prompting information will be provided through multiple devices in various forms, namely text, audio, image, video, and haptic feedback. Users will be able to easily customize the interface to present the information in their preferred format (e.g., color mapping, font size, output) and to set the level of desired prompts (e.g., sequential step-by-step instructions or instructions only for complicated tasks). Caregivers will also be able to customize the interface to provide the type of support appropriate for each user.
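The relationship between a prompt and a user's interface settings can be sketched as a function that selects output actions for the enabled channels. In the Python fragment below, `Prompt`, `InterfacePrefs`, `render`, the channel names, and the action tuples are hypothetical and serve only to illustrate the multi-channel idea; video output is omitted for brevity.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class Prompt:
    text: str
    audio_file: Optional[str] = None
    image_file: Optional[str] = None


@dataclass
class InterfacePrefs:
    channels: Set[str]          # subset of {"text", "audio", "image", "haptic"}
    font_size: int = 18
    autoplay_audio: bool = False


def render(prompt, prefs):
    """Return the list of output actions for one prompt under the user's settings."""
    actions = []
    if "text" in prefs.channels:
        actions.append(("text", prompt.text, prefs.font_size))
    if "image" in prefs.channels and prompt.image_file:
        actions.append(("image", prompt.image_file))
    if "audio" in prefs.channels and prompt.audio_file:
        mode = "autoplay" if prefs.autoplay_audio else "on_request"
        actions.append(("audio", prompt.audio_file, mode))
    if "haptic" in prefs.channels:
        actions.append(("haptic", "short_vibration"))
    return actions
```

Keeping the preferences separate from the prompt content means the same task material can be presented differently to each user without being rewritten.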

Decision Module

The decision module works as a central processing unit that accepts data from the other four modules and provides real-time prompts to the interface module. It consists mainly of two processes: activity recognition and decision logic. Activity recognition collects user-specific data, task-specific data, processed data, and sensor data from the other modules. Decision logic analyzes and matches this input to generate prompts for the interface module.

We are using a combination of machine learning techniques to build this component, including Naïve Bayes, Support Vector Machine (SVM), and Fuzzy Logic (Das et al. 2012; Mihailidis and Fernie 2002; Intille et al. 2004). Both linear and non-linear task prompting approaches are investigated (Bodine et al. 2011). Initially, the module relies on semi-automatic decision inputs from caregivers (mentors) to support specific user activities. After training on previous user activity, the system will operate in an automatic mode to aid in everyday user activity. We are currently comparing these techniques to identify the approach best suited to our project in terms of both prediction accuracy and computational cost.
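To make the two processes concrete, the sketch below pairs a minimal Gaussian Naïve Bayes classifier (activity recognition) with a simple matching rule (decision logic). It illustrates only one of the techniques under comparison and is not the prototype's code: the class, the function `decide_prompt`, the two-feature input (motion variability and sound level), and the rule of prompting only on a mismatch are all assumptions made for this example.

```python
import math
from collections import defaultdict


class GaussianNaiveBayes:
    """Minimal Gaussian Naive Bayes over numeric feature vectors."""

    def fit(self, X, y):
        by_class = defaultdict(list)
        for features, label in zip(X, y):
            by_class[label].append(features)
        self.stats = {}
        for label, rows in by_class.items():
            columns = list(zip(*rows))
            means = [sum(col) / len(col) for col in columns]
            # A small floor keeps the variance positive for constant features.
            variances = [
                sum((v - m) ** 2 for v in col) / len(col) + 1e-6
                for col, m in zip(columns, means)
            ]
            self.stats[label] = (math.log(len(rows) / len(X)), means, variances)
        return self

    def predict(self, features):
        def log_posterior(label):
            log_prior, means, variances = self.stats[label]
            log_likelihood = sum(
                -0.5 * math.log(2 * math.pi * var) - (x - m) ** 2 / (2 * var)
                for x, m, var in zip(features, means, variances)
            )
            return log_prior + log_likelihood
        return max(self.stats, key=log_posterior)


def decide_prompt(model, features, expected_activity, prompt_text):
    """Prompt only when the recognized activity differs from the expected one."""
    recognized = model.predict(features)
    return None if recognized == expected_activity else prompt_text
```

In the semi-automatic phase, the labels used for `fit` would come from caregiver input; once enough labeled activity has accumulated, the same `predict` call could drive prompting without caregiver involvement.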

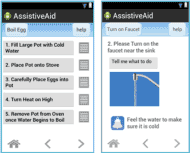

USER INTERFACE

In order to provide scalable prompts for users with different capabilities, the interface must support smooth navigation between different task modules and different levels of granularity. Figure 3 shows two sample interfaces for two levels of prompts. The interface on the left corresponds to the third level in Figure 2, explaining the major steps to boil eggs. The user can click the button next to each step to find more details about that step. The interface on the right shows the bottom level page with the most detailed content. This page explains the sub-step ‘Turn on faucet’ (1.1.1.3.1.1.2 in Figure 2). The prompt is delivered through three output channels: text instruction, audio instruction (either automatically played or played when the user clicks on the ‘Tell me what to do’ button), and an image. The bottom level page may also contain warnings, tips, and reminders. Warnings include information about potentially dangerous situations that require caution (e.g., hot surface); tips provide useful information such as the location of a specific utensil; reminders notify users about situations that may induce sensory-based challenges (e.g., the noise of an oven fan).
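Navigation between levels can be illustrated by resolving a dotted step identifier against the task tree. The sketch below assumes, for illustration only, that the first component of an identifier names the root task and that each later component is the 1-based position of a child under its parent; `Step`, `resolve`, and `breadcrumb` are hypothetical names, and the short identifiers used with the toy tree do not correspond to the numbering in Figure 2.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    label: str
    children: List["Step"] = field(default_factory=list)


def resolve(root, step_id):
    """Follow a dotted identifier such as '1.2.1' from the root to a step.

    The first component names the root itself; every later component is the
    1-based position of a child under the step reached so far.
    """
    parts = [int(p) for p in step_id.split(".")]
    if parts[0] != 1:
        raise KeyError(step_id)
    node = root
    for position in parts[1:]:
        if not 1 <= position <= len(node.children):
            raise KeyError(step_id)
        node = node.children[position - 1]
    return node


def breadcrumb(root, step_id):
    """Labels from the root down to the step, for a 'where am I' display."""
    parts = step_id.split(".")
    return [resolve(root, ".".join(parts[:i])).label for i in range(1, len(parts) + 1)]
```

With a toy tree whose root is "Prepare egg salad" and whose first child "Boil egg" has the children "Find a pot" and "Turn on faucet", the identifier `1.1.2` resolves to "Turn on faucet", and `breadcrumb` returns the path of labels leading to it.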

INITIAL EVALUATION

We conducted a user study to evaluate the initial proof-of-concept prototype. Three high school students with ASD participated in the study. One is high functioning, one is low functioning, and the third is in between. During the study, we demonstrated the application prototype to the participants, and the participants then navigated through the cooking task modules. The feedback from the participants was highly positive. All three participants stated that the application would be very helpful in their daily routine. They commended multiple features of the application, such as the scalable level of prompts, multiple output channels, and the smooth navigation design. All participants expressed interest in using the fully functional application in the next stage of the project development.

CONCLUSION

This research aims to develop a portable, context-aware assistive application to help individuals on the Autism spectrum live a more independent life. We believe that this solution has the potential to alleviate the workload of the caregivers of people with ASD. It will be particularly helpful when the caregivers are not available. We are currently working on the following tasks:

- implementing and testing the decision module,

- testing and fine-tuning the context and the interface modules,

- collecting and developing materials for the task and the user modules,

- preparing to conduct the first comprehensive user evaluation in early spring 2014.

REFERENCES

Bodine, C., McGrew, G., Melonis, M., Heyn, P., Struemph, T., Elfner, S., Goldberg, A., Philips, G., and Lanning, M. (2011). Context-Aware Prompting System (CAPS) for persons with cognitive disabilities: A feasibility pilot study. Poster presentation at the 11th Annual Coleman Institute Conference.

Chang, Y., Chang, W., and Wang, T. (2009). Context-aware prompting to transition autonomously through vocational tasks for individuals with cognitive impairments. Proceedings of ACM ASSETS 2009. 19-26.

Das, B., Seelye, A., Thomas, B., Cook, D., Holder, L., and Schmitter-Edgecombe, M. (2012). Using smart phones for context-aware prompting in smart environments. Proceedings of CCNC 2012. 399-403.

Hughes, C. (1996). Brief report: Planning problems in Autism at the level of motor control. Journal of Autism and Developmental Disorders, 26, 99–106.

Intille, S., Bao, L., Tapia, E., and Rondoni, J. (2004). Acquiring in situ training data for context-aware ubiquitous computing applications. Proceedings of the SIGCHI conference on Human factors in computing systems, 1-8.

Jensen, V., and Spannagel, S. (2011). The spectrum of Autism spectrum disorder: A spectrum of needs, services, and challenges. Journal of Contemporary Psychotherapy, 41(1), 1-9.

Landa, R.J. and Goldberg, M.C. (2005). Language, social and executive functions in high functioning Autism: A continuum of performance. Journal of Autism and Developmental Disorders, 35, 557–573.

Melonis, M., Mihailidis, A., Keyfitz, R., Grzes, M., Hoey, J., and Bodine, C. (2012). Empowering adults with cognitive disability through inclusion of non-linear context aware prompting technology (N-CAPS). Proceedings of RESNA 2012.

Mihailidis, A. and Fernie, G. (2002). Context-aware assistive devices for older adults with dementia. Gerontechnology, 2(2), 173-188.

Mileo, A., Merico, D., and Bisiani, R. (2008). Wireless sensor networks supporting context-aware reasoning in assisted living. Proceedings of ACM PETRA’08.

Nehmer, J., Karshmer, A., Becker, M., and Lamm, R. (2006). Living assistive systems – An ambient intelligence approach. Proceedings of ICSE 2006. 43-50.

Palmen, A., and Didden, R. (2012). Task engagement in young adults with high-functioning Autism spectrum disorders: Generalization effects of behavioral skills training. Research in Autism Spectrum Disorders, 6, 1377-1388.

Tseng, M., Fu, C., Cermak, S. A., Lu, L., and Shieh, J. (2011). Emotional and behavioral problems in preschool children with Autism: Relationship with sensory processing dysfunction. Research in Autism Spectrum Disorders, 5, 1441-1450.

ACKNOWLEDGEMENT

We would like to thank the School of Emerging Technologies at Towson University for funding our research project.
