Roger O. Smith, PhD, OT, FAOTA, RESNA Fellow1

Jaclyn K. Schwartz, MS, OTR/L1

Sheikh Iqbal Ahamed, PhD2

1 University of Wisconsin – Milwaukee

2 Marquette University

Introduction

People with disabilities frequently experience accessibility problems in the community despite federal, state, and city laws and regulations that mandate accessible features (McClain, 2000; U.S. Architectural and Transportation Barriers Compliance Board, 1990). Consequently, people with disabilities continue to be hindered in their attempts to access community spaces and are unable to participate equitably in the activities they need and want to do (Harris Interactive, 2010).

Objective

New interventions using innovative strategies are needed to help people with disabilities overcome environmental barriers. The Access Ratings for Buildings (AR-B) R&D team has been working to fill this gap by developing an app suite as an information technology resource that leverages expert and consumer crowdsourcing concepts. The goal of this proceeding is to update and discuss the late-stage developments of this technology-enhanced intervention.

Product

The AR-B suite of apps uses smartphone and integrated web technology to provide users with individualized accessibility information, allowing them to anticipate and problem-solve expected barriers. For example, a wheelchair user could use the apps to find out that the new restaurant he wants to try has two steps at the entrance, and that he should therefore bring his portable ramp. To provide users with this type of information, two applications were developed.

Access Tools

The Access Tools app helps trained raters objectively assess buildings and enter accessibility information into a cloud database. Each building is assessed feature by feature. The Access Tools app will be able to evaluate 11 different building features for common accessibility issues. Through this process, the database will record, for example, the clear width of doorways and whether there are accessible entrances.

| Doorways | Restaurant Specific Features |
| Elevators | Restrooms |
| Floor & Ground Surfaces | Routes |
| Handrails | Signs |
| Parking | Stairways |
| Ramps | Tables and Chairs |
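As an illustration of the kind of feature-level data described above, the following is a minimal sketch of how a doorway measurement might be stored and checked. The record layout, field names, and the `has_accessible_entrance` helper are hypothetical and are not taken from the AR-B database. The 32-inch threshold is the minimum clear door width in the ADA Standards; whether the app applies this exact rule is an assumption.

```python
from dataclasses import dataclass

# Assumption: 32 inches is the ADA minimum clear door width.
# The paper does not state which threshold the app applies.
MIN_CLEAR_WIDTH_IN = 32.0


@dataclass
class DoorwayRecord:
    """Hypothetical record for one rated doorway (field names are illustrative)."""
    building_id: str
    is_entrance: bool
    clear_width_in: float  # measured clear opening width, in inches
    steps_to_enter: int    # number of steps a visitor must climb at this door

    def is_accessible(self) -> bool:
        # A doorway counts as accessible here if it is wide enough and step-free.
        return self.clear_width_in >= MIN_CLEAR_WIDTH_IN and self.steps_to_enter == 0


def has_accessible_entrance(records: list[DoorwayRecord]) -> bool:
    """Return True if at least one entrance doorway passes the check."""
    return any(r.is_entrance and r.is_accessible() for r in records)


# Example: a restaurant with a stepped front door and a step-free side entrance.
doors = [
    DoorwayRecord("restaurant-001", is_entrance=True, clear_width_in=34.0, steps_to_enter=2),
    DoorwayRecord("restaurant-001", is_entrance=True, clear_width_in=36.0, steps_to_enter=0),
    DoorwayRecord("restaurant-001", is_entrance=False, clear_width_in=30.0, steps_to_enter=0),
]
print(has_accessible_entrance(doors))
```

Storing raw measurements rather than a single pass/fail flag would let the same record answer different questions for different users, which fits the individualized approach the suite describes.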

Persons interested in evaluating public building accessibility must watch a series of web tutorials and then pass a test on the components of a basic evaluation. Once a person has passed the test, they may enter accessibility information into the database for all to access. Anyone who is interested may complete the training program to become a trained building rater; however, we anticipate that most evaluators will be rehabilitation professionals or architects, engineers, and disability advocates with additional accessibility background.

Access Place

Access Place is the second app of the AR-B suite. Access Place enables consumers to learn about the accessibility of a building by viewing data entered by trained raters, to read reviews of other individuals' experiences, and to upload their own experiences to share with other people with disabilities.

The Access Place app was built with three enhancements that position it beyond similar available apps (e.g., AbleRoad™ Associates Inc., 2013). First, the Access Place app interfaces with the Google Places API to allow users to find real buildings, even with limited information. Second, the app allows users to create custom profiles. While other apps require users to place themselves in predefined categories, Access Place recognizes that people with disabilities are individuals and cannot be easily placed in labeled bins. The Access Place app allows users to describe their functional limitations using a series of 36 sliders. Using the information that users provide, the Access Place algorithm allows users to read reviews from similar consumers and gives them anticipated accessibility ratings in advance. Finally, the Access Place app was designed with accessibility in mind. Designers implemented universal design principles when creating the interface to ensure optimum usability by people with disabilities.

Methods

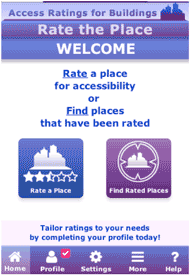

Figure 1. Sample Access Place App Interface

The AR-B App Suite developers have been applying an overall user-centered design approach (Kangas & Kinnunen, 2005). Last year, the team described the initial stages of app development, including conducting research, developing system requirements, and designing the basic user interface (Anson, Schwartz, & Smith, 2013; Edyburn, 2013; Schwartz, 2013). This proceeding details further progress on the user interface, in addition to the pilot testing and revision stages.

User Interface

The designer created the interface using the principles of universal design, information on web and mobile accessibility, and xScope, design software that allows disability simulations during the development process (the iconfactory & ARTIS Software, 2014).

Pilot Testing

Researchers have conducted two stages of pilot testing: internal and external. During internal testing, team members recorded all major errors after each development round. Once the applications were determined to be usable, external testing commenced. External testing has been conducted with experts, including individuals with disabilities. During testing, participants are asked to complete a series of activities using the apps. These include the evaluation of ramps in the Access Tools app and perusing and reviewing the Access Place app. Researchers have recorded, transcribed, and qualitatively analyzed the participants' transactions. All external testing has been approved by the Institutional Review Board at the University of Wisconsin-Milwaukee.

Revisions

After each round of testing, feedback and errors have been vetted by the team and entered into bug tracker software. The engineering team implements fixes and releases new versions for testing, resulting in an iterative process.

Results

User Interface

Drawing on the literature review, universal design principles, and the xScope software, the user interface incorporates several accessible design features, including large sans-serif fonts, custom buttons with a large surface area, increased distance between buttons, high contrast, and a blue and purple color palette.
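The paper does not say how contrast was measured. One common way to quantify "high contrast" is the contrast ratio defined in the Web Content Accessibility Guidelines (WCAG) 2.x, sketched below. The example color is hypothetical and is not taken from the AR-B palette.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Relative luminance of an sRGB color (0.0 for black, 1.0 for white)."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1.0 (same color) up to 21.0 (black on white)."""
    lum_fg, lum_bg = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(lum_fg, lum_bg), min(lum_fg, lum_bg)
    return (lighter + 0.05) / (darker + 0.05)


WHITE = (255, 255, 255)
BLACK = (0, 0, 0)
HYPOTHETICAL_PURPLE = (75, 0, 130)  # illustrative only, not the app's actual color

print(round(contrast_ratio(BLACK, WHITE), 2))
print(round(contrast_ratio(HYPOTHETICAL_PURPLE, WHITE), 2))
```

WCAG level AA asks for a ratio of at least 4.5:1 for normal text and 3:1 for large text, so a check like this can flag color pairs for review before user testing.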

Pilot Testing

Several themes emerged from the pilot testing transcripts. Participants (with and without disabilities) often voiced frustration when faced with software bugs and “glitches.” Participants who identified as building owners or service providers, as well as individuals with disabilities, reported that the apps demonstrated appropriate content and that the suite would be helpful in their day-to-day lives. The participants also reported that the Access Tools app took too long to evaluate a building. Participants reported that they wanted to be able to evaluate a building in approximately 30 minutes. Many of the testers with disabilities recommended additional accessibility features, such as better compatibility with VoiceOver. Finally, participants made serious recommendations to improve the functionality and usability of the software, ranging from more logical screen navigation structures to specific interface features.

Revisions

Currently, the engineering team is working on implementing revisions. Building-level evaluations are being shortened to accommodate trained raters while ensuring that all essential content remains. Figure 1 presents a sample of recent developments in the interface of the Access Place and Access Tools apps, demonstrating a more logical layout and enhanced accessibility features. Researchers anticipate another round of pilot testing followed by beta testing.

Discussion

Consumers found that the AR-B suite of apps provides an innovative solution to the problem of poor community accessibility. While users liked the idea of using an app to relay information, they frequently had problems with the app implementation, particularly the interface components. All users had low tolerance for error, indicating that the app must reach a very high level of performance before beta testing. Despite the researchers' best attempts at accessible design, user testing with people with a variety of disabilities was necessary to point out lurking inaccessible features. The AR-B project demonstrates that while mobile apps seem superficially simple, it can be very difficult to pair a highly sophisticated underlying software infrastructure with an accessible and simple interface.

Conclusion

People with disabilities continue to lack access to public spaces due to environmental barriers. The AR-B suite of apps is a unique solution to a complex problem. The Access Tools app allows trained raters to collect detailed building-level data. The Access Place app allows consumers to look up individualized accessibility information for buildings in their community. While the AR-B R&D team has successfully built the infrastructure of the system, user testing has identified several areas for improvement in the interface, accessibility, and usability components. Researchers are currently updating the app interface based on user feedback. While the app has made significant progress in terms of development, further research is needed on the effectiveness of this newly developed AR-B intervention.

References

AbleRoad™ Associates Inc. (2013). AbleRoad™ connects people with accessible places. Retrieved January 6, 2013, from https://ableroad.com/

Anson, D., Schwartz, J., & Smith, R. O. (2013, June 20-24). Environmental accessibility assessment: Alternative approaches for alternate users. Paper presented at the Rehabilitation Engineering and Assistive Technology Society of North America 2013 Conference, Bellevue, Washington, USA.

Edyburn, K., Schwartz, J., & Smith, R. O. (2013). A case study: Development of Access Ratings for Buildings "Consumer" mobile app. Paper presented at the Rehabilitation Engineering and Assistive Technology Society of North America 2013 Conference, Bellevue, Washington, USA.

Harris Interactive. (2010). The ADA, 20 Years Later. New York: Kessler Foundation and National Organization on Disability.

McClain, L. (2000). Shopping Center Wheelchair Accessibility: Ongoing Advocacy to Implement the Americans with Disabilities Act of 1990. Public Health Nursing, 17(3), 178-186.

Schwartz, J., O'Brien, C., Edyburn, K., Ahamed, S. I., & Smith, R. O. (2013). Smartphone based solutions to measure the built environment and enable participation. Paper presented at the Rehabilitation Engineering and Assistive Technology Society of North America 2013 Conference, Bellevue, Washington, USA.

the iconfactory, & ARTIS Software. (2014). XScope. Retrieved January 6, 2014, from http://xscopeapp.com/

U.S. Architectural and Transportation Barriers Compliance Board. (1990). Americans With Disabilities Act Accessibility Guidelines for Buildings and Facilities Pub.L. 101-336, 104 Stat. 327, enacted July 26, 1990 (Vol. 42 U.S.C. § 12101).

Acknowledgements

The AR-B Project is supported in part by the Department of Education, National Institute on Disability and Rehabilitation Research (NIDRR), grant number H133G100211. The opinions contained in this poster do not necessarily represent the policy of the U.S. Department of Education, and you should not assume endorsement by the Federal Government.
