EFFECTIVE COMPUTER ACCESS USING AN INTELLIGENT SCREEN READER

The goal of the intelligent screen reader project is to increase the effectiveness of screen readers in accessing information on the World Wide Web (WWW). This paper presents the design and current status of the software. Future work includes continuing development of the software and evaluating it with visually impaired users.

BACKGROUND

Currently, only 46% of individuals of working age (21-64 years old) with visual impairments are employed [1]. A survey performed by Earl and Leventhal found that many screen reader users had difficulty performing tasks associated with web browsing, which is a critical computer skill [2]. Another study revealed that visually impaired computer users were significantly slower than able-bodied users at several web-browsing tasks [3]. Thus, a screen reader that provides effective information access will benefit visually impaired users in the domains of education and employment.

STATEMENT OF THE PROBLEM

There are many screen readers on the market today, such as JAWS for Windows, IBM's Home Page Reader, and Window-Eyes, and much active research focuses on making computers more accessible to people who are blind [4] [5] [6]. However, none of the current products or research systems incorporates knowledge about what the user is actually doing. JAWS does provide a mechanism for adapting its behavior, called scripts. Unfortunately, the scripting language is very complex, so most users never take advantage of it. We want to develop software that (1) observes what the user is doing, (2) creates a script that can perform that task automatically in the future, and (3) tells the user when a script exists that is relevant to what the user is doing manually.

DESIGN

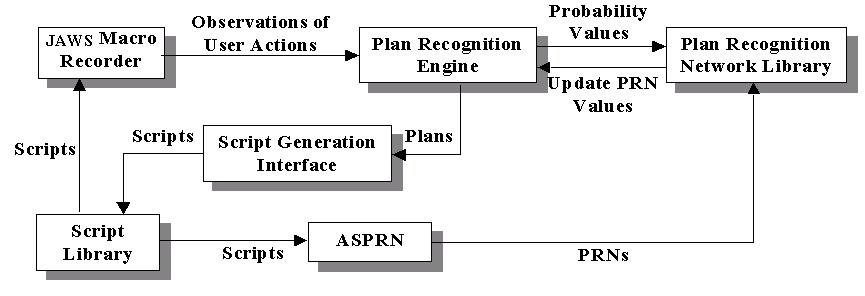

The components of the system are shown in Fig. 1. The script library will store scripts that perform common tasks, and it will hold two kinds of scripts. Small scripts perform basic task units, such as following a link or looking for a keyword; these task units will be very useful in the script generation and dynamic plan recognition processes. Large scripts record higher-level tasks, such as retrieving a weather forecast or finding the price of a stock.
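To make the two kinds of library entries concrete, here is a minimal Python sketch under stated assumptions: a script is represented as a named list of steps, a step that names another library script is treated as a call to it, and the class names (`Script`, `ScriptLibrary`) and action names (`Tab`, `Enter`, `WaitForNewPage`, `FindText`) are hypothetical placeholders, not JAWS script syntax or the project's actual storage format.

```python
from dataclasses import dataclass, field


@dataclass
class Script:
    """A library entry. This representation is hypothetical, not JAWS script syntax."""
    name: str
    kind: str         # "small" = basic task unit, "large" = higher-level task
    steps: list[str]  # primitive actions, or names of other library scripts


@dataclass
class ScriptLibrary:
    scripts: dict[str, Script] = field(default_factory=dict)

    def add(self, script: Script) -> None:
        if script.kind not in ("small", "large"):
            raise ValueError(f"unknown script kind: {script.kind!r}")
        self.scripts[script.name] = script

    def names_of_kind(self, kind: str) -> list[str]:
        return sorted(n for n, s in self.scripts.items() if s.kind == kind)

    def expand(self, name: str, _stack: tuple[str, ...] = ()) -> list[str]:
        """Inline calls to other library scripts until only primitive actions remain."""
        if name in _stack:
            raise ValueError("recursive script call: " + " -> ".join(_stack + (name,)))
        actions: list[str] = []
        for step in self.scripts[name].steps:
            if step in self.scripts:
                actions.extend(self.expand(step, _stack + (name,)))
            else:
                actions.append(step)
        return actions


# Usage: two small task units composed into one large task (all names hypothetical).
lib = ScriptLibrary()
lib.add(Script("FollowLink", "small", ["Tab", "Enter", "WaitForNewPage"]))
lib.add(Script("FindKeyword", "small", ["FindText"]))
lib.add(Script("GetWeather", "large", ["FollowLink", "FindKeyword"]))
print(lib.expand("GetWeather"))  # ['Tab', 'Enter', 'WaitForNewPage', 'FindText']
```

Storing large scripts as compositions of small ones keeps the task units reusable; the recursion guard in `expand` is an added safety check, not something the design above specifies.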

The Script Generation Interface (SGI) generates a script from a list of user actions, with or without intervention from the user. It takes as input a "raw" JAWS script file (created by the macro recorder built into JAWS) and outputs an optimized script. The SGI allows the user to modify and optimize the script manually, line by line. It also provides automatic script generation using Plan Recognition Networks (PRNs), which allow a set of lines to be reduced to a single equivalent function call. The user interface of the SGI is shown in Fig. 2.

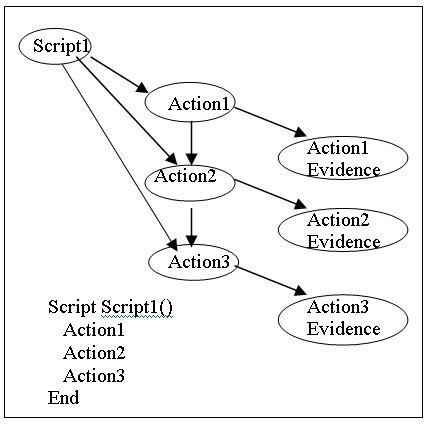

The Automated Synthesis of Plan Recognition Networks (ASPRN), developed by Intelligent Reasoning Systems, provides a powerful representation and reasoning scheme for plan recognition. It is a collection of theories and algorithms that takes a script representation as input and outputs a specially constructed belief network, known as a Plan Recognition Network (PRN). An example of a simple script representation and its PRN is shown in Fig. 3.
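The internal construction of a PRN is not described here. As a simplified illustration only, assume a belief network with one hidden node for the script $S$ the user is carrying out and one child node per observed action $o_i$, with the actions conditionally independent given the script. This naive-Bayes structure is an assumption: it ignores action order and may be much simpler than what ASPRN constructs. Under it, the posterior for a candidate script $S_k$ is:

$$
P(S_k \mid o_1,\dots,o_n) = \frac{P(S_k)\,\prod_{i=1}^{n} P(o_i \mid S_k)}{\sum_{j} P(S_j)\,\prod_{i=1}^{n} P(o_i \mid S_j)}
$$

With hypothetical numbers (two candidate scripts, FollowLink and FindKeyword, equal priors of 0.5, observations $o_1 = \text{Tab}$ and $o_2 = \text{Enter}$, and likelihoods $P(\text{Tab}\mid\text{FollowLink}) = 0.5$, $P(\text{Enter}\mid\text{FollowLink}) = 0.4$, $P(\text{Tab}\mid\text{FindKeyword}) = 0.1$, $P(\text{Enter}\mid\text{FindKeyword}) = 0.2$):

$$
P(\text{FollowLink} \mid \text{Tab}, \text{Enter}) = \frac{0.5 \times 0.5 \times 0.4}{0.5 \times 0.5 \times 0.4 + 0.5 \times 0.1 \times 0.2} = \frac{0.1}{0.11} \approx 0.91
$$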

The Plan Recognition Engine uses the PRNs in the PRN Library to predict the user's intention from observations of the user's actions captured by JAWS. Once it finds a script that matches the observed actions with high probability, it notifies the user that a script closely matches his or her current activity. The user can then modify the existing script or use it unchanged. Scripts can be added to the script library for future use.
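The observe-then-prompt loop of the engine can be sketched as follows. This is a minimal Python sketch, not the project's engine: it assumes each candidate script is summarized by a prior and per-action likelihoods (a naive-Bayes stand-in for PRN inference), uses a hypothetical confidence threshold of 0.9, and the names `PlanRecognitionEngine`, `observe`, `notify`, and all action tokens and probabilities are hypothetical.

```python
import math
from typing import Callable


class PlanRecognitionEngine:
    """Hypothetical online recognizer: updates per-script log scores as each action
    arrives and calls notify(script, probability) the first time a script's
    posterior reaches the threshold."""

    def __init__(self, priors: dict[str, float],
                 likelihoods: dict[str, dict[str, float]],
                 notify: Callable[[str, float], None],
                 threshold: float = 0.9, unseen: float = 1e-3) -> None:
        self.likelihoods = likelihoods
        self.notify = notify
        self.threshold = threshold
        self.unseen = unseen  # probability of an action the script does not list
        self.log_scores = {s: math.log(p) for s, p in priors.items()}
        self.announced: set[str] = set()

    def posterior(self) -> dict[str, float]:
        # Subtract the max log score before exponentiating to avoid underflow.
        top = max(self.log_scores.values())
        weights = {s: math.exp(v - top) for s, v in self.log_scores.items()}
        total = sum(weights.values())
        return {s: w / total for s, w in weights.items()}

    def observe(self, action: str) -> None:
        for script in self.log_scores:
            p = self.likelihoods[script].get(action, self.unseen)
            self.log_scores[script] += math.log(p)
        post = self.posterior()
        best = max(post, key=post.get)
        if post[best] >= self.threshold and best not in self.announced:
            self.announced.add(best)
            self.notify(best, post[best])


# Usage with hypothetical numbers: two candidate scripts that both begin with Tab.
priors = {"FollowLink": 0.5, "GetWeather": 0.5}
likelihoods = {
    "FollowLink": {"Tab": 0.5, "Enter": 0.4, "WaitForNewPage": 0.1},
    "GetWeather": {"Tab": 0.6, "Enter": 0.2, "FindText": 0.2},
}
prompts: list[str] = []
engine = PlanRecognitionEngine(priors, likelihoods,
                               notify=lambda s, p: prompts.append(s))
prompts_after_each_action = []
for action in ["Tab", "Enter", "WaitForNewPage"]:
    engine.observe(action)
    prompts_after_each_action.append(len(prompts))
print(prompts, prompts_after_each_action)  # ['FollowLink'] [0, 0, 1]
```

No prompt is issued while the two scripts remain ambiguous; FollowLink is announced only after the third action pushes its posterior above 0.9. Announcing each script at most once avoids repeatedly interrupting the user, though the text does not say how the real engine handles repeats.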

DEVELOPMENT

The following components have been developed to date.

Macro Recorder

Freedom Scientific has implemented a macro recorder that records all of the user's keystrokes as well as certain events (such as waiting for a new window to appear). The macros are saved as JAWS script files, and playback can be triggered by an assigned hot key combination. The scripts generated by the macro recorder serve as the observations of user behavior used by the Plan Recognition Engine.

Script Generation Interface (SGI)

The SGI, developed by the University of Pittsburgh, now supports both manual and automatic script generation. For example (see Fig. 2), suppose a user presses the Tab key several times to reach a specific link, presses Enter to follow the link, and then waits for the new web page to be displayed. The SGI recognizes that the user is most likely performing a "follow-link" task and replaces the original subset of lines with a single script call, "Perform Script FollowLink( )".
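The follow-link reduction in this example can be sketched as a pattern rewrite over the recorded lines. In this minimal Python sketch the raw script is assumed to be a list of action tokens (`Tab`, `Enter`, `WaitForNewPage`, `ReadText`), which are hypothetical placeholders rather than actual JAWS script statements; only the replacement string comes from the text. A fixed pattern stands in here for the PRN-based recognition the SGI uses, and `reduce_follow_link` is a hypothetical name.

```python
FOLLOW_LINK_CALL = "Perform Script FollowLink( )"  # replacement string from the text


def reduce_follow_link(lines: list[str]) -> list[str]:
    """Collapse each run of one or more Tab lines followed by Enter and
    WaitForNewPage into a single FollowLink call; other lines pass through."""
    out: list[str] = []
    i, n = 0, len(lines)
    while i < n:
        j = i
        while j < n and lines[j] == "Tab":  # skip over the run of Tab presses
            j += 1
        if j > i and j + 1 < n and lines[j] == "Enter" and lines[j + 1] == "WaitForNewPage":
            out.append(FOLLOW_LINK_CALL)
            i = j + 2  # resume after the WaitForNewPage line
        else:
            out.append(lines[i])
            i += 1
    return out


raw = ["Tab", "Tab", "Tab", "Enter", "WaitForNewPage", "ReadText"]
print(reduce_follow_link(raw))  # ['Perform Script FollowLink( )', 'ReadText']
```

Like the reduction described above, this sketch discards how many Tab presses were needed, so on its own it does not record which link was followed; how FollowLink( ) identifies its target is not stated in the text.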

FUTURE WORK

As the design and development sections indicate, much work remains to be done. Future work has three main aspects. First, the dynamic plan recognition feature needs to be implemented in the SGI. Second, the components of the system need to be fully integrated with each other. Finally, we would like to evaluate the developed software through user trials. Our hypothesis is that users of the intelligent JAWS screen reader will take significantly less time than users of the regular JAWS screen reader to perform typical web-browsing tasks.

DISCUSSION

For users to access desired information effectively while browsing the web, it is not enough to improve the accessibility of web pages by adhering to established guidelines. It is also necessary to examine what the user is doing and to adapt the screen reader so that it helps the user achieve his or her goal.

REFERENCES

Watanabe T, Okada S, Ifukube T. Development of a GUI screen reader for blind persons. Systems and Computers in Japan. 1998;29:18-27.

ACKNOWLEDGMENTS

This study is supported by a Phase II Small Business Innovation Research (SBIR) Grant from The National Science Foundation (Grant #91590).