A Robotic Guide for the Visually Impaired in Indoor Environments

ABSTRACT

An assisted navigation system for the visually impaired is presented. The system consists of a mobile robotic guide and small sensors embedded in the environment. Several aspects of assisted navigation observed in a pilot study with five visually impaired subjects are discussed.

KEYWORDS

Assisted navigation, visual impairment, robotic guides.

BACKGROUND

The 11.4 million visually impaired people in the United States continue to face a principal functional barrier: the inability to navigate. This inability denies them equal access to many private and public buildings, limits their use of public transportation, and makes the visually impaired a group with one of the highest unemployment rates (74%). Thus, there is a clear need for systems that improve the wayfinding abilities of the visually impaired, especially in complex unfamiliar environments, e.g., airports, conference centers, and office spaces, where conventional aids, such as white canes and guide dogs, are of limited use.

Several solutions have been proposed to address the navigation needs of the visually impaired. The Global Positioning System (GPS) is used in GPS-Talk©, a system developed by the Sendero Group, LLC [5]. Infrared sensors are used in the Talking Signs© technology [2]. Radio Frequency Identification (RFID) sensors are used in an assisted navigation system for indoor environments developed at the Atlanta VA Research and Development Center [3]. Ultrasonic sensors were used in the NavBelt, a wearable obstacle avoidance device developed at the University of Michigan [4]. While the existing solutions have shown promise, they have three important limitations: 1) dependency on one sensor type; 2) limited communication capabilities; and 3) failure to reduce the user's navigation-related physical and mental fatigue.

RESEARCH QUESTION

The objective of this study was to create a proof-of-concept prototype of a robotic guide (RG) for the visually impaired in unfamiliar indoor environments. The prototype is intended to test our hypothesis that robot-assisted navigation has the potential to overcome the key limitations mentioned above. First, the RG relies on multiple sensor types, which makes navigation more robust. Second, users can interact with the RG via speech, a wearable keyboard, and audio icons. Third, the amount of gear the user must carry is significantly reduced, because the equipment resides on the robot. Since the RG knows the map of the environment, all navigation-related decisions are made by the robot, not the user. Finally, users do not have to abandon their conventional aids, e.g., white canes and guide dogs, to use the RG.

METHOD

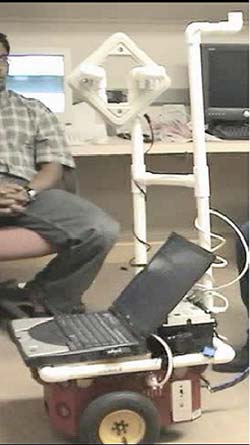

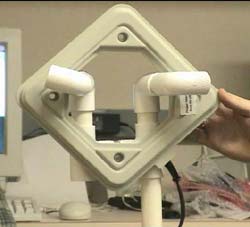

As shown in Figure 1.a (leftmost), the RG is built on top of the Pioneer 2DX commercial robotic platform from the ActivMedia Corporation. The platform has three wheels and 16 ultrasonic sonars, and is equipped with three rechargeable Power Sonic PS-1270 onboard batteries that can operate for up to 10 hours at a time. What turns the platform into a robotic guide is a wayfinding toolkit (WT) mounted on top of the platform and powered from the onboard batteries. The WT resides in a PVC pipe structure attached to the top of the platform. The toolkit includes a Dell Inspiron 8200 laptop connected to the platform's microcontroller through a USB-to-serial cable. The laptop interfaces to an RFID reader (Figure 1.b) through another USB-to-serial cable. The Texas Instruments (TI) Series 2000 RFID reader is connected to a square 200 mm by 200 mm RFID RI-ANT-GO2E antenna (Figure 1.c) that detects RFID tags placed in the environment. The arrow on the right side of Figure 1.d points to a TI RFID Slim Disk tag attached to a wall. These tags can be attached to any object in the environment or worn on clothing. They do not require an external power source or a direct line of sight to be detected by the RFID reader. A dog leash is attached to the battery bay handle at the back of the platform. Visually impaired individuals follow the RG by holding onto that leash (Figure 1.e).

The RG's software consists of two levels: control and topological. The control level is implemented with the following routines that run on the laptop: follow-wall, turn-left, turn-right, avoid-obstacles, go-thru-doorway, pass-doorway, and make-u-turn. These behaviors build on the potential fields approach [1]: the sonar readings are converted into attractive and repulsive vectors that are summed to decide where the robot should go next. The RG's reliable RFID-based localization allows it to overcome the problem of local minima that many potential fields approaches experience in the absence of reliable localization. The topological level is implemented in the Map Server and the Path Planner. The Map Server contains a topological map of the environment in which the RG operates, represented as a graph of tags and behaviors. The Path Planner uses the standard breadth-first search algorithm to find a path from one location to another.
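The two levels can be illustrated with a minimal Python sketch. It is not the RG's actual code: the function names, the linear repulsion rule, the gain, the tag names, and the example map are all assumptions made for illustration. Only the general ideas (summing attractive and repulsive vectors, and breadth-first search over a graph of tags and behaviors) come from the description above.

```python
import math
from collections import deque


def steer(goal_heading_deg, sonar_angles_deg, sonar_ranges_m,
          max_range_m=2.5, goal_gain=1.0):
    """Return a heading (degrees) from summed attractive and repulsive vectors.

    Assumed rule: each sonar reading closer than max_range_m contributes a
    vector pointing away from the obstacle, stronger when the obstacle is closer.
    """
    goal = math.radians(goal_heading_deg)
    x = goal_gain * math.cos(goal)  # attractive vector toward the goal heading
    y = goal_gain * math.sin(goal)
    for angle_deg, rng in zip(sonar_angles_deg, sonar_ranges_m):
        if rng >= max_range_m:
            continue  # nothing detected within range: no repulsion
        strength = (max_range_m - rng) / max_range_m
        a = math.radians(angle_deg)
        x -= strength * math.cos(a)  # repulsive vector points away from obstacle
        y -= strength * math.sin(a)
    return math.degrees(math.atan2(y, x))


def bfs_path(tag_map, start, goal):
    """Breadth-first search over a graph of tags linked by behaviors.

    tag_map maps a tag to a list of (behavior, next_tag) edges.
    Returns a list of (behavior, tag_reached) steps, or None if unreachable.
    """
    if start == goal:
        return []
    parents = {start: None}  # tag -> (previous tag, behavior used)
    queue = deque([start])
    while queue:
        tag = queue.popleft()
        for behavior, nxt in tag_map.get(tag, []):
            if nxt in parents:
                continue  # already reached by an equally short or shorter path
            parents[nxt] = (tag, behavior)
            if nxt == goal:
                steps, node = [], goal
                while parents[node] is not None:
                    prev, beh = parents[node]
                    steps.append((beh, node))
                    node = prev
                steps.reverse()
                return steps
            queue.append(nxt)
    return None


# Hypothetical map: tag names are invented; behavior names follow the text.
TAG_MAP = {
    "hall_start": [("follow-wall", "hall_corner")],
    "hall_corner": [("turn-left", "office_door"), ("follow-wall", "lounge_door")],
    "office_door": [("go-thru-doorway", "office")],
    "lounge_door": [("go-thru-doorway", "lounge")],
}

if __name__ == "__main__":
    print(bfs_path(TAG_MAP, "hall_start", "office"))
    print(steer(0.0, [45.0], [0.5]))  # obstacle ahead-left pushes the heading right
```

In this sketch the planner returns the sequence of behaviors to execute between tags, which is one plausible way a graph of tags and behaviors could drive the control level; the source does not specify the interface between the two levels.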

Visually impaired users interact with the RG through speech or a wearable keypad. Speech is received by the RG through a wireless microphone placed on the user's clothing. Speech is recognized and synthesized with Microsoft's Speech API (SAPI) 5.1 running on the laptop. The RG generates speech messages using SAPI's text-to-speech engine or audio icons, e.g., sounds of water bubbles. The messages are played in one headphone that the user can wear either on her ear or around her neck. To other people in the environment, the RG is personified as Merlin, a Microsoft software character, always present on the onboard laptop's screen (Figure 1.f).

RESULTS

The RG was evaluated with five visually impaired participants (no more than light perception) over a period of two months. Two participants were guide dog users and the other three used white canes. The participants were asked to use the RG to navigate to three distinct locations (an office, a lounge, and a bathroom) in an office area of approximately 50 square meters at the Utah State University (USU) Computer Science Department. All participants were new to the environment and navigated approximately 40 meters to get to all destinations. All five participants reached the assigned destinations.

DISCUSSION

The exit interviews showed that the participants liked the system and considered it a very useful aid in unfamiliar environments. They especially liked that they did not have to abandon their white canes and guide dogs. Concerns were expressed about the RG's slow speed and movement jerkiness. The RG's speed was 0.5 m/s, which is below normal walking speeds of 1.2-1.5 m/s. The RG's navigation is currently based on sonars, which are sometimes unreliable due to specular reflection and cross talk. In addition, since the sonars' effective range is 2.5 meters, the robot's speed was adjusted so that it could stop in time to avoid bumping into people. The jerky movements occurred when the sonars either underestimated or overestimated the distances to the closest objects. Further work is planned to increase the speed and decrease movement jerkiness by integrating a laser range finder into the RG's wayfinding toolkit. The range finder's effective range is 8-10 meters.
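The relationship between sensing range and speed can be made concrete with a short calculation. The sketch below is only illustrative: it assumes that the time available to react is simply the sensing range divided by the speed, and that the same time budget would be kept with a longer-range sensor. The text does not state how the speed was actually chosen, and the function names are hypothetical.

```python
def time_to_cover(range_m, speed_mps):
    """Seconds the robot needs to travel its full sensing range at a given speed."""
    return range_m / speed_mps


def speed_for_budget(range_m, budget_s):
    """Speed at which the sensing range is covered in exactly budget_s seconds."""
    return range_m / budget_s


SONAR_RANGE_M = 2.5   # sonars' effective range, from the text
RG_SPEED_MPS = 0.5    # RG's speed, from the text

# Assumption: this reaction-time budget stays the same with a new sensor.
budget_s = time_to_cover(SONAR_RANGE_M, RG_SPEED_MPS)

# Laser range finder's effective range of 8-10 meters, from the text.
laser_speed_low = speed_for_budget(8.0, budget_s)
laser_speed_high = speed_for_budget(10.0, budget_s)

if __name__ == "__main__":
    print(budget_s, laser_speed_low, laser_speed_high)
```

Under these assumptions, a sensing range of 8-10 meters would leave room for speeds at or above the normal walking range of 1.2-1.5 m/s, which is consistent with the motivation for adding the laser range finder. Actual safe speeds would also depend on braking distance and sensor reliability, which this calculation ignores.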

REFERENCES

- [1] Arkin, R.C. (1998). Behavior-based robotics. Cambridge, MA: MIT Press.

- [2] Marston, J.R., & Golledge, R.G. (2000). Towards an accessible city: Removing functional barriers for the blind and visually impaired: A case for auditory signs. Technical report, Department of Geography, University of California at Santa Barbara.

- [3] Ross, D.A., & Blasch, B.B. (2002). Development of a wearable computer orientation system. IEEE Personal and Ubiquitous Computing, 6, 49–63.

- [4] Shoval, S., Borenstein, J., & Koren, Y. (1994). Mobile robot obstacle avoidance in a computerized travel aid for the blind. Proceedings of IEEE International Conference on Robotics and Automation, San Diego, CA, 1994.

- [5] Sendero Group, LLC. "What is GPS-Talk?" http://www.senderogroup.com/gpsflyer.htm

ACKNOWLEDGMENTS

The study was funded by a Community University Research Initiative (CURI) grant from the State of Utah.

Author Contact Information:

Vladimir Kulyukin, Ph.D.,

Department of Computer Science,

Utah State University,

4205 Old Main Hill,

Logan, UT 84322-4205,

Office Phone (435) 797-8163.

Email: vladimir.kulyukin@usu.edu