Seating and Mobility Script Concordance Test (SMSCT): External Structure Evidence

ABSTRACT

The Seating and Mobility Script Concordance Test (SMSCT), a performance-based measure intended for use with professionals who recommend seating and mobility devices to individuals with spinal cord injuries, is designed to assess clinicians by examining the organization of their knowledge, associations between items of their knowledge, and adequacy of their clinical decisions compared to expert consensus. Fifteen seating and mobility experts and 10 orthopedic experts participated in an exploration of SMSCT external validity. This study investigates the hypothesis that SMSCT scores can differentiate two groups with differing backgrounds and experience. Results showed that SMSCT intervention subscores distinguished between seating and mobility experts and orthopedic experts.

KEYWORDS

Clinical competence, rehabilitation, seating and mobility, educational measurement, validity evidence

BACKGROUND

At present, no validated tests exist to evaluate the impact of educational experiences or clinical practice on the ability to make specialized clinical decisions about seating and mobility (SM) needs. The Seating and Mobility Script Concordance Test (SMSCT) is a tool designed to assess clinicians by examining the organization of their knowledge, associations between items of their knowledge, and adequacy of their clinical decisions compared to expert consensus. The SMSCT is modeled after a similar Script Concordance Test developed in the field of medicine by Charlin et al. (1). The SMSCT consists of 67 items divided into two subtests: 33 assessment items (49%) and 34 intervention items (51%) (2) in the content domain of SM for individuals with spinal cord injuries (SCI).

Determining the validity of the SMSCT is the most fundamental consideration in evaluating its usefulness as a measure of clinical expertise for clinicians who work with individuals with SCI. Internal structure evidence explores the technical quality of test items, whereas external structure evidence validates how test scores are interpreted. Several phases were dedicated to the development and validation of the SMSCT (3;4). To date, construct validity of the SMSCT has been investigated (4) and internal structure evidence has been appraised (4).

This paper describes the collection and appraisal of external structure evidence. We hypothesized that SMSCT subscores can differentiate between SM experts and Orthopedic (Ortho) experts.

METHODS

To obtain external validity evidence by comparing groups of known qualities, 15 experts in SM and 10 Ortho physical therapy (PT) experts were recruited by invitation (Table 1). For the purpose of this work, expert SM clinicians were defined as individuals with a physical or occupational therapy license and a combination of SM service provision equating to full-time work for 5 years and completion of professional development comprising a minimum of 10 contact hours/year for a minimum of 5 years in the area of SM. The Ortho experts were chosen in order to recruit a homogeneous group with expertise related to the musculoskeletal spine but not specific to SM service provision. Independent samples t-tests were used to determine whether the SMSCT subscores (assessment and intervention) could differentiate between SM experts (n=15) and Ortho experts (n=10).

| Variable | SM Experts | Ortho Experts |
|---|---|---|
| Age | 47.9 (8.1) | 36.4 (5.4) |
| Gender | 3 males | 4 males |
| Years of clinical practice | 24.7 (8.2) | 9.2 (3.9) |
| SM service provision | 20.6 (5.8) | 0.1 (0) |
| Hours/week SM | 26.2 (12.9) | 0.0 (0) |
| SCI/year | 72.4 (83.7) | 0.2 (0.0) |
| Professional level degree | 11 entry level | 3 entry level |

Abbreviations: SD, standard deviation; SCI, spinal cord injury; Ortho, orthopedic; SM, seating and mobility; SCI/yr, number of spinal cord injured patients treated per year; prof. level degree, professional level degree; adv, advanced.

RESULTS

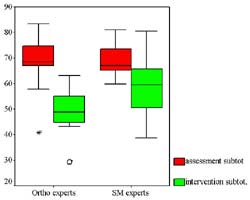

Results partially supported the hypothesis. No significant difference was found between groups on the assessment subtest (t = -0.3, p = 0.77). On the intervention subtest, however, subjects in the Ortho expert group (49.2 ± 9.2) scored lower than those in the SM expert group (58.6 ± 11.1), and the mean difference was significant (t = -2.2, p = 0.04). Figure 1 shows the distributions for both groups.
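The intervention subtest comparison can be checked from the summary statistics alone. The following is a minimal sketch, not the analysis code used in this study. It assumes that the ± values are standard deviations, that the test was a pooled-variance (Student's) independent samples t-test, and that the reported p value is two-tailed. The function names are hypothetical, and the p value is approximated by numerical integration of the t density rather than taken from a statistics package.

```python
import math


def pooled_t_test(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent samples t statistic from summary statistics.

    Assumes equal population variances (pooled variance estimate).
    Returns the t statistic and its degrees of freedom.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    std_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / std_error, df


def t_pdf(x, df):
    """Probability density of Student's t distribution."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p value using Simpson's rule on [0, |t|] (steps must be even)."""
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area_zero_to_t = total * h / 3
    return 1 - 2 * area_zero_to_t


# Intervention subtest: Ortho experts (n=10) versus SM experts (n=15),
# using the group means and assumed SDs reported in the text.
t_stat, df = pooled_t_test(49.2, 9.2, 10, 58.6, 11.1, 15)
p_value = two_tailed_p(t_stat, df)
print(f"t({df}) = {t_stat:.2f}, two-tailed p = {p_value:.3f}")
```

Under these assumptions the sketch gives a t statistic of about -2.2 on 23 degrees of freedom, in line with the reported values. The assessment subtest cannot be checked the same way because its group means and standard deviations are not reported here.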


DISCUSSION

External structure evidence measures the extent to which scores converge or diverge with known qualities in the manner expected, and it is critical to the use and interpretation of the test (5;6). We hypothesized that scores of SM experts would be higher than those of Ortho experts. A clinician may be an expert and know a great deal about assessment and intervention, but if that knowledge is not specific to SM for SCI, we would not expect the clinician to score as high on the SMSCT.

Results of the analysis partially supported our hypothesis. No difference between groups was detected on the assessment subtest. One explanation is that assessment knowledge is not unique to either expert group and may be common to different areas of therapy practice, which would prevent the assessment subscores from showing the hypothesized relationship with expert group. Another possible explanation is that the assessment items themselves may not reflect the unique knowledge and skills specific to SM for SCI. A significant difference was detected between groups on the intervention subtest, however, suggesting that these items were capable of detecting differences in intervention knowledge and providing preliminary evidence that SMSCT intervention subscale scores are valid in this application.

Construct underrepresentation refers to the degree to which a test fails to capture important aspects of the construct it purports to measure (5;6). It implies a narrowed meaning of test scores because the test does not adequately sample the domain of interest. It is quite possible that the SMSCT-40 did not reflect the variety of skills and knowledge specific to SM for SCI, resulting in content underrepresentation. The test specification may not have included the full spectrum of clinical vignettes, hypotheses, and common misconceptions representing the construct of interest, which would have negatively affected the results of this study.

Further work is required to improve the internal structure of the SMSCT. Revising and restoring the items that were removed because of poor functioning will increase content coverage so that it corresponds with the original test blueprint and defined test content (2). Once satisfactory internal structure of the SMSCT is attained, additional external structure evidence can be explored.

CONCLUSION

The SMSCT intervention subtest is able to differentiate between two groups with differing backgrounds and experience, yet the SMSCT was unable to differentiate the groups on the assessment subtest. This finding is informative, and several explanations for it are possible. Some problems have been identified in the technical quality of SMSCT items, indicating the need for additional revision and validation. Once satisfactory internal structure is attained, the ways in which test scores can be interpreted can be investigated further. This study provides an important beginning toward validating a measure of clinical expertise in the area of SM for SCI. A greater understanding of expertise and of different levels of practice will enable better professional preparation. Links between levels of practice and client outcomes may then be explored to relate clinical effectiveness to the value of professional practice. Positive results obtained on similar tests in the area of medicine are encouraging. Future work is planned to continue this line of research and to develop similar tests in different content areas.

ACKNOWLEDGEMENTS

This research is partially supported by the U.S. Department of Veterans Affairs, through the Center of Excellence for Wheelchairs and Related Technologies (F2181C) and the U.S. Dept. of Education through the University of Pittsburgh Model Center for Spinal Cord Injury (H133A011107).

CONTACT INFORMATION

Laura J. Cohen PT, PhD, ATP;

Human Engineering Research Laboratories (151R-1),

VA Pittsburgh Healthcare System,

7180 Highland Drive,

Pittsburgh, PA 15206;

TEL: (412) 365-4850;

FAX: (412) 365-4858;

E-MAIL: ljcst22@pitt.edu

REFERENCE LIST

1. Charlin B, Roy L, Brailovsky C, et al. The Script Concordance test: a tool to assess the reflective clinician. Teaching and Learning in Medicine 2000; 12(4):189-95.

2. Cohen LJ, Fitzgerald S, Lane S, Boninger M. Development of the Seating and Mobility Script Concordance Test (SMSCT) for spinal cord injury: Obtaining content validity evidence. Manuscript submitted for publication 2003.

3. Simpson R, editor. Development and reliability testing of a clinical rationale measure of seating and wheeled mobility prescription. 2002 Jun 27; RESNA Press, 2002.

4. Cohen LJ, Fitzgerald S, Lane S, Boninger M. Validation of the Seating and Mobility Script Concordance Test (SMSCT). Manuscript submitted for publication 2003.

5. American Educational Research Association, American Psychological Association, National Council on Measurement in Education. Standards for educational and psychological testing. Washington, DC: American Educational Research Association, 1999.

6. Nitko AJ. Educational assessment of students. 3rd ed. Upper Saddle River, NJ: Prentice-Hall, 2001.