29th Annual RESNA Conference Proceedings

A Robotic Shopping Assistant for the Blind

Vladimir Kulyukin, Chaitanya Gharpure

Computer Science Assistive Technology Laboratory

Department of Computer Science

Utah State University

Logan, UT 84322-4205

ABSTRACT

The Computer Science Assistive Technology Laboratory (CSATL) of Utah State University (USU) is currently developing RoboCart, a robotic shopping assistant for the blind. This paper describes a small set of initial experiments with RoboCart at Lee's MarketPlace, a supermarket in Logan, Utah.

KEYWORDS

Visual impairment, robot-assisted navigation, robot-assisted grocery shopping

BACKGROUND

There are 11.4 million visually impaired individuals living in the U.S. [1]. Grocery shopping is an activity that presents a barrier to independence for many visually impaired people who either do not go grocery shopping at all or rely on sighted guides, e.g., friends, spouses, and partners. Traditional navigation aids, such as guide dogs and white canes, are not adequate in such dynamic and complex environments as modern supermarkets. These aids cannot help their users with macro-navigation, which requires topological knowledge of the environment. Nor can they assist with carrying useful payloads.

In summer 2004, the Computer Science Assistive Technology Laboratory (CSATL) of the Department of Computer Science (CS) of Utah State University (USU) launched a project whose objective is to build a robotic shopping assistant for the visually impaired. In our previous publications, we examined several technical aspects of robot-assisted navigation for the blind, such as RFID-based localization, greedy free space selection, and topological knowledge representation [2, 3, 4]. In this paper, we briefly describe our robotic shopping assistant for the blind, called RoboCart, and present a small set of initial experiments with RoboCart in Lee's MarketPlace, a supermarket in Logan, Utah.

HYPOTHESIS

We hypothesized that repeated use of RoboCart by a visually impaired shopper reduces overall shopping time, which eventually reaches an asymptote.

METHOD

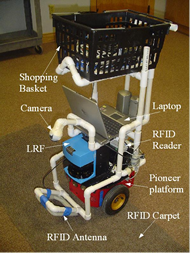

Figure 1: RoboCart

RoboCart is built on top of a Pioneer 2DX robotic platform from ActivMedia Corporation. RoboCart's navigation system resides in a PVC pipe structure mounted on top of the platform (see Figure 1). The navigation system consists of a Dell™ Ultralight X300 laptop connected to the platform's microcontroller, a SICK laser range finder, a TI-Series 2000 RFID reader from Texas Instruments, and a Logitech camera facing vertically down. The RFID reader is attached to a 200mm x 200mm antenna, which is mounted close to the floor at the front of the robot, as seen in Figure 1. The antenna reads the small RFID tags embedded under carpets placed at the beginning and end of grocery aisles. One such carpet is shown in Figure 2. The antenna is mounted at the front because the robot's metallic body and the magnets in its motors disabled the antenna when it was placed under the body of the robot.

Navigation in RoboCart is based on Kuipers' Spatial Semantic Hierarchy (SSH) [5]. The SSH is a model for representing spatial knowledge at five levels: sensory, control, causal, topological, and metric. The sensory level is the interface to the robot's sensory system. RoboCart's navigation combines Markov localization, which uses the laser range finder, with RFID-based localization, which uses the RFID carpets. RoboCart has a topological map of the store that records which product items are located in which aisles. The shopper interacts with the cart by browsing a voice-based product directory with a 10-key keypad attached to the right of the handle. When a product item is selected, RoboCart takes the shopper to the appropriate shelf.
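The role of the topological map can be illustrated with a minimal sketch. It is not RoboCart's actual implementation: the graph, the place names, the product directory, and the use of breadth-first search are all assumptions made for illustration. The places are modeled on aisle entrances and exits because that is where the RFID carpets are located.

```python
from collections import deque

# Hypothetical store topology: each place lists the places directly reachable from it.
STORE_GRAPH = {
    "dock": ["aisle_1_start"],
    "aisle_1_start": ["dock", "aisle_1_end", "aisle_2_start"],
    "aisle_1_end": ["aisle_1_start", "aisle_2_end"],
    "aisle_2_start": ["aisle_1_start", "aisle_2_end"],
    "aisle_2_end": ["aisle_1_end", "aisle_2_start", "register"],
    "register": ["aisle_2_end"],
}

# Hypothetical product directory: product name -> place nearest to its shelf.
PRODUCT_LOCATIONS = {"cereal": "aisle_1_end", "soup": "aisle_2_start"}


def shortest_route(graph, start, goal):
    """Breadth-first search; returns a list of places or None if unreachable."""
    if start == goal:
        return [start]
    previous = {start: None}
    queue = deque([start])
    while queue:
        place = queue.popleft()
        for neighbor in graph.get(place, []):
            if neighbor in previous:
                continue
            previous[neighbor] = place
            if neighbor == goal:
                # Walk the predecessor links back to the start.
                route = [goal]
                while previous[route[-1]] is not None:
                    route.append(previous[route[-1]])
                return route[::-1]
            queue.append(neighbor)
    return None


def route_to_product(product, current_place):
    """Look up the product's place and plan a route to it; None if unknown."""
    goal = PRODUCT_LOCATIONS.get(product)
    if goal is None:
        return None
    return shortest_route(STORE_GRAPH, current_place, goal)
```

In this sketch, a product selected on the keypad would be resolved to a place in the map, and the resulting sequence of places would be handed to the lower-level navigation routines.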

An IT2020 barcode reader from Hand Held Products Inc. is wirelessly coupled to the onboard laptop. When the shopper reaches the desired product in the aisle, he or she picks up the barcode reader and scans the barcode stickers on the edge of the shelf. The barcode reader beeps each time a barcode is scanned. If the scanned barcode is that of the search item, the user hears a synthesized message through a Bluetooth headphone. Figure 3 shows a visually impaired user scanning a barcode on the shelf with a wireless barcode reader.
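The scan feedback described above can be sketched as a small function. This is an illustrative assumption, not the system's code: the function name, the event strings, and the wording of the spoken message are hypothetical. The paper does not say what happens on a mismatch, so the sketch produces only the beep in that case.

```python
def feedback_for_scan(scanned_barcode, target_barcode, product_name):
    """Return the feedback events produced by one scan, in order."""
    events = ["beep"]  # the reader beeps on every successful scan
    if scanned_barcode.strip() == target_barcode.strip():
        # Hypothetical wording for the synthesized message.
        events.append(f"say: {product_name} found")
    return events
```

A shopper sweeping the reader along the shelf edge would hear a series of beeps, followed by the spoken confirmation once the target item's barcode is scanned.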

RESULTS

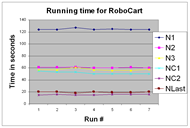

Figure 4: Navigation timings for RoboCart

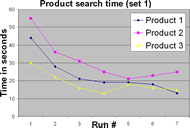

Preliminary experiments were run with one visually impaired shopper over a period of three days. A single shopping iteration consisted of the shopper picking up RoboCart from the docking area near the entrance, navigating to three pre-selected products, and navigating back to the docking area through the cash register. Each iteration was divided into ten tasks: navigating from the docking area to product 1 (N1), finding product 1 (P1), navigating from product 1 to product 2 (N2), finding product 2 (P2), navigating from product 2 to product 3 (N3), finding product 3 (P3), navigating from product 3 to the cash register entry (NC1), unloading the products (UL), navigating from the cash register entry to the cash register exit (NC2), and navigating from the cash register to the docking area (NLast).

Before the experiments, the shopper was given 15 minutes of training on using the barcode reader to scan barcodes. Seven shopping runs were completed for three different sets of products. Within each set, one product was chosen from the top shelf, one from the third shelf, and one from the bottom shelf. Completion times for each of the ten tasks were recorded by a human observer.

The graph in Figure 4 shows that the time taken by the different navigation tasks remained fairly constant over all runs. The graph in Figure 5 shows that the time to find a product decreases after a few iterations. The initial search times are longer because the shopper does not yet know the exact location of the product. Over time, the shopper learns where to look for the barcode of a specific product item, and the product search time decreases. For the shopper in the experiments, the product search time reached an asymptote at an average of 20 to 30 seconds.
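The ten-task breakdown lends itself to a simple per-run summary that separates navigation time from product search time. The sketch below is an assumption about how such observer-recorded times could be aggregated. The function names and the dictionary layout are hypothetical, and no measured values from the study are included.

```python
# Task labels follow the ten-task breakdown of one shopping iteration.
NAVIGATION_TASKS = ("N1", "N2", "N3", "NC1", "NC2", "NLast")
SEARCH_TASKS = ("P1", "P2", "P3")
UNLOAD_TASK = "UL"
ALL_TASKS = NAVIGATION_TASKS + SEARCH_TASKS + (UNLOAD_TASK,)


def summarize_run(task_seconds):
    """Group one run's task times (label -> seconds) into category totals."""
    missing = [task for task in ALL_TASKS if task not in task_seconds]
    if missing:
        raise ValueError(f"missing task times: {missing}")
    navigation = sum(task_seconds[task] for task in NAVIGATION_TASKS)
    search = sum(task_seconds[task] for task in SEARCH_TASKS)
    unload = task_seconds[UNLOAD_TASK]
    return {
        "navigation": navigation,
        "search": search,
        "unload": unload,
        "total": navigation + search + unload,
    }


def mean_search_time(task_seconds):
    """Average time per product search (P1, P2, P3) within one run."""
    return sum(task_seconds[task] for task in SEARCH_TASKS) / len(SEARCH_TASKS)
```

Applying `mean_search_time` to each successive run gives the kind of per-run series that a product search timing plot would show.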

DISCUSSION

Figure 5: Product search timings

This single-subject study gives the investigators hope that visually impaired shoppers can be trained to use a barcode reader in a relatively short period of time. The experiments conducted with one visually impaired shopper indicate that the overall shopping time decreases with the number of shopping iterations and eventually reaches an asymptote.

REFERENCES

1. LaPlante, M. P. and Carlson, D. (2000). Disability in the United States: Prevalence and Causes. Washington, DC: U.S. Department of Education.

2. Kulyukin, V., Gharpure, C., De Graw, N., Nicholson, J., and Pavithran, S. (2004). A Robotic Wayfinding System for the Visually Impaired. In Proceedings of the Innovative Applications of Artificial Intelligence Conference (IAAI), pp. 864-869. AAAI, July 2004.

3. Kulyukin, V., Gharpure, C., Nicholson, J., and Pavithran, S. (2004). RFID in Robot-Assisted Indoor Navigation for the Visually Impaired. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems (IROS). IEEE/RSJ, October 2004.

4. Kulyukin, V., Gharpure, C., and Nicholson, J. (2005). RoboCart: Toward Robot-Assisted Navigation of Grocery Stores by the Visually Impaired. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE/RSJ, July 2005.

5. Kuipers, B. (2000). The Spatial Semantic Hierarchy. Artificial Intelligence, 119:191-233.

ACKNOWLEDGMENTS

The study was funded, in part, by two Community University Research Initiative (CURI) grants from the State of Utah (2004-05 and 2005-06) and NSF Grant IIS-0346880. The authors would like to thank Sachin Pavithran, a visually impaired training and development specialist at the USU Center for Persons with Disabilities, for his feedback on the localization experiments.

Author Contact Information:

Vladimir Kulyukin, Ph.D.

Computer Science Assistive Technology Laboratory

Department of Computer Science

Utah State University

4205 Old Main Hill

Logan, UT 84322-4205

Office Phone (435) 797-8163

EMAIL: vladimir.kulyukin@usu.edu

Chaitanya Gharpure

Computer Science Assistive Technology Laboratory

Department of Computer Science

Utah State University

4205 Old Main Hill

Logan, UT 84322-4205

Office Phone (435) 512-4560

EMAIL: cpg@cc.usu.edu
