Md Osman Gani1, Sheikh Iqbal Ahamed1, Samantha Ann Davis2, Roger O. Smith2

1Ubicomp Lab, Marquette University, Milwaukee, WI

2R2D2 Center, University of Wisconsin, Milwaukee, WI

Abstract

Human activity recognition is an interdisciplinary research area. It has importance across fields such as rehabilitation engineering, assistive technology, health outcomes, pervasive computing, machine learning, artificial intelligence, human computer interaction, medicine, social networking, and the social sciences. Activities are performed indoors and outdoors. We can classify these activities into two classes: simple full body motor activities such as walking, running, and sitting, and complex functional activities such as cooking, washing dishes, driving, watching TV, and reading a book. In this paper, we describe an approach to recognize complex activity using solely a smartphone with common sensors, our software, and standard Wi-Fi infrastructure. We also describe our prototype data collection components as the foundation of this project.

Introduction

In our everyday life, we perform countless activities that occur indoors and outdoors for various purposes [1]. We can categorize these activities into two classes: simple full body motor activities and complex functional activities. Simple activities include walking, running, sitting, lying, climbing upstairs or downstairs, and jumping. Complex activities include cooking, washing dishes, driving, watching TV, reading a book, playing tennis, and swimming. It is easy for humans to watch, detect, and differentiate one activity from another, but building an automatic system that accurately identifies a particular activity from the array of human activity is extremely challenging.

Performing Activities of Daily Living (ADLs) and Instrumental Activities of Daily Living (IADLs) is an important part of living a healthy, independent life [13], [14]. These activities cover a wide range, such as self-care, meal preparation, bill paying, and entertaining guests. Virtually every rehabilitation therapist and program focuses on these as outcome goals. The ability to perform ADLs and IADLs is an important indicator both for those recovering from a newly acquired disability and for those at risk for decline through chronic physical or mental impairments (e.g., ALS, MS, Parkinson’s, Alzheimer’s), and it may act as an early indicator of disease or illness [15]. Disruptions in the routine of ADLs can indicate either a lack of rehabilitation success or a significant decline in function, and they act as an important indicator of a return to or decrease in quality of life (QoL) [16], [17]. These disruptions in routine are often used as indications that help diagnose, treat, and document the outcomes of services for people with a wide variety of disabilities, including psychological impairments such as depression and dementia [18], [19], [20]. Additionally, older adults may perform activities despite decrements in functional capacity. However, a threshold of declined functional performance may be reached, at which time assistance may be necessary [21].

In recent years there has been rapid growth in the number of smartphone users. Total shipments of smartphones in 2012 were 712.6 million units, an annual growth of 44.1% from 2011 [2]. Most smartphones come with several wireless adapters and a variety of useful sensors, such as an accelerometer, gyroscope, orientation sensor, magnetometer, barometer, GPS, Wi-Fi, fingerprint reader, and NFC. A smartphone-based system therefore eliminates the cost of additional devices and sensors.

Related Work

Extensive research has focused on automated machine recognition of human activity [1], [3], [4], [6], [7], [11]. Computer vision has been one approach [3], [4], [5]. Vision-based systems automatically recognize activities performed by one or more persons from a sequence of images. This type of image/video activity recognition is one of the promising applications of computer vision. Other research has used wearable kinematic sensors, such as accelerometers, gyroscopes, and RFID, placed on different parts of the body [11], [12].

Our use of technology leverages the increasingly ubiquitous smartphone. Compared to computer vision or wearable kinematic sensors, smartphones offer many advantages: they do not require any additional infrastructure, they are unobtrusive, and they have good (and rapidly expanding) computation and communication power [9], [10], [11], [12]. Most of the approaches using smartphones have tried to recognize simple activities such as walking, running, standing, walking up stairs, walking down stairs, sitting, and climbing. A few researchers have begun to tackle the recognition of more complex functional activities. The platforms used by researchers include Android, iOS, Windows, Symbian, and Blackberry.

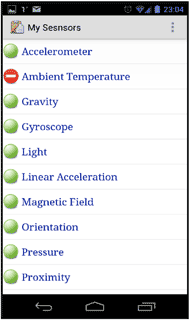

Figure 4: The main window lists available sensors with a green icon and unavailable sensors with a red icon.

Importantly, the literature has examined the use of time and location data to predict activity. Hagerstrand was early to propose that human activities are constrained not only by location but also by time, which he called “time geography” [22]. He also recognized individual differences and emphasized the importance of the individual as the unit of study in human activity [23]. As humans, we are creatures of habit and tend to follow similar routines based on various cycles. Such cycles include circadian rhythms, weekly schedules, seasonal events, and annual holidays. Predicting what a person is doing from their individual time schedule is quite plausible when both location and time are known. Finer granularity in the time/activity linked data increases the confidence level of the deduction. This method of location and time based activity deduction is currently used in such diverse fields as environmental health [24], wildlife monitoring [25], and traffic systems analysis [26].

As mentioned, motion related sensors such as accelerometers and gyroscopes have also been widely used as wearable sensors in activity recognition systems. Both time and motion data are easily collected and accessed in smartphones. They become a powerful combination in a smartphone, where sensors are integrated, software can be inexpensive, and no extra devices are required.

Our Approach

In our overall approach, we use the smartphone to collect and then combine simple body motor activity, time, and location variables to predict the complex functional activity. With smartphone data collection, activities can be performed indoors or outdoors. Outdoors, we can use the Global Positioning System (GPS), which is available in nearly every smartphone, for localization. Localization is more challenging indoors. To recognize indoor complex activity, we leverage Wi-Fi signals. Wi-Fi offers only coarse resolution as a location system. However, using a smartphone (Android or iOS) and wireless routers, we can identify the location of the user inside a building to a coarse degree and add this to the activity identification algorithm.

To predict the complex activity using a smartphone, we have divided our work into three different modules.

- Simple Body Motion Detection

- Indoor Localization

- Complex Functional Activity Detection

Complex activities are often composed of multiple simple activities, so identifying and documenting simple body motions can help in identifying more complex activities. Our first target was therefore to classify and identify simple activities using smartphones. We also know that human activity is largely correlated with location. For example, washing dishes occurs at the kitchen sink and grocery shopping occurs at the grocery store. Each module of this system is described briefly in the following subsections.

Recognition of Simple Activities

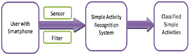

We will use the kinematic sensors of a smartphone, such as the accelerometer, gyroscope, orientation sensor, or magnetometer, to classify simple activities such as walking, standing, running, jogging, and walking upstairs and downstairs. Based on our previous experience, we will filter the sensor data to reduce noise. The filtered data will then be used to train the system, which we will later use to classify simple activities. The architecture of the proposed system is shown in Figure 1.
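The filtering and feature steps mentioned above can be illustrated with a minimal Python sketch. The paper does not specify the filter or the features, so the exponential low-pass filter, the smoothing factor `alpha`, and the per-window mean and standard deviation are all assumptions chosen for illustration only.

```python
import math
from statistics import mean, pstdev


def low_pass(samples, alpha=0.2):
    """Exponential smoothing: blend each new sample with the previous output.

    alpha is an assumed smoothing factor (0 < alpha <= 1), not a value from the paper.
    """
    filtered = []
    prev = None
    for s in samples:
        prev = s if prev is None else alpha * s + (1 - alpha) * prev
        filtered.append(prev)
    return filtered


def magnitude(x, y, z):
    """Orientation-independent magnitude of a 3-axis accelerometer reading."""
    return math.sqrt(x * x + y * y + z * z)


def window_features(values, size):
    """Split values into non-overlapping windows and return (mean, std) per full window."""
    features = []
    for start in range(0, len(values) - size + 1, size):
        window = values[start:start + size]
        features.append((mean(window), pstdev(window)))
    return features


# Hypothetical readings (x, y, z) in m/s^2; real data would come from the phone.
readings = [(0.0, 0.0, 9.8), (0.3, 0.1, 9.9), (0.0, 0.2, 9.7), (0.1, 0.0, 9.8)]
smoothed = low_pass([magnitude(*r) for r in readings])
features = window_features(smoothed, size=2)
```

The resulting feature tuples could then be passed to any standard classifier for training; which classifier to use is left open here, as it is in the text.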

Indoor Localization

We have created an intelligent ubiquitous system that detects the location of the user both indoors and outdoors with fair accuracy using Wi-Fi [27]. For localization of a mobile node with a smartphone, we achieved an accuracy of better than 2 meters with an Android phone and better than 2.5 meters with an iPhone, both indoors and outdoors. We achieved this localization at low cost with the existing Wi-Fi infrastructure. To localize a smartphone with a wireless router, we achieved 80% accuracy for 5 out of 6 different locations with the existing Marquette University wireless routers. We achieved lower accuracy (30% to 40%) for mobile nodes or WiFly routers.
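As a rough illustration of how Wi-Fi signal strength can yield a coarse indoor location, the following Python sketch matches a scan against stored per-room RSSI fingerprints and returns the nearest one. This is not the method of [27]; the nearest-fingerprint technique, the access point names, the RSSI values, and the `MISSING_RSSI` floor are all hypothetical.

```python
import math

# Hypothetical fingerprints: mean RSSI (dBm) per access point, recorded in known rooms.
FINGERPRINTS = {
    "kitchen": {"ap1": -45, "ap2": -70, "ap3": -80},
    "bedroom": {"ap1": -75, "ap2": -50, "ap3": -65},
    "living room": {"ap1": -60, "ap2": -65, "ap3": -48},
}

# Assumed value used when an access point is not heard in a scan.
MISSING_RSSI = -100


def rssi_distance(scan, fingerprint):
    """Euclidean distance between two RSSI vectors over the union of access points."""
    access_points = set(scan) | set(fingerprint)
    return math.sqrt(sum(
        (scan.get(ap, MISSING_RSSI) - fingerprint.get(ap, MISSING_RSSI)) ** 2
        for ap in access_points
    ))


def locate(scan, fingerprints=FINGERPRINTS):
    """Return the room whose stored fingerprint is closest to the current scan."""
    return min(fingerprints, key=lambda room: rssi_distance(scan, fingerprints[room]))


# A scan that closely resembles the kitchen fingerprint.
room = locate({"ap1": -47, "ap2": -72, "ap3": -79})
```

Room-level output of this kind is the coarse location signal that the activity recognition stage needs, which is why meter-level precision is not strictly required here.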

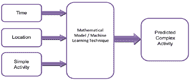

Recognition of Complex Activity

To pilot the identification of complex functional activities, we used time, location, and simple activity data to predict the complex activity. The architecture of the proposed approach is shown in Figure 3.
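One simple way to combine the three inputs is a frequency lookup: count which complex activity was observed most often for each (time slot, room, simple activity) combination and predict the most frequent one. The Python sketch below is an assumption made for illustration, not the prediction algorithm of this project; the time slot boundaries, the simple activity labels, and the training records are hypothetical.

```python
from collections import Counter, defaultdict


def time_slot(hhmm):
    """Map a 24-hour 'H:MM' time to a coarse slot (boundaries are assumed)."""
    hour = int(hhmm.split(":")[0])
    if hour < 12:
        return "morning"
    if hour < 18:
        return "afternoon"
    return "evening"


class ComplexActivityPredictor:
    """Majority vote over past observations that share the same context."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, records):
        # Each record: (time, room, simple activity, complex activity).
        for time, room, motion, activity in records:
            self.counts[(time_slot(time), room, motion)][activity] += 1

    def predict(self, time, room, motion):
        seen = self.counts.get((time_slot(time), room, motion))
        if not seen:
            return None  # context never observed
        return seen.most_common(1)[0][0]


# Hypothetical training records; the simple activity labels are invented.
predictor = ComplexActivityPredictor()
predictor.train([
    ("7:10", "Kitchen", "standing", "Food preparation"),
    ("7:15", "Kitchen", "standing", "Food preparation"),
    ("8:03", "Kitchen", "standing", "Clean dishes"),
    ("13:35", "Living room", "sitting", "Watching TV"),
])
guess = predictor.predict("7:20", "Kitchen", "standing")
```

A lookup of this kind cannot generalize to unseen contexts, which is one reason a learned model would be preferable once enough data are available.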

Data collection

We collected data from 3 persons (2 men and 1 woman). The data include the time of the activity, the location where the activity was performed, the duration of the activity, and the activity itself. We collected data from two of the subjects for 21 days and from the other subject for 14 days. The data for a single day are shown in Table I. The first column indicates the time when the activity was performed. The second column stores location data in two parts: the room inside the apartment or house, and the more precise location inside the room with respect to object activity anchors such as a bed, sofa, or chair. The third column lists the activity that was performed. The fourth column stores the duration of the performed activity.
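The record layout described above can be sketched as a small Python data structure. The class and function names are hypothetical; the three sample rows are taken from Table I. The sketch also shows a consistency check that the data permit: each activity's start time plus its duration should equal the next activity's start time.

```python
from dataclasses import dataclass


def to_minutes(hhmm):
    """Convert an 'H:MM' string to a number of minutes."""
    hours, minutes = hhmm.strip().split(":")
    return int(hours) * 60 + int(minutes)


@dataclass
class ActivityRecord:
    time: str      # start time, 24-hour 'H:MM'
    room: str      # room inside the apartment or house
    anchor: str    # object anchor within the room
    activity: str  # performed activity
    duration: str  # 'H:MM'

    def end_minutes(self):
        """Minute of the day at which the activity ends."""
        return to_minutes(self.time) + to_minutes(self.duration)


def is_contiguous(records):
    """True if every record ends exactly when the next one starts."""
    return all(
        prev.end_minutes() == to_minutes(nxt.time)
        for prev, nxt in zip(records, records[1:])
    )


# First three rows of Table I.
day = [
    ActivityRecord("7:00", "Bedroom", "Bed", "Wake up", "0:03"),
    ActivityRecord("7:03", "Bathroom", "Toilet", "Using the toilet", "0:07"),
    ActivityRecord("7:10", "Kitchen", "Throughout kitchen", "Food preparation", "0:19"),
]
gap_free = is_contiguous(day)
```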

Table I: Sample activity data for a single day.

| Time | Location: Room | Location: Anchor | Activity | Duration (hour:minute) |
|---|---|---|---|---|
| 7:00 | Bedroom | Bed | Wake up | 0:03 |
| 7:03 | Bathroom | Toilet | Using the toilet | 0:07 |
| 7:10 | Kitchen | Throughout kitchen | Food preparation | 0:19 |
| 7:29 | Porch | Chair | Eating | 0:34 |
| 8:03 | Kitchen | Sink | Clean dishes | 0:06 |
| 8:09 | Bathroom | Sink | Personal cares/hygiene | 0:23 |
| 8:32 | Bedroom | In front of dresser/closet | Changing clothes | 0:17 |
| 8:49 | Front door | Front door | Leaving | 3:31 |
| 12:20 | Front door | Front door | Returning home | 0:01 |
| 12:21 | Kitchen | In front of refrigerator | Food preparation | 0:19 |
| 12:40 | Dining room | Table | Eating | 0:20 |
| 13:00 | Living room | Chair | Resting | 0:30 |
| 13:30 | Bathroom | Toilet | Using the toilet | 0:05 |
| 13:35 | Living room | Chair | Watching TV | 0:45 |
| 14:20 | Kitchen | Refrigerator | Getting a snack | 0:03 |
| 14:23 | Living room | Chair | Watching TV | 1:07 |

Data Collection Tool (UbiSen)

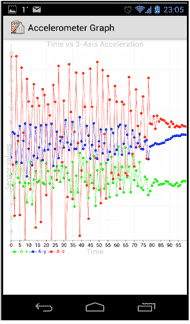

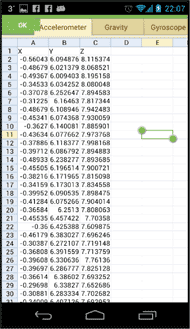

We developed a prototype tool named UbiSen (Ubicomp Lab Sensor Application) for Android to collect sensor data for simple activity recognition, which in turn helps us predict complex activity. It collects data from all available smartphone sensors simultaneously. We use threading to parallelize the operation so that we obtain more precise data at each timestamp. We plan to use this tool to generate a data set for different activities in a controlled environment. The tool is generic and can be used to collect data from a specific set of sensors at a specified frequency. We also plan to add statistical functions that enable the user to perform statistical operations and generate meaningful reports from the collected sensor data. The functionality of the tool is shown in Figures 4-6.
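The idea of sampling several sensors in parallel, each on its own thread with its own timestamps, can be sketched in Python as follows. This is not UbiSen code, which runs on Android; the sensor read functions are simulated stand-ins, and the function names, sample count, and sampling interval are assumptions.

```python
import threading
import time


def collect(name, read_fn, n_samples, interval_s, out, lock):
    """Sample one sensor at a fixed interval and append timestamped readings."""
    for _ in range(n_samples):
        sample = (time.monotonic(), name, read_fn())
        with lock:  # protect the shared list across threads
            out.append(sample)
        time.sleep(interval_s)


def collect_all(sensors, n_samples=5, interval_s=0.001):
    """Run one collector thread per sensor and return samples ordered by timestamp."""
    out = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=collect, args=(name, fn, n_samples, interval_s, out, lock))
        for name, fn in sensors.items()
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(out, key=lambda sample: sample[0])


# Simulated sensors returning constant readings; real ones would query hardware.
sensors = {
    "accelerometer": lambda: (0.0, 0.0, 9.8),
    "gyroscope": lambda: (0.0, 0.0, 0.0),
}
samples = collect_all(sensors, n_samples=3)
```

Because each sensor has its own thread, a slow read on one sensor does not delay the timestamps recorded for the others.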

Conclusion

To reach the ultimate goal of complex activity recognition, we have developed a tool for collecting and reporting sensor data from a smartphone. We have also collected a data set for complex activity. In addition, we developed an indoor localization system using Wi-Fi and a smartphone. These key R&D steps have helped us advance toward our goal of designing a complex functional activity recognition system on smartphones. Future work will aggregate and integrate these data sets using machine learning algorithms and test the accuracy of complex functional activity prediction that uses just the mobile phone and existing standard Wi-Fi infrastructure.

References

- Fox Dieter, Location-Based Activity Recognition, Advances in Artificial Intelligence 2007, Volume 4667/2007, 51, DOI: 10.1007/978-3-540-74565-5_6

- IDC Worldwide Mobile Phone Tracker, Jan 24, 2013

- T. B. Moeslund, A. Hilton, and V. Kruger, “A survey of advances in vision-based human motion capture and analysis,” Computer Vision and Image Understanding, vol. 104, no. 2, pp. 90–126, 2006.

- J. K. Aggarwal and Q. Cai, “Human motion analysis: A review,” Computer Vision and Image Understanding, vol. 73, no. 3, pp. 428–440, 1999.

- G. Johansson, “Visual perception of biological motion and a model for its analysis,” Perception and Psychophysics, vol. 14, no. 2, pp. 201–211, 1973.

- J. R. Kwapisz, G. M. Weiss, and S. A. Moore, “Activity recognition using cell phone accelerometers”. In Proceedings of the Fourth International Workshop on Knowledge Discovery from Sensor Data, Washington DC, pp10-18, 2010.

- J. Yang, “Toward physical activity diary: Motion recognition using simple acceleration features with mobile phones”, In First International Workshop on Interactive Multimedia for Consumer Electronics at ACM Multimedia, 2009.

- T. Brezmes, J. L. Gorricho, J. Cotrina, “Activity Recognition from accelerometer data on mobile phones”, In IWANN Proceedings of the 10th International Conference on Artificial Neural Networks, pp.796-799, 2009.

- G. Hache, E. D. Lemaire, N. Baddour, "Mobility change-of-state detection using a smartphone-based approach," In Proceedings of International Workshop on Medical Measurements and Applications, pp.43-46, 2010.

- S. Zhang, P. J. McCullagh, C. D. Nugent, and H. Zheng, “Activity Monitoring Using a Smart Phone’s Accelerometer with Hierarchical Classification”. In Proceedings of the 6th International Conference on Intelligent Environments, pp. 158-163, 2010.

- E. M. Tapia, S. S. Intille, and K. Larson, “Activity Recognition in the Home Setting Using Simple and Ubiquitous Sensors,” Proceedings of PERVASIVE, pp. 158-175, 2004. L. Bao, S. S. Intille, “Activity Recognition from User-Annotated Acceleration Data,” Pervasive Computing, LNCS 3001, pp. 1-17, 2004.

- N. Ravi, N. Dandekar, P. Mysore, and M. L. Littman, “Activity Recognition from Accelerometer Data,” Proceeding of the National Conference on Artificial Intelligence, vol. 20, PART 3, pp. 1541-1546, 2005.

- Levasseur M, Desrosiers J, St-Cyr Tribble D: Do quality of life, participation and environment of older adults differ according to level of activity? Health and Quality of Life Outcomes 2008; 6(1): 30.

- Molzahn A, Skevington SM, Kalfoss MSM, K: The importance of facets of quality of life to older adults: an international investigation. Quality of Life Research 2010; 19: 293-298.

- Bonder BR, Bello-Haas VD: Functional Performance in Older Adults, Vol. 3: F.A. Davis Company, 2009.

- Vest MT, Murphy TE, Araujo KLB, Pisani MA: Disability in activities of daily living, depression, and quality of life among older medical ICU survivors: a prospective cohort study. Health and Quality of Life Outcomes 2011; 9(9): 9-18.

- Johnston MV, Mikolos CS: Activity-related quality of life in rehabilitation and traumatic brain injury. Archives of Physical Medicine and Rehabilitation 2002; 83(12): s26-s38.

- Bowling A, Seetai S, Morris R, Ebrahim S: Quality of Life among older people with poor functioning. The influence of perceived control over life. Age Ageing 2007; 36(3): 310-315.

- Friedman B, Lyness J, Delavan R, Li C, Barker W: Major Depression and Disability in Older Primary Care Patients with Heart Failure. Journals of Geriatric Psychiatry and Neurology 2008; 21(2): 111-122.

- Marquardt G, et al.: Association of the Spatial Layout of the Home and ADL Abilities among older adults with Dementia. American Journal of Alzheimer's Disease & Other Dementias 2011; 26(1): 51-57.

- Young A: Exercise physiology in geriatric practice. Acta Medica Scandinavica 1986; 711: 227-232.

- Hagerstrand T: What about people in regional science? Papers of the Regional Science Association 1970; 24: 7-21.

- Corbett J: Torsten Hagerstrand: Time Geography. vol 2011, 2001.

- Elgethun K, Fenske RA, Yost MG, Palcisko GJ: Time-Location Analysis of Exposure Assessment Studies of Children Using a Novel Global Positioning System Instrument. Environmental Health Perspectives 2003; 111(1).

- Gelatt TS, Sniff DB, Estes JA: Activity patterns and time budgets of the declining sea otter population at Amchitka Island. Journal of Wildlife Management 2002; 66(1): 29-39.

- Miller HJ: Activities in Space and Time: Pergamon/Elsevier Science Handbook of Transport: Transport Geography and Spatial Systems.

- Md Osman Gani, Casey O’Brien, Sheikh I Ahamed, Roger O Smith, RSSI based Indoor Localization for Smartphone using Fixed and Mobile Wireless Node, Proceedings of the 2013 IEEE 37th Annual Computer Software and Applications Conference, 110-117, Kyoto, Japan, July 2013.
