A Stimulus-Response Model Of Therapist-Patient Interactions In Task-Oriented Stroke Therapy Can Guide Robot-Patient Interactions

Michelle Johnson, PhD1,2,3, Mayumi Mohan, MS2,3, Rochelle Mendonca OTR, PhD4.

1Physical Medicine and Rehabilitation, 2Rehabilitation Robotics Lab, 3GRASP, University of Pennsylvania, Philadelphia, PA

4Occupational Therapy, Temple University, Philadelphia, PA

ABSTRACT

Current robot-patient interactions do not accurately model therapist-patient interactions in task-oriented stroke therapy. We analyzed patient-therapist interactions in task-oriented stroke therapy captured in 8 videos. We developed a model of the interaction between a patient and a therapist that can be overlaid on a stimulus-response paradigm, in which the therapist and the patient take on a set of acting states or roles and are motivated to move from one role to another when certain physical or verbal stimuli or cues are sensed and received. We examined how the model varies across 8 activities of daily living tasks and mapped it to a possible model for robot-patient interaction.

INTRODUCTION

By 2030, about 10.8 million older adults will be living with disability due to stroke. Providing good quality of life for these older adults requires maximizing independent functioning after a stroke. Robots can play a unique role in supporting independent living and stroke rehabilitation in non-traditional settings while retraining for physical function (Costandi, 2014; Loureiro, 2011; Matarić, 2007). Robots can act as social agents, demonstrate a task, invite patients to engage in therapeutic exercise, guide the exercise activity with behaviors designed to make exercise more enjoyable, and monitor the patients’ movements (Brooks, 2012; Fasola, 2012). This evidence suggests it is appropriate to consider robots as an advanced tool to be used under the therapist's direction, one that can implement repetitive and labor-intensive therapies (Mehrholz, 2012). Ideally, we envision scenarios where the therapist shows the task to the robot and the robot performs the task with the patient while the therapist oversees the therapy.

We explore human-human interaction to better model human-robot interaction for task-oriented stroke therapy. In stroke therapy, most rehabilitation robots are either fully hands-off or fully hands-on, and most do not move easily between contact and non-contact with the patient as therapists do (Sawers, 2014). Thus, we are interested in developing robots that can dynamically initiate and end contact with a patient by themselves. To do this, a robot needs an in-depth understanding of the interactions seen in therapist-patient dyads. There is also a need to better understand which therapist behaviors are critical to motor relearning. Physical behaviors usually precede or are followed by verbal behaviors, and it is suggested that combinations of behaviors form the basis for eliciting motor relearning after stroke.

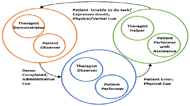

In this paper, we analyze therapist-patient interactions in task-oriented stroke therapy captured in 8 videos. We assumed that the interaction between a patient and a therapist can be overlaid on a stimulus-response paradigm, in which the therapist and patient take on a set of acting states or roles and are motivated to move from one role to another when certain physical or verbal stimuli or cues are sensed and received. We develop this model of therapist-patient interactions and examine how the model varies across 8 activities of daily living tasks. We identify key cues resulting in role changes and determine whether the roles of observer, demonstrator and helper are key roles that a therapist cycles through during a stroke therapy encounter for any task. Finally, we present a high-level model for robot-patient interactions in task-oriented therapy.

MODELING PATIENT-THERAPIST INTERACTIONS IN OCCUPATIONAL THERAPY AFTER STROKE

A typical method in Artificial Intelligence is to make robots model human actions using stimulus-response methods implemented as state-based control. In a stimulus-response paradigm (Arkin, 1998), a cue or stimulus sensed by the system produces a response, which may entail changing from or remaining in a given state. Behavior-based robotics, a complex solution for modeling social robots (Matarić, 1999), is a form of "functional modeling which attempts to synthesize biologically inspired behavior." Our goal is to overlay this model on an upper limb therapy session for patients with stroke.

A scenario may flow as follows: the therapist is in a demonstrator role when he/she is explaining the task or clarifying any task-related queries that the patient may have. The patient remains in an observer role during that period. Once the demonstration is completed, the patient moves into the performer role and begins to perform the task while the therapist moves into an observer role. If a physical or verbal cue is received, for example when the patient makes an error in doing the task, the therapist moves into a helper role and enables the patient to perform the task with support. A change in role occurs due to a physical or verbal cue.
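As an illustration only, the scenario above can be sketched as a small state machine in Python. The role names come from the text and the cue strings are borrowed from the cue table; the pairing of each cue with a particular role change, the `Releases` cue, and the names `Role`, `TRANSITIONS`, `step` and `run` are assumptions made for this sketch, not coded results.

```python
from enum import Enum


class Role(Enum):
    DEMONSTRATOR = "demonstrator"
    OBSERVER = "observer"
    HELPER = "helper"
    PERFORMER = "performer"


# (therapist role, cue) -> (next therapist role, next patient role).
# Which cue triggers which change is an illustrative assumption.
# "Releases" is a hypothetical cue that does not appear in the cue table.
TRANSITIONS = {
    (Role.DEMONSTRATOR, "End Demonstration"): (Role.OBSERVER, Role.PERFORMER),
    (Role.OBSERVER, "Does not reach"): (Role.HELPER, Role.PERFORMER),
    (Role.OBSERVER, "Start Demonstration"): (Role.DEMONSTRATOR, Role.OBSERVER),
    (Role.HELPER, "Releases"): (Role.OBSERVER, Role.PERFORMER),
}


def step(therapist, patient, cue):
    """Return the (therapist, patient) roles after a cue is sensed.

    A cue with no matching entry leaves both roles unchanged, since a
    response may also be to remain in the current state.
    """
    return TRANSITIONS.get((therapist, cue), (therapist, patient))


def run(cues, therapist=Role.DEMONSTRATOR, patient=Role.OBSERVER):
    """Apply a sequence of cues and return the full role history."""
    history = [(therapist, patient)]
    for cue in cues:
        therapist, patient = step(therapist, patient, cue)
        history.append((therapist, patient))
    return history
```

Calling `run(["End Demonstration", "Does not reach"])` walks the therapist from demonstrator to observer to helper, while the patient moves from observer to performer.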

| PHYSICAL CUES | | VERBAL CUES | |
|---|---|---|---|
| Therapist | Patient | Therapist | Patient |
| Reaches | Does not reach | Supports/ Expresses Agreement Understanding or Willingness | Supports |
| Grips | Does not grip | Requests/ Asks | Requests/ Asks |
| Moves | Does not coordinate | Commands | Complains/ Disagrees |
| Lifts | Does not move | States | Describes/ Explains/ States |
| Transports | Does not lift | Corrects | |
| Stabilizes | Does not transport | Stops/ Prevents | |
| Guides | Does not stabilize | ADMIN CUES | |
| Points | Does not initiate | Therapist | Patient |
| Touches | Points | Start Demonstration | Begin Task |
| Nods | | End Demonstration | End Task |
| Manipulates | | | |

METHODS

Eight video examples of occupational therapy sessions were used, covering the following Activities of Daily Living: shoe shining, cleaning dishes, making iced tea, making a sandwich, arranging flowers, washing a car, sweeping a sidewalk, and shaving. The videos were obtained from the International Clinical Educators Inc. Video Library (ICE, 2017) with permission. Using the model presented in Fig. 1 and the cues identified in Table 1, two therapists independently coded the set of 8 videos using the Multimedia Video Task Analysis (MVTA) software (Radwin, 2005). Each coder assigned a role to the patient and the therapist and identified the timing and type of cue that acted as a stimulus for a change in role. Fig. 3 shows a sample video being coded using MVTA.
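To make the role coding concrete, the following Python sketch shows one way the share of time and the number of occurrences per role could be derived from coded segments. The `(start, end, role)` tuple layout and the function names are assumptions made for illustration; they do not reflect the MVTA export format or the analysis scripts used in this study.

```python
from collections import Counter, defaultdict


def role_time_percentages(segments):
    """Return the percentage of coded time spent in each role.

    `segments` is an iterable of (start_s, end_s, role) tuples, a
    hypothetical layout for one coder's annotations of one video.
    """
    durations = defaultdict(float)
    for start, end, role in segments:
        if end < start:
            raise ValueError("segment ends before it starts")
        durations[role] += end - start
    total = sum(durations.values())
    if total == 0:
        return {}
    return {role: 100.0 * d / total for role, d in durations.items()}


def role_frequencies(segments):
    """Count how many coded segments were assigned to each role."""
    return Counter(role for _, _, role in segments)


# Made-up example data, not taken from the coded videos.
example = [
    (0.0, 10.0, "demonstrator"),
    (10.0, 50.0, "observer"),
    (50.0, 90.0, "helper"),
    (90.0, 100.0, "observer"),
]
percentages = role_time_percentages(example)
frequencies = role_frequencies(example)
```

Keeping duration and frequency as separate measures mirrors the way both are reported per role and per cue.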

Cronbach's alpha (Cronbach, 1951) was used to determine coder agreement for the duration and frequency of physical cues, verbal cues and roles. It is important to note that the cues coded were those that caused role changes in the therapist. We examined the following hypotheses to determine whether these roles and cues were present across these tasks:

- The therapist will spend time in all roles.

- Role changes will be caused by a cue initiated by either the patient or therapist.
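Coder agreement in this section relies on Cronbach's alpha (Cronbach, 1951). As a minimal sketch, the standard formula, alpha = k/(k-1) * (1 - sum of per-item variances / variance of the summed scores), can be written in Python as below. Treating each coder as an "item" and each video as an observation is an assumption about how the statistic was applied here, and the function name and sample numbers are hypothetical.

```python
from statistics import variance


def cronbach_alpha(ratings_by_coder):
    """Compute Cronbach's alpha for k coders rating the same n videos.

    `ratings_by_coder` is a list of k equal-length lists, one per coder
    (assumed layout). Sample variances (n - 1 denominator) are used.
    """
    k = len(ratings_by_coder)
    if k < 2:
        raise ValueError("at least two coders are required")
    n = len(ratings_by_coder[0])
    if n < 2 or any(len(r) != n for r in ratings_by_coder):
        raise ValueError("each coder needs the same number (>= 2) of ratings")

    # Sum of the variances of each coder's ratings.
    item_variance_sum = sum(variance(r) for r in ratings_by_coder)
    # Variance of the per-video totals across coders.
    totals = [sum(values) for values in zip(*ratings_by_coder)]
    total_variance = variance(totals)
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Made-up durations (seconds) of one role in four videos, two coders.
coder_a = [10, 20, 30, 40]
coder_b = [12, 18, 33, 39]
alpha = cronbach_alpha([coder_a, coder_b])
```

Values close to 1 indicate that the coders' measurements rise and fall together across videos.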

RESULTS AND DISCUSSION

The coders were consistent in identifying roles and cues. Cronbach's alpha values for roles and for physical and verbal cues varied from ![]() to ![]() for duration and frequency, respectively.

The therapist spent time in all three roles. The demonstrator role was the least used, which may be because the videos were taken after the therapist had explained the task. Therapists spent the most time in the helper role (52%), especially when the patient was low functioning. Correspondingly, if the patient was high functioning, the therapist spent more time in the observer role (41.41%). Additionally, the frequencies and durations of the therapist and patient roles correlated. The therapist spent the least amount of time in the demonstrator role (6.58%) and the patient spent the least amount of time in the observer role (6.77%). The therapist demonstrated the task at the beginning or if clarification was required.

Role changes were indeed caused by cues. Of the 11 physical cues, the reaches, lifts and stabilizes cues were the main ones that caused therapist role changes, with mean frequencies of 37.5%, 37% and 41.5%, respectively. The stabilizes cue was used when patients required physical support to perform the task. Reaches had a higher mean frequency and a shorter duration than lifts. This was expected, as the reach movement by the therapist is typically quick. The remaining physical cues can be considered patient errors that required therapist intervention and led to role changes.

The supports, requests/ asks, commands and states verbal cues had high frequencies of 59.5%, 38.5%, 30% and 59.5%, respectively. The states cue is a statement that tells the patient to initiate, continue or complete a task without giving specific instructions, for example, "try another way". The supports cue is used for encouragement. Of the 4 verbal cues by the patient, describes/ explains/ states had the highest frequency. These cues occurred when patients were clarifying the task or explaining their actions and understanding of the task.

CONCLUSION AND FUTURE WORK

The stimulus-response model appears able to capture the relationships observed between patient and therapist in a variety of daily living tasks, and it presents a reasonable model for robot-patient interactions that may more closely approach real therapy. Although the data on cues and roles presented here were specific to the tasks evaluated and the patients involved in this study, we anticipate that, given new tasks and patients, the overall interaction scheme would remain the same, while the percentage of time spent in each role would change depending on the patient's level of impairment and the specific task. The robot would still need to switch dynamically between the three roles based on the cues and feedback from its sensors. Our next goal is to develop motion capture tools that enable the robot to be taught the cues and when to change roles.

References

Arkin, R. C. (1998). Behavior-based robotics. MIT Press.

Brooks, D. A., & Howard, A. M. (2012). Quantifying upper-arm rehabilitation metrics for children through interaction with a humanoid robot. Applied Bionics and Biomechanics, 9(2), 157-172.

Costandi, M. (2014). Rehabilitation: Machine recovery. Nature, 510(7506), S8-S9.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297-334.

Fasola, J., & Matarić, M. J. (2012). Using socially assistive human–robot interaction to motivate physical exercise for older adults. Proc. of IEEE, 100(8), 2512-2526.

International Clinical Educators (ICE) (n.d.). ICE Video Library. Retrieved January 12, 2017, from http://www.icelearningcenter.com/ice-video-library

Loureiro, R. C., Harwin, W. S., Nagai, K., & Johnson, M. (2011). Advances in upper limb stroke rehabilitation: a technology push. Med & bio engr & computing, 49(10), 1103-1118.

Matarić, M. J. (1999). Behavior-based robotics. MIT encyclopedia of cognitive sciences, 74-77.

Matarić, M. J., Eriksson, J., Feil-Seifer, D. J., & Winstein, C. J. (2007). Socially assistive robotics for post-stroke rehabilitation. JNER, 4(1), 1.

Mehrholz, J., Hädrich, A., Platz, T., Kugler, J., & Pohl, M. (2012). Electromechanical and robot‐assisted arm training for improving generic activities of daily living, arm function, and arm muscle strength after stroke. The Cochrane Library.

Radwin, R. G., & Yen, T. Y. (2005, September 19). Multimedia Video Task Analysis (MVTA). Retrieved January 12, 2017, from https://mvta.engr.wisc.edu/

Sawers, A., & Ting, L. H. (2014). Perspectives on human-human sensorimotor interactions for the design of rehabilitation robots. JNER, 11(1), 1.

Acknowledgements

The Department of Physical Medicine and Rehabilitation at the University of Pennsylvania funded this work. We also want to thank our coders, Lucas Adair and Sarah Laskin.