THE "AWARE SYSTEM": PROTOTYPING AN AUGMENTATIVE COMMUNICATION INTERFACE

ABSTRACT

Approximately 500,000 people worldwide have a condition known as locked-in syndrome. Locked-in persons are cognitively intact but have little or no ability to move, which makes it impossible for them to use traditional assistive technologies. New medical technologies for controlling a computer from brain signals offer hope for these individuals. The "Aware System" is one part of a larger body of work that examines the use of electroencephalogram (EEG) technology to operate an environmental control and communication system. Although much work has been done on augmentative communication systems for people with disabilities, the Aware System differs in that it is informed by environmental context and uses neural signals as input (1).

BACKGROUND

Locked-in syndrome affects roughly half a million people who are intelligent and alert but completely paralyzed and unable to communicate; they are essentially prisoners in their own bodies. Many have severe disabilities such as quadriplegia or progressive conditions such as amyotrophic lateral sclerosis (ALS, or Lou Gehrig's disease). Brain-computer interfaces (BCIs) can allow these people to communicate and to control their environment, improving their health and comfort. Potential future applications include education and recreation via the Internet, and direct brain control of prosthetics or muscle stimulators that could restore movement to paralyzed limbs.

Several methods of controlling a computer from brain signals have been developed. One technique uses electrodes implanted directly in the brain (2), so that neural signals can be recorded and interpreted to control a computer. Other techniques use electroencephalogram (EEG) technology to detect thresholds in temporal patterns of brain signals (3)(4). Because the EEG method is non-invasive, we focus our work on this technique.

STATEMENT OF THE PROBLEM

Current neurally controlled communication systems have significant limitations for patients who cannot move any part of their body. Research indicates that such users prefer to use their original language rather than an alternative symbol system (5). Building a system around the user's natural language is challenging because it must be both fast and accurate. Normal spoken conversation proceeds at between 150 and 200 words per minute (wpm), while assistive communication systems typically attain less than 15 wpm (6). Neural control complicates this picture further, because brain-controlled interaction is error-prone and low-bandwidth (7); the average speed of a neurally controlled speller is three letters per minute (8). The objective of the Aware System is to develop an application that combines context-sensitive language prediction and brain-controlled interaction in a usable interface, improving on the communication methods currently available to locked-in patients.
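To put these rates on a common scale, the speller's letters per minute can be converted to words per minute. This rough worked comparison assumes the common text-entry convention of five letters per word; that convention is our assumption and is not part of the cited measurements.

$$
\begin{aligned}
r_{\text{BCI}} &= \frac{3\ \text{letters/min}}{5\ \text{letters/word}} = 0.6\ \text{wpm},\\
\frac{r_{\text{speech}}}{r_{\text{BCI}}} &= \frac{150\ \text{to}\ 200\ \text{wpm}}{0.6\ \text{wpm}} \approx 250\ \text{to}\ 333,\\
\frac{r_{\text{AAC}}}{r_{\text{BCI}}} &< \frac{15\ \text{wpm}}{0.6\ \text{wpm}} = 25.
\end{aligned}
$$

Under this assumption, a neurally controlled speller is roughly 250 to 333 times slower than speech, and even conventional assistive systems can be up to about 25 times faster than it.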

RATIONALE

Four factors were considered important when designing the Aware System interface: speed, accuracy, context, and the mood of the patient. Speed is important because long silences have detrimental effects on the listener's attitude toward the speaker (6). Accuracy is important because patients strongly prefer free-form communication; users will often struggle to say exactly what they mean despite the slow rate (6). The context of a conversation, such as who is in the room, is important because it can narrow the word and sentence options offered by the augmentative system. Past communication research with a locked-in patient using eye blinks for "yes" and "no" has shown that the style and content of interactions vary according to the role of the person with whom the user is communicating (1). Mood can strongly influence word choice and sentence structure, and it offers the nonspeaker a way to retain a sense of self. Users of the Conversation Helped by Automatic Talk (CHAT) system developed by Alm, Arnott, and Newell reported that they felt their personalities were conveyed when using it, because CHAT alters words and phrases according to the user's chosen mood (6).

DESIGN

The design of the Aware System has evolved over several years of research into the needs of locked-in users and incorporates communication, environmental control, and affective capabilities. Brain signals are acquired and translated into control signals, which the system passes to the interface.

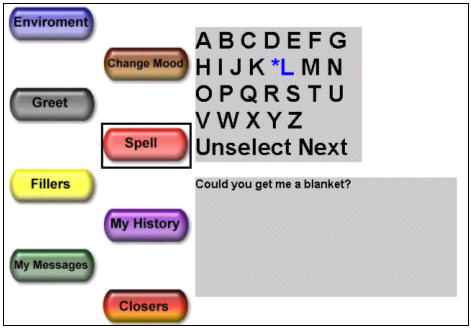

The interface design of the Aware System takes into account the different forms of communication that a user may need. Buttons are arranged vertically in a zigzag formation down the screen to help prevent accidental selections.

The first button on the screen is "environmental control," which the user selects to manipulate objects in the environment, such as a TV. The second button allows the user to select a mood, such as "happy" or "formal." The remaining buttons are positioned in the natural sequence of a conversation. Research in dialogue design has shown that most conversations follow a predictable order: they start with "openers," move on to topic discussion, and end with "closers," with "fillers" in between to signal the listener's continued attention (6).
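The mood button and the conversation-stage buttons can be thought of as two keys into a store of phrases. The following is a minimal Python illustration, assuming a hypothetical `Stage` enumeration and a hypothetical `PHRASES` table; the button order (opener, topic, filler, closer) and the example phrases are our assumptions, not the Aware System's actual vocabulary. It mirrors the CHAT idea of wording the same conversational act differently depending on mood.

```python
from enum import Enum


class Stage(Enum):
    """Conversation stages, in an assumed top-to-bottom button order."""
    OPENER = "opener"
    TOPIC = "topic"
    FILLER = "filler"
    CLOSER = "closer"


# Hypothetical phrase table: one conversational act, worded differently per mood.
PHRASES = {
    (Stage.OPENER, "happy"): "Hi! Great to see you!",
    (Stage.OPENER, "formal"): "Good afternoon. Thank you for visiting.",
    (Stage.FILLER, "happy"): "Wow, really?",
    (Stage.FILLER, "formal"): "I see.",
    (Stage.CLOSER, "happy"): "Bye! Come back soon!",
    (Stage.CLOSER, "formal"): "Thank you for coming. Goodbye.",
}


def button_order():
    """Return stage labels in on-screen order (Enum iteration keeps definition order)."""
    return [stage.value for stage in Stage]


def phrase_for(stage, mood, default_mood="formal"):
    """Look up the phrase for a stage in the chosen mood.

    Falls back to default_mood when the chosen mood has no entry, and returns
    None when the stage has no canned phrase at all (e.g. free topic discussion).
    """
    return PHRASES.get((stage, mood), PHRASES.get((stage, default_mood)))
```

In this sketch, topic discussion has no canned phrase (`phrase_for(Stage.TOPIC, "happy")` returns `None`), which reflects that topic content would come from the user's stored material or the spelling system rather than a fixed list.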

The "My History" option lets users store conversational topics for each visitor, and "My Messages" lets the user store narratives for later use. The Aware spelling system uses a method similar to cogeneration (5): it allows free-form conversation but assists the user with word and sentence prediction. For example, selecting the letters "bl" yields predictions such as "blanket" and "blue." From the selected word, the system then predicts a sentence using contextual information such as who is in the room, the time of day, conversation history, and the user's habits. The user may bypass all predictive features to form a unique phrase or sentence when necessary.
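The two prediction steps can be sketched in a few lines of Python. This is a minimal illustration, not the Aware System's implementation: word prediction is modeled as prefix matching over a vocabulary ranked by usage frequency, and sentence prediction as ranking candidate sentences by how many context tags (such as who is present or the time of day) they share with the current situation. `VOCABULARY`, `SENTENCES`, the counts, and the tag names are all hypothetical.

```python
# Hypothetical vocabulary with usage counts, e.g. accumulated from the user's history.
VOCABULARY = {"blanket": 12, "blue": 5, "blind": 2, "book": 7, "water": 9}

# Hypothetical candidate sentences, each tagged with contexts in which it is typically used.
SENTENCES = [
    ("I need my blanket.", {"evening", "nurse"}),
    ("Can you fix my blanket?", {"nurse"}),
    ("The blanket is too warm.", {"afternoon"}),
    ("My favorite color is blue.", {"family"}),
]


def predict_words(prefix, k=3):
    """Return up to k vocabulary words that start with prefix, most frequent first."""
    matches = [word for word in VOCABULARY if word.startswith(prefix)]
    # Sort by descending frequency, breaking ties alphabetically.
    matches.sort(key=lambda word: (-VOCABULARY[word], word))
    return matches[:k]


def predict_sentences(word, context, k=2):
    """Return up to k sentences containing word, ranked by overlap with the current context tags."""
    candidates = [(text, tags) for text, tags in SENTENCES if word in text.lower()]
    # sort() is stable, so sentences with equal overlap keep their listed order.
    candidates.sort(key=lambda item: -len(item[1] & context))
    return [text for text, _ in candidates[:k]]
```

For example, `predict_words("bl")` returns `["blanket", "blue", "blind"]`, and with a nurse present in the evening, `predict_sentences("blanket", {"nurse", "evening"})` ranks "I need my blanket." first. Because predictions are only offered and never forced, the user can still ignore them and spell a free-form sentence.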

DEVELOPMENT

The Aware System prototype was developed in Macromedia Dreamweaver 4.0 and simulates several key functions of the system, such as spelling words and creating sentences. The system is now being developed in Borland C++ 6.0 to allow seamless integration with the Brain Computer Interface 2000 system (BCI 2000) (4), which acquires and translates the neural signals for the Aware applications.

EVALUATION

Several small studies are currently under way to evaluate different aspects of the system.

One study will determine which navigation paradigm the system should use: autoscan or logical control. In autoscan navigation, a black box cursor moves over the selection buttons, and the participant's signal selects the highlighted button. In logical control, the signal moves the cursor from one selection button to the next, and a selection is made when no signal is sent for a fixed amount of time. In the first round of evaluation, five able-bodied participants will test the brain-controlled interface with each navigation paradigm; the interface will then be tested in situ with locked-in subjects.
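The difference between the two paradigms can be made concrete with a small discrete-time model. In this Python sketch, which is a simplification and not the study's software, `signals` is a hypothetical list with one boolean per time tick (True when the BCI detects an intentional signal). The autoscan cursor is assumed to advance one button per tick, and `dwell_ticks` is a hypothetical stand-in for the fixed timeout used by logical control.

```python
def autoscan(signals, n_buttons):
    """Autoscan: the cursor advances one button per tick and wraps around;
    a signal selects the currently highlighted button.

    Returns the selected button index, or None if no signal occurs.
    """
    for tick, signal in enumerate(signals):
        highlighted = tick % n_buttons
        if signal:
            return highlighted
    return None


def logical_control(signals, n_buttons, dwell_ticks):
    """Logical control: each signal moves the cursor to the next button;
    the button under the cursor is selected once dwell_ticks consecutive
    ticks pass without a signal.

    Returns the selected button index, or None if no selection is made.
    """
    cursor = None  # cursor starts off the buttons; the first signal places it on button 0
    quiet = 0      # consecutive ticks without a signal since the last move
    for signal in signals:
        if signal:
            cursor = 0 if cursor is None else (cursor + 1) % n_buttons
            quiet = 0
        elif cursor is not None:
            quiet += 1
            if quiet >= dwell_ticks:
                return cursor
    return None
```

For instance, with five buttons, `autoscan([False, False, True], 5)` selects button 2, while `logical_control([True, True, True, False, False], 5, dwell_ticks=2)` reaches the same button with three signals followed by two quiet ticks. In this model a spurious signal under autoscan immediately commits a selection, whereas under logical control it only moves the cursor by one button, which is one of the trade-offs such a comparison can reveal.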

A second, language-focused study examines common topics and communication methods between locked-in patients and their visitors in order to determine the best core vocabulary for the system. Based on the results of these studies, further work will evaluate the Aware System interface itself, for example by determining the optimum number of items in a menu.

CONCLUSION

The Aware System prototype was designed to illustrate a vision for a system that helps locked-in patients manipulate their environment and communicate using neural control, enhanced by context-sensitive prediction. The interface design incorporates several interaction styles to improve the speed and accuracy of patient communication. The goal is for users to move seamlessly between the different modes of interaction, so that they can easily go from a greeting, to spelling a word, to completing a sentence, while conveying mood and personality.

REFERENCES

1. Carroll, K., Schlag, C., Kirikci, O., & Moore, M. (2002). Communication by Neural Control. CHI '02 Extended Abstracts on Human Factors in Computing Systems.

2. Kennedy, P., Bakay, R., Moore, M., Adams, K., & Goldthwaite, J. (2000). Direct Control of a Computer from the Human Central Nervous System. IEEE Transactions on Rehabilitation Engineering, 8(2).

3. Mason, S. G., & Birch, G. E. (2000). A Brain-Controlled Switch for Asynchronous Control Applications. IEEE Transactions on Biomedical Engineering, 47(10), 1297-1307.

4. Wolpaw, J. R., McFarland, D. J., & Vaughan, T. M. (2000). Brain-computer interface research at the Wadsworth Center. IEEE Transactions on Rehabilitation Engineering, 8, 222-226.

5. Copestake, A. (1996). Applying Natural Language Processing Techniques to Speech Prostheses. Working Notes of the 1996 AAAI Fall Symposium on Developing Assistive Technology for People with Disabilities.

6. Alm, N., Arnott, J. L., & Newell, A. F. (1992). Prediction and conversational momentum in an augmentative communication system. Communications of the ACM, 35(5), 46-57.

7. Mankoff, J., Dey, A., & Moore, M. (2002). Web Accessibility for Low Bandwidth Input. In Proceedings of ASSETS '02. ACM Press, Edinburgh, Scotland.

8. Wolpaw, J. R., Birbaumer, N., et al. (2002). Brain-computer interfaces for communication and control. Clinical Neurophysiology, 113(6), 767-791.

ACKNOWLEDGMENTS

We are grateful to our research sponsor, the National Science Foundation IIS Universal Access program.

Lori K. Adams

835 Berne Street, Atlanta, GA 30316

Phone: 404 627-6210

Email: lorikristin@yahoo.com