EYE GAZE TRACKING / ELECTROMYOGRAM COMPUTER CURSOR CONTROL SYSTEM FOR USERS WITH MOTOR DISABILITIES

ABSTRACT

Eye Gaze Tracking (EGT) systems have been used to provide computer cursor control to individuals with motor disabilities (1). Involuntary movements of the eyes during a fixation limit the stability of this form of cursor control. An alternative system has been developed that steps the cursor when it detects contractions of selected facial muscles, by monitoring the corresponding Electromyogram (EMG) signals (2). The system presented here combines EGT and EMG monitoring to control the cursor, preserving the advantages and minimizing the shortcomings of each individual modality. Evaluation of the EGT/EMG prototype indicates better performance than our previous EMG-only system and higher cursor stability than the EGT system.

BACKGROUND

Eye Gaze Tracking (EGT) systems, based on an infrared video camera that continuously captures images of the user's eye, have been used to provide computer cursor control for individuals with motor disabilities who cannot use a standard mouse (1). In these systems the computer cursor tracks the user's point of gaze (POG), as estimated by the EGT system. However, two significant limitations have been identified in these systems: a) the unavoidable shifts of the POG around a given fixation point result in a jittery screen cursor, and b) emulation of mouse "clicks" by detection of special events in the EGT system (e.g., dwelling of the POG on a particular area of the screen, or winking of the eye) tends to result in frequent artifactual clicks. These limitations prompted us to investigate alternative mechanisms to give cursor control capability to computer users with motor disabilities.

Since many of these users retain control over their facial muscles, our group targeted the contraction of certain facial muscles not significantly involved in common activities (e.g., speech generation) as the form of user activity to drive an alternative cursor control interface. Our EMG-based cursor control system uses real-time spectral analysis of three EMG signals to command cursor steps in the LEFT, RIGHT, UP and DOWN directions. The EMG signals are obtained through surface electrodes placed on the left and right temples and on the forehead of the user. A unilateral clenching of the left side of the mandible (by contraction of the left Temporalis muscle) is identified and converted by the system into a cursor step to the left. Similarly, clenching the right side of the mandible, raising the eyebrows, and lowering the eyebrows result in cursor steps to the right, up and down, respectively. Detailed information on the design and evaluation of the EMG-only cursor control system has been presented elsewhere (2).
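The mapping from recognized muscle activity to cursor steps can be sketched as a small lookup. This is only an illustration under stated assumptions: the spectral classifier that actually labels each contraction (reference 2) is not reproduced, and the names `EmgEvent`, `emg_step` and the 5-pixel `STEP_PIXELS` are hypothetical, not taken from the paper.

```python
from enum import Enum

# Hypothetical labels for the four muscle activities described in the text.
# The real system produces these from spectral analysis of the EMG (not shown here).
class EmgEvent(Enum):
    LEFT_CLENCH = "left_clench"            # left Temporalis, left-temple electrode
    RIGHT_CLENCH = "right_clench"          # right Temporalis, right-temple electrode
    EYEBROWS_RAISED = "eyebrows_raised"    # forehead electrode
    EYEBROWS_LOWERED = "eyebrows_lowered"  # forehead electrode

STEP_PIXELS = 5  # assumed step size; the paper does not state one

def emg_step(event):
    """Return the cursor displacement (dx, dy), in pixels, for a detected event.

    Screen coordinates are assumed to grow rightward (x) and downward (y),
    so an UP step has a negative dy. None means no contraction was detected.
    """
    if event is None:
        return (0, 0)
    steps = {
        EmgEvent.LEFT_CLENCH: (-STEP_PIXELS, 0),
        EmgEvent.RIGHT_CLENCH: (STEP_PIXELS, 0),
        EmgEvent.EYEBROWS_RAISED: (0, -STEP_PIXELS),
        EmgEvent.EYEBROWS_LOWERED: (0, STEP_PIXELS),
    }
    return steps[event]
```

For example, `emg_step(EmgEvent.LEFT_CLENCH)` gives `(-5, 0)`, a single short step to the left.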
In summary, the EMG-based system provided a steady cursor and a reliable clicking mechanism, allowing fine adjustments of the cursor position, but it required longer times for large excursions because of the stepping nature of the approach. This paper addresses the fusion of the EGT- and EMG-based cursor control systems into a "hybrid" unit that switches between the two modalities as needed.

STATEMENT OF THE PROBLEM

This project pursued the integration of an EGT-based system with our EMG-based system, towards the development of a "hybrid" EGT/EMG cursor control system that switches between modalities by estimating the context in which the user is providing one or both forms of input. The project also included a comparative evaluation of the "hybrid" system against our EMG-only system.

RATIONALE

We propose that while the user is working within a small neighborhood of the screen around the current cursor position, (Cx(n), Cy(n)), EMG-based control should take precedence and EGT-based control should be disabled. In this way, the cursor will remain static unless the user performs muscle contractions to command short, steady displacements or a "click" operation. On the other hand, if the user intends to interact with an area of the screen at a considerable distance from the current cursor position, he/she will first direct his/her gaze to that area. At that moment the system should detect the user's intent and switch control of the cursor to the EGT subsystem, which can quickly re-position the cursor at the user's current point of gaze. The key measurement for this context-switching approach is what we call the "POG drive": the instantaneous distance between the current estimated point of gaze and the previous cursor position.
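As a worked illustration with hypothetical numbers (not taken from the study), suppose the previous cursor position is (400, 300) pixels and the current estimated POG is (460, 380) pixels. Assuming the POG drive is the Euclidean distance between these two points:

$$\text{POG drive} = \sqrt{(460-400)^2 + (380-300)^2} = \sqrt{3600 + 6400} = \sqrt{10000} = 100 \text{ pixels}$$

A gaze shift of this size points to a region well away from the cursor, whereas POG jitter during a fixation on the cursor would keep this value small.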

DESIGN

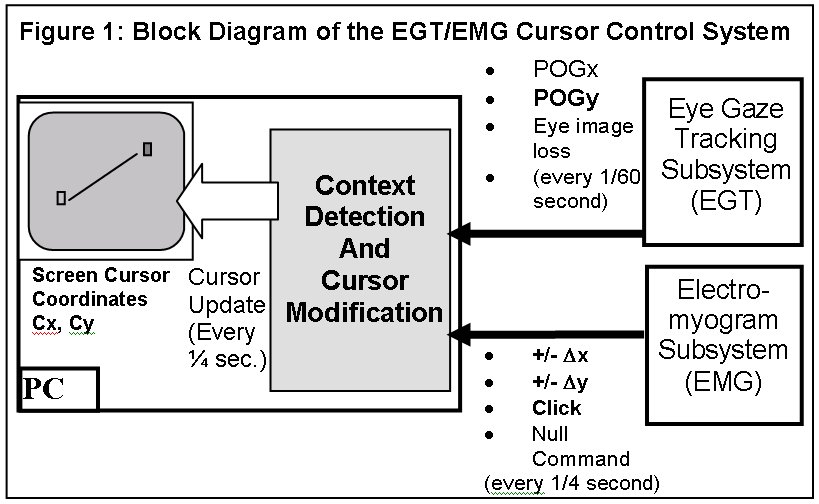

The decision as to which modality (EGT or EMG) should effectively control the position and state of the screen cursor is based on the POG drive, calculated as:

$$\text{POG\_drive}(n) = \sqrt{\left(POG_x(n) - C_x(n-1)\right)^2 + \left(POG_y(n) - C_y(n-1)\right)^2}$$

Only if POG_drive > R, where R is a customizable threshold (e.g., 80 pixels), is the output from the EGT subsystem used to instantaneously update the position of the screen cursor: Cx(n) = POGx(n) and Cy(n) = POGy(n). Otherwise, the position of the screen cursor is updated incrementally (if at all), according to the output from the EMG subsystem: Cx(n) = Cx(n-1) + Δx(n-1) and Cy(n) = Cy(n-1) + Δy(n-1) (see Figure 1).
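The switching rule above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes the POG drive is a Euclidean distance in screen pixels, and the names `pog_drive`, `hybrid_update` and `R_PIXELS` are hypothetical.

```python
import math

R_PIXELS = 80.0  # customizable switching threshold R (80 pixels is the example value in the text)

def pog_drive(pog, prev_cursor):
    """Euclidean distance (pixels) between the current POG estimate and the previous cursor position."""
    return math.hypot(pog[0] - prev_cursor[0], pog[1] - prev_cursor[1])

def hybrid_update(prev_cursor, pog, emg_delta, r=R_PIXELS):
    """Compute C(n) from C(n-1), POG(n) and the EMG step Delta(n-1).

    prev_cursor: (Cx(n-1), Cy(n-1))
    pog:         (POGx(n), POGy(n))
    emg_delta:   (dx(n-1), dy(n-1)); (0, 0) when no contraction was detected
    Returns the new cursor position and the modality that set it.
    """
    if pog_drive(pog, prev_cursor) > r:
        # Gaze has moved far from the cursor: jump directly to the POG (EGT control).
        return (pog[0], pog[1]), "EGT"
    # Gaze is near the cursor: ignore gaze jitter and apply the EMG step, if any.
    return (prev_cursor[0] + emg_delta[0], prev_cursor[1] + emg_delta[1]), "EMG"
```

For instance, with the cursor at (400, 300), a POG of (430, 320) gives a POG drive of about 36 pixels, so a right-step of (5, 0) moves the cursor to (405, 300) under EMG control; a subsequent POG of (900, 600) exceeds R and the cursor jumps there under EGT control. A POG drive exactly equal to R leaves EMG in control, matching the strict inequality in the rule.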


DEVELOPMENT


The system was implemented according to the block diagram shown in Figure 1, in which the "Context Detection and Cursor Modification" block operates as described in the DESIGN section of this paper.

EVALUATION

The performance of the "hybrid" EGT/EMG system was compared to that of our EMG-only system. The evaluation protocol consisted of a target selection task in which a Start Button was presented to the user in one corner of a 17-inch (43.18 cm diagonal) monitor screen. The Start Button always measured 8.5 x 8.5 mm. The protocol also showed the user a Stop Button, always at the center of the screen, in one of four sizes: 8.5 x 8.5 mm, 12.5 x 12.5 mm, 17 x 17 mm, or 22 x 22 mm. Before each trial, the cursor was placed on the Start Button. The subject was then asked to use the cursor control system under test to a) click on the Start Button, to start a timer, b) move the cursor towards the Stop Button, following any trajectory, and c) click on the Stop Button, to stop the timer. At the end of each trial, the time in seconds taken by the user to complete the task was displayed and logged to a database.

Each test session consisted of 20 trials with each Stop Button size, for a total of 80 trials. Before each trial, the program placed the Start Button in one of the four corners of the screen, chosen at random. Two groups of six college-aged volunteers performed the evaluation protocol, one group for each of the two cursor control systems (EMG and EGT/EMG).
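One session of the protocol can be sketched as a generated trial list. This is an illustration only: the paper does not say in what order the Stop Button sizes were presented, so presenting them in blocks in the listed order is an assumption, and `make_session` and the corner labels are hypothetical names.

```python
import random

STOP_SIZES_MM = [8.5, 12.5, 17.0, 22.0]  # Stop Button side lengths from the protocol
CORNERS = ["top-left", "top-right", "bottom-left", "bottom-right"]
TRIALS_PER_SIZE = 20

def make_session(seed=None):
    """Build one 80-trial session: 20 trials per Stop Button size, each with a random Start corner.

    Assumption: sizes are presented in blocks in the order listed above.
    A seed can be given to make the random corner sequence reproducible.
    """
    rng = random.Random(seed)
    session = []
    for size in STOP_SIZES_MM:
        for _ in range(TRIALS_PER_SIZE):
            session.append({"stop_size_mm": size, "start_corner": rng.choice(CORNERS)})
    return session
```

Each entry would then drive one trial: place the Start Button in `start_corner`, draw the Stop Button of `stop_size_mm` at the screen center, and log the time between the two clicks.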

RESULTS AND DISCUSSION


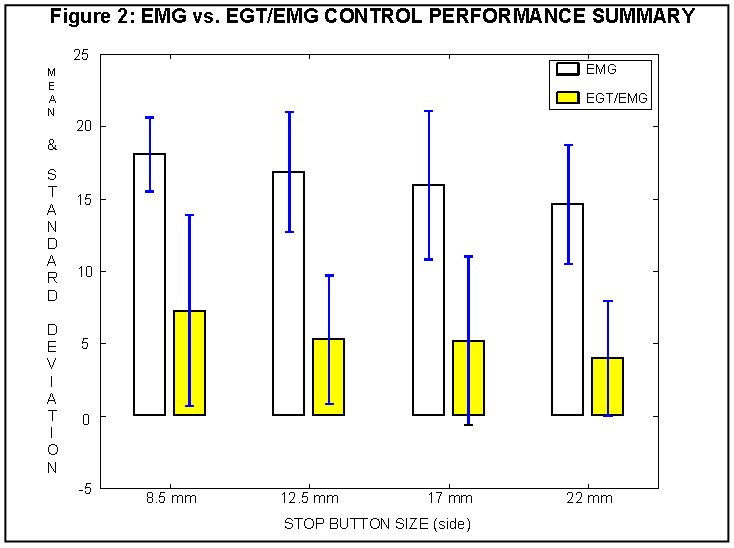

Figure 2 shows the performance of the two systems, grouped by Stop Button size. For each size, the height of a bar represents the average of the 120 trials (6 subjects, 20 trials each) executed with a given system. The empty bar represents the average time recorded with the EMG system, and the filled bar the average time with the EGT/EMG system, for a given Stop Button size. The vertical line through the top of each bar extends ±1 standard deviation, computed from the same 120 recorded times as the corresponding mean.
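The per-bar statistics, and the "standard deviation relative to the mean" used in the discussion, can be computed from the logged times roughly as follows. This is a sketch, not the authors' analysis code: it assumes the sample standard deviation (the paper does not name the estimator), `summarize` is a hypothetical helper, and the example values are placeholders, not the study's data.

```python
import statistics
from collections import defaultdict

def summarize(trials):
    """Group completion times by (system, Stop Button size) and report n, mean, SD and SD/mean.

    trials: iterable of (system, stop_size_mm, seconds) tuples.
    Uses the sample standard deviation (statistics.stdev), which needs at least two times per group.
    """
    groups = defaultdict(list)
    for system, size, seconds in trials:
        groups[(system, size)].append(seconds)
    summary = {}
    for key, times in groups.items():
        mean = statistics.mean(times)
        sd = statistics.stdev(times)
        # cv: dispersion relative to the mean (coefficient of variation)
        summary[key] = {"n": len(times), "mean": mean, "sd": sd, "cv": sd / mean}
    return summary

# Placeholder values for illustration only, NOT the study's data.
example = [("EMG", 8.5, 10.0), ("EMG", 8.5, 12.0),
           ("EGT/EMG", 8.5, 4.0), ("EGT/EMG", 8.5, 6.0)]
stats = summarize(example)
```

With these placeholders both groups have the same SD (about 1.41 s), but the EGT/EMG group has the smaller mean and therefore the larger SD/mean ratio, which is the kind of relative dispersion the discussion refers to.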

Figure 2 indicates that the "hybrid" EGT/EMG system achieved a significant reduction in the average time required to complete the experimental task, for all sizes of the Stop Button. However, the figure also shows an apparent increase in the dispersion of the data points around the means (standard deviations that are larger relative to their corresponding means). This may reflect the higher complexity of the multimodal system, which seems to call for a longer user training period. Nonetheless, analysis of the data as a 4 x 2 (Stop Button size x cursor control method) repeated measures mixed model design indicated significant differences (F(1,10) = 64.54, p < 0.01) between treatments (i.e., between the EMG-only and the EGT/EMG systems).
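The degrees of freedom of the reported F statistic follow from the group sizes. Assuming a split-plot structure, with Stop Button size as the within-subjects factor and cursor control method as the between-subjects factor (one group of six volunteers per system, N = 12, k = 2 groups), the method effect is tested against the variability among subjects within groups:

$$df_{\text{method}} = k - 1 = 2 - 1 = 1, \qquad df_{\text{subjects within groups}} = N - k = 12 - 2 = 10$$

which is consistent with the reported F(1,10).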

REFERENCES

1. Lynch WJ, "Hardware update: The Eyegaze Computer System™", J. of Head Trauma Rehab., 1994; 9(4): 77-80.

2. Barreto AB, Scargle SD, Adjouadi M, "A Practical EMG-based Human-Computer Interface for Users with Motor Disabilities", J. of Rehabilitation Research & Development, 2000; 37(1): 53-63.

This work was sponsored by grants: NSF-EIA-9906600 and ONR-N00014-99-0952.

Armando Barreto
FIU Electrical & Computer Engineering
10555 West Flagler Street, Room EAS-3956
Miami, FL 33174
305-348-3711, 348-3707 (fax)
barretoa@fiu.edu