INTELLIGENT VOICE CONTROL OF ELECTRONIC DEVICES

ABSTRACT

Many routine activities, such as obtaining weather forecasts, purchasing goods and services, making appointments, using e-government services, and navigating and interacting with indoor environments, increasingly rely on electronic devices with embedded operating systems, such as personal computers, laptops, personal digital assistants, and electronic aid to daily living (EADL) devices attached to wheelchairs. Accessing these devices remains a major challenge for people who are blind or visually impaired and for people who do not have full use of their hands. This paper presents a technology that enables individuals with disabilities to control and interact with electronic devices through speech. The technology has been prototyped and evaluated in a virtual desktop assistant and a mobile robot simulating a wheelchair.

BACKGROUND

Auditory User Interfaces (AUIs) grew out of and extended the research on screen readers, software programs that allow visually impaired users to read the contents of GUIs by transmitting text to a speech synthesizer or a Braille display.

Mynatt (1) developed the Mercator AUI system to address the limitations of screen readers. Mercator is a framework for monitoring, modeling, and translating graphical user interfaces to applications without modifying the applications themselves. The human-computer interaction model advocated in Mercator is not hands-free: to navigate AUIs the user must use numeric keyboards, which makes the system inaccessible for people who do not have good use of their hands.

The Textual and Graphical User Interfaces for Blind People (GUIB) project (2) is aimed at providing blind and visually impaired individuals with transparent access to GUI-based applications. GUIB translates screen contents into a tactile and auditory representation that is based on the spatial organization of the graphical interface. Interacting with GUIB is also based on Braille displays.

Raman (3) addressed the limitations of screen readers by proposing a speech-enabling approach that bridged the gap between applications and their auditory interfaces. Raman viewed AUIs as an integral part of the applications to which they interface. He used this approach to implement Emacspeak, a full-fledged speech interface to the Emacs editor, which provided visually impaired users with access to Unix network resources. While Emacspeak audio-formats the text and augments it with auditory icons, it does not provide any natural language dialog capabilities.

RESEARCH QUESTION

The objective of this study was to research and prototype an intelligent voice control interface architecture that goes beyond AUIs in that it enables individuals with disabilities to control, interact with, introspect, and train electronic devices through speech. This objective tests the hypothesis that, because language and action share the same cognitive mechanisms, electronic devices that have those mechanisms built into them can not only communicate verbally with their users but also modify their behavior on the basis of verbal instructions (4).

METHOD

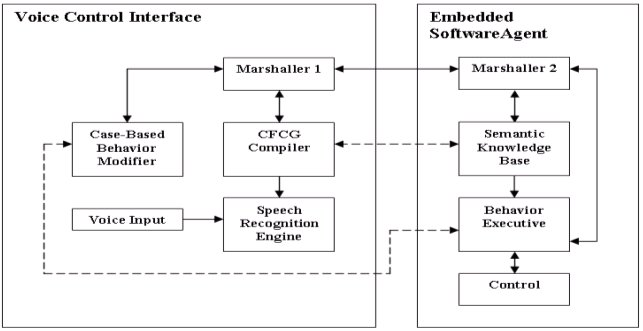

As shown in Figure 1, the architecture consists of two modules: the voice control interface (VCI) (left-hand side) and the software agent (right-hand side). The software agent is embedded into an electronic device. Voice inputs are fed into the system by the user through a microphone. The Speech Recognition Engine (SRE) maps voice inputs into goals to be satisfied by the Behavior Executive (BE). The BE is a software component that interfaces directly to the operating system of the device in which it is embedded.
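The flow from a recognized utterance to a goal on the BE's agenda can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `Goal` and `BehaviorExecutive` classes, the `recognize` function, and the phrase table are hypothetical names, and the speech recognizer is replaced by a lookup on already-transcribed text.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class Goal:
    """A goal for the Behavior Executive (hypothetical representation)."""
    name: str
    args: tuple = ()


class BehaviorExecutive:
    """Keeps a goal agenda and runs the behavior registered for each goal."""

    def __init__(self) -> None:
        self.agenda: List[Goal] = []
        self.behaviors: Dict[str, Callable[..., str]] = {}

    def register(self, goal_name: str, behavior: Callable[..., str]) -> None:
        self.behaviors[goal_name] = behavior

    def install(self, goal: Goal) -> None:
        self.agenda.append(goal)

    def run(self) -> List[str]:
        """Satisfy agenda goals in arrival order and return their results."""
        results = []
        while self.agenda:
            goal = self.agenda.pop(0)
            results.append(self.behaviors[goal.name](*goal.args))
        return results


def recognize(utterance: str, phrase_to_goal: Dict[str, Goal]) -> Optional[Goal]:
    """Stand-in for the SRE: map transcribed text to a goal, or None."""
    return phrase_to_goal.get(utterance.strip().lower())
```

In this sketch, a device-specific behavior would be registered with `register`, and an utterance that matches no phrase yields `None` rather than a goal, so nothing is installed on the agenda.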

A goal is given to the VCI Marshaller (Marshaller 1), which encodes it in a message and broadcasts the message to the agent's Marshaller (Marshaller 2). The agent's Marshaller unmarshalls the message and installs the recovered goal on the BE's goal agenda.
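The marshalling round trip can be sketched as follows. The source does not specify a wire format, so JSON over bytes is an assumption here, as are the function names and message fields; the broadcast transport between the two marshallers is omitted.

```python
import json
from typing import Any, Dict, List


def marshal_goal(name: str, args: List[Any]) -> bytes:
    """VCI side: encode a goal as a message (JSON is an assumed format)."""
    message = {"type": "goal", "name": name, "args": args}
    return json.dumps(message).encode("utf-8")


def unmarshal_goal(message: bytes) -> Dict[str, Any]:
    """Agent side: decode a message back into a goal description."""
    decoded = json.loads(message.decode("utf-8"))
    if decoded.get("type") != "goal":
        raise ValueError("not a goal message")
    return {"name": decoded["name"], "args": decoded["args"]}


def deliver(message: bytes, agenda: List[Dict[str, Any]]) -> None:
    """Unmarshal a received message and install the goal on the agenda."""
    agenda.append(unmarshal_goal(message))
```

Keeping the encoding behind two small functions means the same goal can be handed over directly when the VCI and the agent run on the same device, without changing the code that produces or consumes goals.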

The Context-Free Command and Control Grammar (CFCG) compiler is the component responsible for constructing and maintaining the CFCGs for speech recognition. The compiler converts the Semantic Knowledge Base (SKB) into an equivalent set of CFCGs and manages the agent's conceptual memory. The compiler communicates with the BE either through the marshallers, if the VCI and the agent operate as two parts of a distributed system, or directly, if they run on the same hardware device. The Behavior Modification module modifies the agent's behaviors on the basis of user instructions.
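As a rough illustration of how a knowledge base might be turned into command and control grammar rules, the sketch below assumes a very simple SKB: a dictionary that maps each concept to its phrases, with references to other concepts written in angle brackets. This representation, the rule syntax, and the function names are hypothetical; the paper does not describe the actual SKB format, and the sketch handles only non-recursive grammars.

```python
from itertools import product
from typing import Dict, List

SKB = Dict[str, List[str]]  # concept -> phrases; "<x>" refers to concept x


def compile_rules(skb: SKB) -> List[str]:
    """Emit one BNF-style rule per concept, sorted by concept name."""
    return [
        f"<{concept}> ::= {' | '.join(phrases)}"
        for concept, phrases in sorted(skb.items())
    ]


def expand(skb: SKB, symbol: str) -> List[str]:
    """List every sentence derivable from a symbol (non-recursive SKBs only)."""
    sentences = []
    for phrase in skb[symbol]:
        choices = []
        for token in phrase.split():
            if token.startswith("<") and token.endswith(">"):
                choices.append(expand(skb, token[1:-1]))  # nested concept
            else:
                choices.append([token])  # literal word
        sentences.extend(" ".join(words) for words in product(*choices))
    return sentences
```

For an SKB such as `{"command": ["open <object>", "delete <object>"], "object": ["file", "message"]}`, `expand(skb, "command")` lists the four utterances the recognizer would need to accept, which makes it easy to check that a grammar matches what the agent can actually do.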

RESULTS

Our experiments focused on evaluating the effectiveness of hands-free speech-based interaction between users and electronic devices with the intelligent voice control architecture. The two electronic devices used in the experiments were a virtual desktop assistant that manages files and email messages and a mobile robot that simulates a wheelchair. Two users interacted with these devices by wearing a headset that consisted of two headphones and a microphone.

The tests targeted four categories of interaction: introspection, understanding accuracy, problem solving, and behavior modification. Introspection is the ability of the system to explain its abilities to the user. Understanding accuracy refers to the percentage of user utterances understood by the system. Problem solving is the percentage of successfully completed tasks. Behavior modification is the ability of the system to modify its behavior on the basis of user instructions.
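The two percentage-based measures can be computed with the same small helper, sketched below. The function name and the counts mentioned afterwards are illustrative only and are not results from the experiments.

```python
def percentage(successes: int, total: int) -> float:
    """Return successes as a percentage of total, validating the counts."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= successes <= total:
        raise ValueError("successes must be between 0 and total")
    return 100.0 * successes / total
```

Under these definitions, understanding accuracy would be `percentage(understood_utterances, all_utterances)` and problem solving would be `percentage(completed_tasks, attempted_tasks)`, where the argument names are placeholders for counts collected during a session.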

DISCUSSION

Analysis of the experiments showed that the proposed architecture allows users to examine the capabilities of electronic devices through introspection and then use that knowledge to solve problems. Thus, the user does not need a sophisticated cognitive model of a given electronic device in order to start using it productively and can get to know the device's capabilities gradually. The experiments also demonstrated that speech recognition is a viable option for interacting with and controlling electronic devices. Consequently, speech recognition can become an integral part of controlling and interacting with various EADL devices (5).

The experiments demonstrated that behavior modification remains a challenge. To some extent, the lower percentages can be explained by the fact that the users did not expect this capability from an electronic device and did not quite know what to do with it.

The results of the project illustrate both the usefulness and current limitations of the intelligent voice control architecture. The architecture allows the users to go beyond Auditory User Interfaces in that the users can examine electronic devices through introspection and use the results of introspection to solve a variety of problems. However, more research is required to improve the behavior modification capabilities of the architecture. It is believed that the system should explain to the user not only what it can do but also how it can modify its behaviors. Future work will investigate a number of approaches to this problem.

REFERENCES

- Mynatt, E.D., & Weber, G. (1994). "Nonvisual Presentation of Graphical User Interfaces: Contrasting Two Approaches," Proceedings of the Conference on Computer-Human Interaction, Boston, MA, pp. 166-172.

- Weber, G. (1993). "Access by Blind People to Interaction Objects in MS Windows," Proceedings of ECART 2, Stockholm, Sweden, pp. 22-28.

- Raman, T.V. (1997). Auditory User Interfaces, Kluwer Academic Publishers, New York.

- Kulyukin, V., & Steele, A. (2002). "Instruction and Action in the Three-Tiered Robot Architecture," Proceedings of the International Symposium on Robotics and Automation, Toluca, Mexico, pp. 151-156.

- Blair, M., & Raymond, S.L.A. (2001). "Assistive Technology for Caregivers and the Elderly," Intermountain Aging Review, 3(1), pp. 10-14.

Vladimir Kulyukin

Department of Computer Science

Utah State University

4205 Old Main Hill

Logan UT 84322-4205

phone: (435) 797-8163

fax: (435) 797-3265

e-mail: vladimir.kulyukin@usu.edu