Kenneth R. Lyons and Sanjay S. Joshi

Department of Mechanical and Aerospace Engineering, University of California, Davis, CA

ABSTRACT

A fundamental problem for individuals with amputations proximal to the elbow is the lack of methods for controlling an upper limb prosthetic. Myoelectric control is limited in flexibility when few residual muscle sites are available, and noninvasive approaches to this problem are needed when surgical techniques such as targeted muscle reinnervation are not suitable. Controlling an upper limb prosthetic with the leg is one such approach. In this case study, we investigate recognition of foot gestures via surface electromyography using methods commonly employed in upper limb gesture recognition research. For comparison with the current standard in gesture recognition, electromyographic sensors recorded muscle activity from the forearm while participants performed gestures with the dominant hand. Then, participants performed an analogous set of foot gestures with sensors on the lower leg. Participants found the mapping between the hand and foot to be intuitive, and offline results show that classification accuracy for the two cases is comparable, motivating further work in applying this idea to upper limb prosthetic control.

INTRODUCTION

For individuals with transradial or more distal amputations, it is often possible to place electromyographic (EMG) sensors on residual muscle sites of the forearm for intuitive control of prosthetic wrist and hand functions (Scheme & Englehart, 2011; Roche et al., 2014). When the amputation is proximal to the elbow, however, the situation is more difficult because of the lack of available muscle sites for control output. Targeted muscle reinnervation (TMR) is the only approach currently offering a truly intuitive control interface for high-level upper limb amputees, whereby nerves from the amputation site are surgically relocated to other muscles of the body, such as those in the chest. EMG sensors can then be used to measure muscle activations of the reinnervated muscles which, to the user, feel like movements of the missing limb. These “gestures” can then be classified for control of prosthetic arm functions (Kuiken et al., 2009).

In this work, we propose a noninvasive alternative to the surgical approach, where the amputee would control an upper limb prosthetic with analogous movements of the lower leg. The idea of controlling a powered upper limb prosthetic in this way is not new and has recently been successfully implemented with inertial measurement unit (IMU) sensors on both feet (Resnik et al., 2014b) for controlling the DEKA prosthetic arm and hand (Resnik et al., 2014a). Both feet are needed to control one prosthetic because the number of functions exceeds the number of control signals provided by one IMU alone; furthermore, not all functions are meant to be intuitive. In our work, we aim to keep all upper limb prosthetic control bound to one leg and to make all leg movements analogous to arm movements in order to minimize the cognitive load and training time required to operate the system. To our knowledge, this is the first application of EMG-based gesture recognition techniques to the leg for this purpose and the first use of surface EMG for recording extrinsic toe flexors and extensors.

In this case study, subjects performed hand gestures with EMG sensors placed on the forearm. Then, sensors were placed on the leg and subjects performed an analogous set of foot gestures while seated. All recordings were taken in a single session for each subject, and the data were analyzed offline. This is only the first step toward control of an upper limb prosthetic via EMG-based recognition of leg gestures; however, the results are promising and motivate future work wherein subjects would control a simulated or actual prosthetic.

Figure 1: The gesture mapping created for this study. Subjects viewed the images of the hand gestures in both the arm and leg recording configurations. Images of the leg are for illustrative purposes only.

BACKGROUND

As a preliminary task for the experiment, a mapping between the wrist/hand and ankle/foot was developed. The first part of this is a gesture mapping, which is intended to be intuitive assuming a body posture similar to that used while typing on a keyboard. The gestures chosen are shown in Figure 1, with analogous pairs indicated by arrows. The upper limb gestures selected represent most of the desirable functionality of a prosthetic wrist and hand, with the thumb extension (TE) gesture acting as a placeholder for any additional hand function such as key grip or fine pinch. The analogous foot gestures were chosen based on the alignment of the degrees of freedom of the wrist and ankle when the hand is placed in front of the body with the palm facing down and the foot is oriented in the standard anatomical position.

To further strengthen the analogy between the leg and the arm, we also identified a set of muscles that have analogous primary actions on the corresponding limb. They are listed in Table 1 and are the muscles targeted by the EMG sensors in our experiment. While it is common in EMG-based gesture recognition research to place a ring of sensors circumferentially around the forearm because amputation limits the available muscle sites (Scheme & Englehart, 2011), the leg is in general not limited by this constraint, so we are able to record directly at the applicable muscle sites.

METHODS

Subjects

The three subjects who participated in this study (two male, one female) were all undergraduate students at UC Davis aged 20–21 and were right-hand dominant. Subjects were informed of and consented to procedures approved by the Institutional Review Board at UC Davis (protocol #251192).

Experimental Protocol

The experiment consisted of a single session lasting approximately two hours. In the first part of the session, EMG sensors were placed on the dominant forearm and wrist while subjects performed the seven gestures shown in the top of Figure 1. Each recording trial consisted of the following sequence: two seconds of rest while viewing the prompted gesture, onset of the gesture, three seconds holding the position, then resting for four seconds before the start of the next trial. All gestures were performed three times per cycle in randomized order. Four cycles were run on the arm with approximately one minute of rest in between.
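The trial and cycle structure described above can be sketched in a few lines of Python. This is a minimal illustration rather than the program used in the study: the gesture labels `G1` to `G7` are placeholders (the actual gestures appear in Figure 1), the fixed seed is arbitrary, and we assume that "randomized order" means all 21 trials of a cycle were shuffled together.

```python
import random

# Placeholder labels for the seven gestures of Figure 1 (hypothetical names).
GESTURES = [f"G{i}" for i in range(1, 8)]
REPS_PER_CYCLE = 3   # each gesture performed three times per cycle
N_CYCLES = 4         # four cycles per limb configuration

# Trial timing in seconds, as described in the protocol.
REST_BEFORE_S, HOLD_S, REST_AFTER_S = 2.0, 3.0, 4.0


def make_cycle(rng):
    """Return one cycle: every gesture REPS_PER_CYCLE times, shuffled."""
    trials = GESTURES * REPS_PER_CYCLE
    rng.shuffle(trials)
    return trials


def make_session(seed=0):
    """Return a list of N_CYCLES independently shuffled cycles."""
    rng = random.Random(seed)  # seeded only to make the sketch reproducible
    return [make_cycle(rng) for _ in range(N_CYCLES)]


session = make_session()
trial_len_s = REST_BEFORE_S + HOLD_S + REST_AFTER_S
trials_per_cycle = len(session[0])
cycle_len_s = trials_per_cycle * trial_len_s
print(trials_per_cycle, trial_len_s, cycle_len_s)  # 21 9.0 189.0
```

Under these assumptions each cycle contains 21 trials of nominally 9 seconds each, or roughly three minutes of recording per cycle, not counting any time the subject takes to respond to the prompt.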

In the second part of the session, the sensors were removed from the arm and placed on the ipsilateral leg (Table 1). Subjects were shown the images of the hand gestures in Figure 1 and were asked to perform analogous foot movements. Before beginning the recording trials, any discrepancies between the subject’s interpretation of the analogous gestures and the gestures in the lower part of Figure 1 were verbally corrected (usually little or no correction was needed), and it was ensured that subjects understood the mapping before proceeding. This process typically lasted about two minutes, and the recording cycles for the foot gestures began immediately afterward. The same trial/cycle protocol was applied to the foot gesture portion of the session.

Electrode Placement

Twelve disposable Ag/AgCl center snap electrodes (ConMed 1620) were placed in bipolar pairs on the muscles listed in Table 1 with approximately 2.5 cm inter-electrode distance. An additional electrode was placed on the olecranon in the arm recording setup and on the medial malleolus in the leg setup, serving as a ground connection. For the muscles with sensor location recommendations by SENIAM (Hermens et al., 1999) (i.e. gastrocnemius lateralis, tibialis anterior, peroneus longus), the electrodes were positioned as recommended. For the other muscles, palpation was used to locate the muscle belly, and a movable “EMG probe” with dry electrodes was used to identify a suitable recording site by asking the subject to perform various movements while monitoring the probe signal. For most of the muscles, this procedure was straightforward; however, the extrinsic toe flexors/extensors were not as simple to isolate because they lie beneath other muscles. A compromise was found by incrementally moving the probe distally while the subject performed discriminatory movements (e.g. toe extension and dorsiflexion), trading off between crosstalk from the larger muscles associated with ankle movements and small signal amplitude from toe movements. After marking each recording site, the electrodes were placed and all signals were checked for quality before proceeding with the recording trials.

Data Collection and Analysis

Table 1: Muscles targeted by the EMG sensors on the arm and leg, paired by analogous primary action.

| Arm muscle | Arm primary action | Leg muscle | Leg primary action |
|---|---|---|---|
| extensor carpi radialis longus | wrist extension | tibialis anterior | dorsiflexion |
| pronator teres | forearm pronation | peroneus longus | foot eversion |
| flexor carpi radialis | wrist flexion | gastrocnemius lateralis | plantarflexion |
| extensor pollicis longus | thumb extension | extensor hallucis longus | hallux extension |
| extensor digitorum | finger extension | extensor digitorum longus | lesser toe extension |
| flexor digitorum superficialis | finger flexion | flexor digitorum longus | lesser toe flexion |

A custom graphical user interface program was created for prompting subjects to perform the gestures and collecting the raw EMG waveform data. At the start of each recording trial, an image of a hand gesture from Figure 1 was shown, and a progress bar prompted the participant for gesture onset and offset. Six EMG channels were amplified by Motion Labs Systems Y03 differential EMG amplifiers (×300 gain, 100 dB CMRR, −3 dB bandwidth from 15 Hz to 2 kHz), sampled at 8 kHz by a Measurement Computing USB-1608G data acquisition system (16-bit), and recorded directly to disk by the user interface program. Subsequent analysis was performed offline.

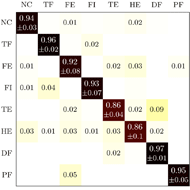

Signal conditioning consisted of filtering with a fourth-order digital Butterworth bandpass filter (10–450 Hz passband) followed by downsampling by a factor of 4 to 2 kHz. The filtered and downsampled signals were segmented into 150 ms windows with 50 ms overlap. The windows from 1–1.5 seconds of each recording (just before gesture onset) were used as instances of the rest class, and the windows from 2–4 seconds (just after gesture onset and before gesture offset) were used as instances of the class corresponding to the gesture image prompt shown to the subject during that trial. Four time-domain features introduced by Hudgins et al. (1993) were extracted from each of the six channels to form a 24-dimensional feature vector: mean absolute value (MAV), waveform length (WL), number of slope sign changes (SSC), and number of zero crossings (ZC). The linear discriminant analysis (LDA) classifier was chosen for its simplicity, computational efficiency, and status as a standard against which other classification methods are compared (Lorrain et al., 2011). For both the arm and leg configurations, classification accuracy was determined by a cross-validation scheme in which two cycles were used for training the classifier and the remaining two were held out for testing. Every possible combination of training cycles was tested in this way, and the average classification accuracy was computed as the total number of correct classifications over all combinations divided by the total number of testing instances.
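To make the windowing and feature extraction concrete, the following standard-library Python sketch segments one already filtered and downsampled trial and computes the four time-domain features per channel. It is an illustration under stated assumptions, not the analysis code used in the study: the function names are hypothetical, the feature ordering within the vector is arbitrary, the noise thresholds for SSC and ZC default to zero because the text does not report any, and "50 ms overlap" is taken to mean a 100 ms hop between consecutive 150 ms windows. The input signal is synthetic.

```python
# Sampling parameters taken from the text; everything else is illustrative.
FS_HZ = 2000                      # 8 kHz downsampled by a factor of 4
WIN = 150 * FS_HZ // 1000         # 150 ms window -> 300 samples
STEP = WIN - 50 * FS_HZ // 1000   # 50 ms overlap -> 200-sample hop


def window_bounds(start_s, stop_s):
    """Sample index pairs of all full windows inside [start_s, stop_s)."""
    start, stop = round(start_s * FS_HZ), round(stop_s * FS_HZ)
    return [(i, i + WIN) for i in range(start, stop - WIN + 1, STEP)]


def mav(x):
    """Mean absolute value."""
    return sum(abs(v) for v in x) / len(x)


def wl(x):
    """Waveform length: cumulative absolute sample-to-sample change."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))


def zc(x, thresh=0.0):
    """Zero crossings; thresh is an assumed noise threshold."""
    return sum(1 for a, b in zip(x, x[1:])
               if a * b < 0 and abs(a - b) > thresh)


def ssc(x, thresh=0.0):
    """Slope sign changes (simplified test); thresh is an assumed threshold."""
    return sum(1 for a, b, c in zip(x, x[1:], x[2:])
               if (b - a) * (b - c) > thresh)


def feature_vector(channels, lo, hi):
    """Concatenate the four features of every channel for one window."""
    vec = []
    for ch in channels:
        seg = ch[lo:hi]
        vec += [mav(seg), wl(seg), float(ssc(seg)), float(zc(seg))]
    return vec


# Synthetic 6-channel, 9 s trial (alternating-sign samples), for demonstration.
n = 9 * FS_HZ
trial = [[((-1) ** i) * (c + 1) * 1e-3 for i in range(n)] for c in range(6)]

rest_windows = window_bounds(1.0, 1.5)
gesture_windows = window_bounds(2.0, 4.0)
features = [feature_vector(trial, lo, hi) for lo, hi in gesture_windows]
print(len(rest_windows), len(gesture_windows), len(features[0]))  # 4 19 24
```

With this interpretation of the overlap, each trial would contribute 4 rest windows and 19 gesture windows; a different reading of the overlap would change these counts, so they should not be taken as the numbers used in the study.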

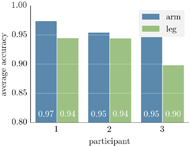
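The cross-validation scheme described in the data analysis (train on two of the four cycles, test on the other two, repeat for every combination, then pool the counts) can be sketched as follows. With four cycles there are six train/test combinations. The function `pooled_cv_accuracy` and the nearest-class-mean classifier are hypothetical stand-ins chosen to keep the example dependency-free; the study itself used LDA, which could be substituted through the `fit` and `predict` arguments. The toy data are synthetic.

```python
from itertools import combinations


def pooled_cv_accuracy(cycles, fit, predict, n_train=2):
    """Pooled accuracy over every choice of n_train training cycles.

    cycles: list of (feature_vectors, labels) pairs, one per recording cycle.
    """
    correct = total = 0
    indices = range(len(cycles))
    for train_idx in combinations(indices, n_train):
        X_tr = [x for i in train_idx for x in cycles[i][0]]
        y_tr = [y for i in train_idx for y in cycles[i][1]]
        model = fit(X_tr, y_tr)
        for i in indices:
            if i in train_idx:
                continue  # the remaining cycles are used for testing
            X_te, y_te = cycles[i]
            preds = predict(model, X_te)
            correct += sum(p == y for p, y in zip(preds, y_te))
            total += len(y_te)
    return correct / total


def fit_nearest_mean(X, y):
    """Stand-in classifier (not LDA): store the mean vector of each class."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for j, v in enumerate(x):
            acc[j] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}


def predict_nearest_mean(model, X):
    """Assign each vector to the class with the closest stored mean."""
    def dist2(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))
    return [min(model, key=lambda lab: dist2(model[lab], x)) for x in X]


# Toy data: four identical cycles with two well-separated classes.
cycle = ([[0.0], [0.1], [1.0], [1.1]], ["rest", "rest", "G1", "G1"])
cycles = [cycle] * 4

n_splits = len(list(combinations(range(4), 2)))
accuracy = pooled_cv_accuracy(cycles, fit_nearest_mean, predict_nearest_mean)
print(n_splits, accuracy)  # 6 1.0
```

Pooling the correct and total counts before dividing, rather than averaging six per-split accuracies, matches the description in the text and weights every test instance equally.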

RESULTS

DISCUSSION

The results presented here demonstrate the feasibility of recognizing gestures of the lower leg and foot via surface electromyography. Participants found the mapping between the hand and foot intuitive and achieved classification accuracies that could be sufficient for prosthetic control; however, further work is needed to test the usability of such a system explicitly. The next step is to develop an online system wherein the user views a prosthetic arm and receives visual feedback as to the accuracy of their movements. It has been reported that there is considerable variation in online task performance when offline classification accuracy is at or above 90% (Ortiz-Catalan et al., 2013), indicating that the small difference between hand and foot gesture classification accuracy reported here could be overcome by providing feedback to the subject. Furthermore, the foot and toe movements described in this paper are not performed as frequently as their hand and finger counterparts in daily life. Hence, with additional training and online feedback while performing the gestures, a user might be able to execute a task in the leg configuration just as well as with the arm setup.

While there are practical issues involved in using a foot gesture system as a basis for upper limb prosthetic control, Resnik et al. address many of these concerns and show that amputees are generally willing to use movements of the foot for control of a prosthetic arm and hand (Resnik et al., 2014b). One advantage of EMG over inertial measurement sensing is the possibility of using isometric contractions of the leg muscles to control the prosthetic. In addition, control outputs unique to EMG could be combined with IMU-based techniques to achieve increased capabilities for overall control.

REFERENCES

Hermens, H. J., Freriks, B., Merletti, R., Stegeman, D., Blok, J., Rau, G., … & Hägg, G. (1999). European Recommendations for Surface Electromyography. Roessingh Research and Development, Enschede.

Hudgins, B., Parker, P., & Scott, R. N. (1993). A New Strategy for Multifunction Myoelectric Control. IEEE Transactions on Biomedical Engineering, 40(1), 82–94. doi:10.1109/10.204774

Kuiken, T. A., Li, G., Lock, B. A., Lipschutz, R. D., Miller, L. A., Stubblefield, K. A., & Englehart, K. B. (2009). Targeted Muscle Reinnervation for Real-time Myoelectric Control of Multifunction Artificial Limbs. JAMA, 301(6), 619–628.

Lorrain, T., Jiang, N., & Farina, D. (2011). Influence of the training set on the accuracy of surface EMG classification in dynamic contractions for the control of multifunction prostheses. Journal of Neuroengineering and Rehabilitation, 8(25). doi:10.1186/1743-0003-8-25

Ortiz-Catalan, M., Brånemark, R., & Håkansson, B. (2013). BioPatRec: A modular research platform for the control of artificial limbs based on pattern recognition algorithms. Source Code for Biology and Medicine, 8(11).

Resnik, L., Klinger, S. L., & Etter, K. (2014a). The DEKA Arm: Its features, functionality, and evolution during the Veterans Affairs Study to optimize the DEKA Arm. Prosthetics and Orthotics International, 38(6), 492–504. doi:10.1177/0309364613506913

Resnik, L., Klinger, S. L., Etter, K., & Fantini, C. (2014b). Controlling a multi-degree of freedom upper limb prosthesis using foot controls: user experience. Disability and Rehabilitation: Assistive Technology, 9(4), 318–329. doi:10.3109/17483107.2013.822024

Roche, A. D., Rehbaum, H., Farina, D., & Aszmann, O. C. (2014). Prosthetic Myoelectric Control Strategies: A Clinical Perspective. Current Surgery Reports, 2(3), 1–11. doi:10.1007/s40137-013-0044-8

Scheme, E., & Englehart, K. (2011). Electromyogram pattern recognition for control of powered upper-limb prostheses: State of the art and challenges for clinical use. Journal of Rehabilitation Research & Development, 48(6), 643–660.

ACKNOWLEDGEMENT

The authors would like to thank the subjects who participated in this study.