Assistive Robotic Manipulation Performance Evaluation between Manual and Semi-Autonomous Control

Hyun W. Ka, PhD1,2; Cheng-Shiu Chung, PhD1,2; Dan Ding, PhD1,2; Khara James, MS1; Rory Cooper, PhD1,2

1Human Engineering Research Laboratories, Department of Veterans Affairs, Pittsburgh, PA;

2Department of Rehabilitation Science and Technology, University of Pittsburgh, Pittsburgh, PA

ABSTRACT

We have developed a 3D vision-based semi-autonomous assistive robot arm control method, called AROMA-V, to provide intelligent robotic manipulation assistance to individuals with impaired motor control. A working AROMA-V prototype was built on a JACO robotic manipulator combined with a low-cost, short-range 3D depth-sensing camera. To perform a manipulation task with AROMA-V, the user starts operating the robot arm with an available manual control method (e.g., joystick, touch pad, or voice recognition). When it detects an object within a set range during operation, AROMA-V automatically stops the robotic arm and, based on the identified object characteristics, announces the possible manipulation options through audible text output. It then waits until the user selects an option by saying a voice command. Once the user provides this feedback, AROMA-V drives the robotic arm autonomously until the given command is completed. In lab trials with five able-bodied subjects, AROMA-V demonstrated the potential to enable users who have difficulty using a conventional control interface. For relatively simple tasks that do not require switching between command modes (e.g., turning a door handle, operating a light switch, and pushing an elevator switch), AROMA-V was slower than manual control. For relatively complex tasks that require fine motion control (e.g., knob-turning, ball-picking, and bottle-grasping), however, AROMA-V was significantly faster than manual control.

INTRODUCTION

People with severe physical disabilities have found it difficult or impossible to independently use assistive robotic manipulators (ARMs) due to their lack of access to conventional control methods and the cognitive and physical workload associated with operating ARMs (Maheu, Frappier, Archambault, & Routhier, 2011). To address this issue, several researchers have investigated vision-based autonomous control (Chung, Wang, & Cooper, 2013; Driessen, Kate, Liefhebber, Versluis, & van Woerden, 2005; Jiang, Zhang, & Wachs, 2014; Kim, Lovelett, & Behal, 2009; Laffont et al., 2009; Srinivasa et al., 2010; Tanaka, Sumi, & Matsumoto, 2010; Tijsma, Liefhebber, & Herder, 2005; Tsui, Kim, Behal, Kontak, & Yanco, 2011).

Some researchers attached an eye-in-hand camera to the robot's end-effector or wrist to guide the robot toward an object of interest (Driessen et al., 2005; Kim et al., 2009; Tanaka et al., 2010; Tijsma et al., 2005); however, because this approach must update object locations continuously until the end-effector acquires the target object, the computational cost of three-dimensional (3D) object pose estimation, path finding, and motion planning tends to be high. Other researchers have mounted a camera at a fixed position on the robot base or shoulder (Chung et al., 2013; Corke, 1996). This approach has the advantage of finding a path and grasping plan even when the object is occluded from the starting location or folding position (Srinivasa et al., 2010); however, it requires advance knowledge of the target object and its surroundings to localize the target object and plan a trajectory (Corke, 1996). Chung and colleagues evaluated an assistive robotic manipulator that operated with a high-resolution webcam mounted on the robot shoulder (Chung et al., 2013). They measured the task completion time and success rate for a drinking task consisting of several subtasks, including picking up the drink from a start location and conveying it to the proximity of the user's mouth. The average task completion time for picking up a soda can from the table was 12.55 (±2.72) seconds, including an average object detection time of 0.45 (±0.12) seconds. The success rate of the pick-up task was 71.0% (44/62).

Other researchers have combined these two approaches to provide more reliable and robust control (Srinivasa et al., 2010; Tsui et al., 2011). Tsui et al. developed a vision-based autonomous system for a wheelchair-mounted robotic manipulator using two stereo cameras, one mounted over the shoulder on a fixed post and one mounted on the gripper. When the user indicated the object of interest by pointing to it on a touch screen, the autonomous controller completed the rest of the task by reaching toward the object, grasping it, and bringing it back to the user (Tsui et al., 2011). However, the combined approach can significantly increase implementation cost and system overhead because of its complex image processing. A 3D depth-sensing camera could be a viable solution, as it can significantly reduce the computational cost of 3D object pose estimation compared with conventional approaches that require several images of the object in various poses (e.g., front side, back side, and all possible 3D rotations). Furthermore, a 3D depth-sensing camera is less dependent on ambient lighting than conventional image processing, which requires images of an object under different lighting conditions and sources to make the algorithm robust to diverse lighting.
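As a minimal sketch of why depth data simplify localization, assuming a standard pinhole camera model, each pixel with a depth reading can be back-projected directly into a 3D camera-frame point, so an object's position can be estimated from a single frame by averaging the points of its segmented pixels. The intrinsic values and function names below are hypothetical (the camera's calibration is not reported here), and this illustrates the general idea rather than the pose-estimation pipeline used in AROMA-V, which the text does not describe.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (hypothetical; a real sensor reports its own)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def deproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Point3:
    """Back-project pixel (u, v) with metric depth to a camera-frame point."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def object_centroid(pixels: Iterable[Tuple[float, float, float]], k: Intrinsics) -> Point3:
    """Mean 3D position of an object's segmented pixels given as (u, v, depth_m).

    Pixels with depth <= 0 are treated as missing readings and skipped.
    """
    pts: List[Point3] = [deproject(u, v, d, k) for u, v, d in pixels if d > 0]
    if not pts:
        raise ValueError("no valid depth readings for this object")
    n = len(pts)
    return (
        sum(p[0] for p in pts) / n,
        sum(p[1] for p in pts) / n,
        sum(p[2] for p in pts) / n,
    )
```

Averaging gives only a position estimate; recovering orientation would need further steps (for example, fitting a plane or principal axes to the points), which this sketch omits.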

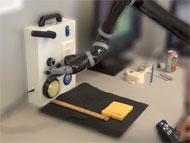

In this study, investigators developed a 3D vision-based semi-autonomous assistive robot arm control method (AROMA-V) that enables individuals with impaired motor control to more efficiently operate ARMs. A working prototype AROMA-V was implemented based on one of the most popular assistive robotic manipulators, the JACO manufactured by Kinova Technology (Montreal, QC, Canada). JACO was combined with a low-cost, short-range 3D depth-sensing camera (Senz3D manufactured by Creative Labs, Inc., Milpitas, CA) mounted on the robot base, as shown in Figure 1.
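Because the camera is mounted on the robot base rather than on the moving arm, a point measured in the camera frame can be expressed in the arm's base frame with a single fixed homogeneous transform that is calibrated once. The sketch below assumes, purely for illustration, that the two frames differ only by a rotation about the vertical axis plus a translation; the function names and mounting values are hypothetical, and a real mount would generally need a full 3D rotation.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def make_transform(yaw_rad: float, translation: Point3) -> List[List[float]]:
    """4x4 homogeneous transform: rotate about z by yaw, then translate (metres)."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def camera_to_base(p_cam: Point3, t_base_cam: Sequence[Sequence[float]]) -> Point3:
    """Map a camera-frame point into the robot base frame with a fixed transform."""
    v = (p_cam[0], p_cam[1], p_cam[2], 1.0)  # homogeneous coordinates
    out = [sum(t_base_cam[row][col] * v[col] for col in range(4)) for row in range(3)]
    return (out[0], out[1], out[2])
```

Since the mount does not move, the transform is computed once and reused for every detection, which is one practical advantage of a base-mounted camera over an eye-in-hand one.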

METHODS

Algorithm Development

To perform a robotic manipulation task, the user starts operating the JACO with an available manual control method (e.g., joystick, touch pad, or voice recognition). When it detects an object within a set range during operation, AROMA-V automatically stops the robotic arm and, based on the identified object characteristics, announces the possible manipulation options through audible text output (e.g., “A light switch is detected. What do you want me to do? You can say switch on or switch off or do nothing”). It then waits until the user selects an option by saying a voice command. Once the user provides this feedback, AROMA-V drives the robotic arm autonomously until the given command is completed. We chose voice recognition for controlling AROMA-V because it not only provides completely hands-free operation but also helps users maintain a better working posture and allows them to work in postures that would otherwise be ineffective for operating an assistive robotic manipulator (e.g., reclined in a chair or bed).
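The interaction cycle described above can be sketched as a small state machine with three modes: manual driving, waiting for a voice command, and autonomous execution. This is a hypothetical illustration, not the AROMA-V implementation: the class and method names, the 0.3 m trigger range, the option table, and the choice to return to manual mode after a task completes or after "do nothing" are all assumptions. Arm motion and speech output are represented as log strings.

```python
from enum import Enum, auto
from typing import Dict, List, Optional


class Mode(Enum):
    MANUAL = auto()            # user drives the arm with joystick, touch pad, etc.
    AWAITING_COMMAND = auto()  # arm stopped, options announced, waiting for voice
    AUTONOMOUS = auto()        # controller executes the selected command


# Hypothetical option table keyed by detected object type.
OPTIONS: Dict[str, List[str]] = {
    "light switch": ["switch on", "switch off", "do nothing"],
}


class SemiAutonomousController:
    """Sketch of the manual -> prompt -> autonomous cycle (assumed behaviour)."""

    def __init__(self, trigger_range_m: float = 0.3) -> None:
        self.trigger_range_m = trigger_range_m  # hypothetical "set range"
        self.mode = Mode.MANUAL
        self.target: Optional[str] = None
        self.log: List[str] = []

    def on_detection(self, obj_type: str, distance_m: float) -> None:
        # Interrupt manual driving only for known objects inside the set range.
        if (self.mode is Mode.MANUAL and obj_type in OPTIONS
                and distance_m <= self.trigger_range_m):
            self.mode = Mode.AWAITING_COMMAND
            self.target = obj_type
            self.log.append("stop arm")
            choices = " or ".join(OPTIONS[obj_type])
            self.log.append(f"say: A {obj_type} is detected. You can say {choices}.")

    def on_voice(self, command: str) -> None:
        if self.mode is not Mode.AWAITING_COMMAND or self.target is None:
            return  # voice input is ignored outside the prompt state
        if command not in OPTIONS[self.target]:
            return  # unrecognized option: keep waiting
        if command == "do nothing":
            self.mode, self.target = Mode.MANUAL, None
            return
        self.mode = Mode.AUTONOMOUS
        self.log.append(f"execute: {command} {self.target}")

    def on_task_complete(self) -> None:
        if self.mode is Mode.AUTONOMOUS:
            self.mode, self.target = Mode.MANUAL, None  # hand control back
```

A deployed controller would also need to avoid re-prompting for an object the user has just dismissed while it is still within range; the sketch leaves this out.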

We conducted a small-scale empirical evaluation to determine the efficacy of AROMA-V and users' satisfaction with it.

Hypotheses

The following 2 hypotheses were tested:

- Performance time for robotic manipulation tasks would be different between the AROMA-V and the manual control method;

- Users' subjective workload would be different between the AROMA-V and the manual control method.

Subjects

Five able-bodied subjects (4 males and 1 female; aged 22 to 28 years) participated in the experiments. The inclusion criteria were that participants (1) were over 18 years of age, (2) had speech clear enough to operate a voice control, and (3) had normal vision to perform the manipulation tasks. Written informed consent was obtained from all participants in accordance with the Institutional Review Board at the University of Pittsburgh.

Procedures

Data Analysis

RESULTS

Task Completion Time

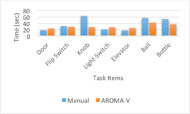

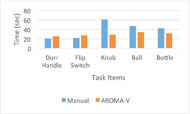

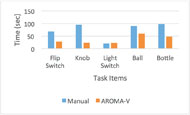

The task completion times for different manipulation tasks are plotted in Figure 3.

Perceived Workload

Users' perceived workload for the two control methods is reported in Table 2.

Table 2. Perceived workload by control method

| Workload Scale | Manual Control | AROMA-V | Sig. |
|---|---|---|---|
| Mental Demand | 10.0 (±4.9) | 7.6 (±5.8) | .5 |
| Physical Demand | 2.6 (±1.7) | 2.0 (±1.7) | .5 |
| Temporal Demand | 5.6 (±2.9) | 3.6 (±3.7) | .5 |
| Performance | 4.2 (±1.3) | 4.4 (±3.9) | .99 |
| Effort | 6.6 (±1.1) | 5.4 (±4.8) | .69 |
| Frustration | 2.8 (±1.8) | 3.6 (±3.2) | .5 |

As shown in Table 2, no significant difference in perceived workload between the two control methods was detected on any of the six scales across all manipulation tasks.

DISCUSSION

The results above indicate that AROMA-V has the potential to enable users who have difficulty using a conventional control interface to operate an assistive robotic manipulator. In terms of task completion time, AROMA-V was slower than manual control for the relatively simple tasks (e.g., door handle, light switch, and elevator switch) that do not require switching between command modes (translational, rotational, and finger modes). For the relatively complex tasks (e.g., knob-turning, ball-picking, and bottle-grasping), however, which require fine motion control and therefore frequent command-mode changes, AROMA-V was significantly faster than manual control. This suggests that AROMA-V could serve as an alternative control method that helps individuals with impaired motor control operate ARMs more efficiently by facilitating fine motion control. Furthermore, because AROMA-V was developed on the Windows operating system, it should be easier to integrate new and existing alternative input devices without developing additional driver software, which may also increase the likelihood of adoption by users and clinical professionals.

However, this study has several limitations. First, the given tasks did not directly represent the real situations that people encounter in day-to-day object manipulation. In particular, the current algorithm assumes that there is no obstacle between the manipulator and the target object, and the objects used in the experiments were simply shaped and had smooth surfaces. Second, data were collected with unfamiliar equipment in an unfamiliar environment where participants' performance was being observed by the investigators. Third, performance may have been affected by fatigue or boredom due to the simple and repetitive nature of the tasks. Most importantly, because these findings were drawn from a small number of able-bodied participants, data must also be collected from users who have physical disabilities.

REFERENCES

Corke, P. I. (1996). Visual control of robots: High-performance visual servoing. Taunton, UK: Research Studies Press.

Driessen, B., Kate, T. T., Liefhebber, F., Versluis, A., & van Woerden, J. (2005). Collaborative control of the manus manipulator. Universal Access in the Information Society, 4(2), 165-173.

Hart, S. G. (2006). NASA-Task Load Index (NASA-TLX); 20 years later. Paper presented at the Human Factors and Ergonomics Society Annual Meeting.

Jiang, H., Zhang, T., & Wachs, J. P. (2014). Autonomous Performance of Multistep Activities with a Wheelchair Mounted Robotic Manipulator Using Body Dependent Positioning.

Kim, D.-J., Lovelett, R., & Behal, A. (2009). Eye-in-hand stereo visual servoing of an assistive robot arm in unstructured environments. Paper presented at the 2009 IEEE International Conference on Robotics and Automation (ICRA '09).

Laffont, I., Biard, N., Chalubert, G., Delahoche, L., Marhic, B., Boyer, F. C., & Leroux, C. (2009). Evaluation of a graphic interface to control a robotic grasping arm: a multicenter study. Archives of Physical Medicine and Rehabilitation, 90(10), 1740-1748.

Maheu, V., Frappier, J., Archambault, P., & Routhier, F. (2011). Evaluation of the JACO robotic arm: Clinico-economic study for powered wheelchair users with upper-extremity disabilities. Paper presented at the 2011 IEEE International Conference on Rehabilitation Robotics (ICORR).

Srinivasa, S. S., Ferguson, D., Helfrich, C. J., Berenson, D., Collet, A., Diankov, R., . . . Weghe, M. V. (2010). HERB: a home exploring robotic butler. Autonomous Robots, 28(1), 5-20.

Tanaka, H., Sumi, Y., & Matsumoto, Y. (2010). Assistive robotic arm autonomously bringing a cup to the mouth by face recognition. Paper presented at the 2010 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO).

Tijsma, H. A., Liefhebber, F., & Herder, J. (2005). Evaluation of new user interface features for the manus robot arm. Paper presented at the 9th International Conference on Rehabilitation Robotics (ICORR 2005).

Tsui, K. M., Kim, D.-J., Behal, A., Kontak, D., & Yanco, H. A. (2011). “I want that”: Human-in-the-loop control of a wheelchair-mounted robotic arm. Applied Bionics and Biomechanics, 8(1), 127-147.

Acknowledgements

This work is supported by the Craig H. Neilsen Foundation and by resources and facilities at the Human Engineering Research Laboratories (HERL), VA Pittsburgh Healthcare System. This material does not represent the views of the Department of Veterans Affairs or the United States Government.