Madeline Blankenship, Erin Radcliffe, Cathy Bodine, Ph.D., CCC-SLP

University of Colorado Denver | Anschutz Medical Campus, Aurora, CO 80045

INTRODUCTION

Cerebral palsy (CP) is the most common pediatric neurodevelopmental disability, affecting 1 in 500 live births in the US and nearly 17 million people globally [1]–[4]. Other pediatric neuromotor disorders include muscular dystrophy, with a prevalence of 19.8 to 25.1 per 100,000 people worldwide [5], and spina bifida, which affects 1 in every 2,758 births in the US each year [6]. Traumatic brain injury (TBI) and childhood stroke are other common causes of neuromotor impairment requiring rehabilitation [7]. Many of these patients experience upper limb (UL) impairments, such as reduced muscle strength, movement speed, dexterity, and coordination [7]. Because activities of daily living (ADLs) rely heavily on UL function, UL impairment is considered the main factor contributing to decreased activity and participation [8], [9].

Socially assistive robots (SARs) lie at the intersection of socially interactive robots (SIRs), which engage purely in social interaction, and assistive robots (ARs), which compensate for missing function [10]. As such, SARs aim to create close and affective human-robot interactions designed to motivate, train, supervise, educate, or facilitate the rehabilitation process [11], [12]. While most SAR research has involved children with autism, older adults with dementia, and adults with stroke, recent literature points toward the use of SARs to engage children with neuromotor dysfunction in rehabilitation programs [11], [13].

Concurrent work by the authors investigated the needs of end-users and stakeholders when designing a SAR for the UL rehabilitation of children with neuromotor dysfunction (publication pending). The resulting use case encapsulates traditionally recommended occupational therapy (OT) goals of ipsilateral (forward) reach, grasp, and forearm supination/pronation, each of which is a functional movement required for ADLs. To address these standard therapy goals, the child-robot interaction focuses on the timing and coordination of reaching movements and on hand forces during grasp and release [14].

Therefore, our goal was to develop, in an 8-week design sprint, a first-iteration, minimally viable SAR that meets the technical and user requirements of the UL rehabilitation use case created in collaboration with pediatric therapists for children with neuromotor dysfunction.

DESIGN AND PROTOTYPING

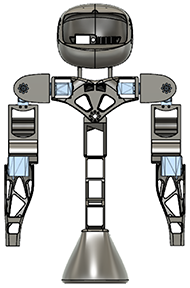

Design specifications were determined using a matrix, seen in Table 1, detailing the relationship between user needs and technical requirements determined from literature and expert testimony. We performed a stepwise iterative design method to produce a prototype inspired by the open-source Poppy Project [15] and "Reachy" robot [16], as well as the previous Center for Inclusive Design and Engineering (CIDE) lab-developed robot, "WABBS" [17].

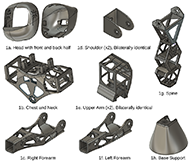

The SAR mechanical design consists of nine distinct components that either dynamically interface with MG995 servo motors or statically mount to one another. Each component was designed in Autodesk Fusion 360, a computer-aided design (CAD) program, and can be 3D printed in six to twenty-eight hours, depending on the performance of the available 3D printer, the filament's material properties, and the chosen print parameters.

The SAR head (fig. 1a) consists of two components: a front (face) half and a back (neck-mounting) half. The head components are modified versions of the Poppy Project open-source CAD files [15]. Unnecessary artifacts, such as internal servo motor and rotational neck mounting components, were removed in our design adaptation. Face screen mounting components were retained to allow installation of a Raspberry Pi Manga Screen, which displays the SAR's dynamic facial expressions. Static neck mounting components, designed to interface with a custom-designed neck and chest component, were added to the base of the modified head CAD designs.

The SAR chest (fig. 1b) incorporates an angled neck that statically mounts to the SAR head. Based on the Poppy Project's open-source chest concept [15], our chest was designed so that an MG995 servo motor statically mounts within each lateral cavity of the printed chest. The horn of each of the two servo motors dynamically mounts to a separate shoulder component on the right and left sides, enabling bilateral shoulder flexion and extension. To minimize unnecessary complexity, the SAR shoulders (fig. 1d) were designed to be bilaterally identical, so that one CAD design can be printed twice to provide both a right and a left motor-mounting interface, enabling two degrees of freedom: flexion/extension and abduction/adduction. The motor interface between the chest (fig. 1b) and the shoulder (fig. 1d) enables flexion/extension, and the motor interface between the shoulder (fig. 1d) and the upper arm (fig. 1e) enables abduction/adduction.

The upper arm (fig. 1e) statically mounts to MG995 servo motors on both its proximal and distal sides to enable shoulder abduction/adduction and elbow flexion/extension, respectively. To minimize unnecessary complexity, the upper arm was also designed to be bilaterally identical, so that one design can be printed twice to provide both a right and a left upper arm. A distal MG995 servo motor statically mounts to each upper arm component and dynamically mounts to each forearm component (fig. 1c and 1f). The right and left forearms were designed separately to maximize the flexion/extension range of motion at each elbow joint across various orientations.
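The three rotational joints per arm (shoulder flexion/extension at the chest motor, shoulder abduction/adduction at the shoulder motor, and elbow flexion/extension at the distal upper-arm motor) form a short serial chain, so the robot's hand position for a given set of motor angles can be sketched with elementary forward kinematics. The sketch below is illustrative only: the frame convention, the assumption that the elbow axis is parallel to the shoulder flexion axis at rest, and the 0.10 m link lengths are hypothetical placeholders, not measured SAR dimensions.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]

# Assumed frame: x points forward from the chest, y points laterally outward
# from the modeled arm, z points up; all angles are 0 with the arm hanging down.

def rot_flexion(theta: float) -> Mat3:
    """Flexion/extension about the lateral (y) axis.
    Positive theta swings a downward-pointing link forward (+x)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]

def rot_abduction(theta: float) -> Mat3:
    """Abduction/adduction about the forward (x) axis.
    Positive theta swings a downward-pointing link outward (+y)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def apply(m: Mat3, v: Sequence[float]) -> Vec3:
    x, y, z = (sum(m[i][k] * v[k] for k in range(3)) for i in range(3))
    return (x, y, z)

def hand_position(shoulder_flex: float, shoulder_abd: float, elbow_flex: float,
                  upper_len: float = 0.10, fore_len: float = 0.10) -> Vec3:
    """Hand position (m) relative to the shoulder; angles in radians.
    Default link lengths are hypothetical placeholders."""
    # Chain order mirrors the build: chest motor (flexion) -> shoulder motor
    # (abduction) -> upper arm -> elbow motor -> forearm.
    shoulder = matmul(rot_flexion(shoulder_flex), rot_abduction(shoulder_abd))
    forearm = apply(rot_flexion(elbow_flex), (0.0, 0.0, -fore_len))
    in_upper_arm_frame = (forearm[0], forearm[1], forearm[2] - upper_len)
    return apply(shoulder, in_upper_arm_frame)
```

For example, `hand_position(math.radians(90), 0.0, 0.0)` is approximately `(0.2, 0.0, 0.0)`: 90° of shoulder flexion with a straight elbow points the arm directly forward, the posture relevant to a forward-reach demonstration.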

To optimize stability and structural integrity, the SAR spine (fig. 1g) was designed using right-angle bracketing and crane design motifs common in the construction and architecture industries. The SAR base support (fig. 1h) is a modified Poppy Project open-source CAD file [15]. As with the SAR head, unnecessary artifacts, such as supplementary mounting structures and a large internal handle, were removed in our adaptation of the base support. The SAR skeletal structure can be assembled in approximately two hours by unskilled builders following the internally developed instruction manual.

The MG995 servo motors are hardwired to a PWM servo driver (Adafruit PCA9685 16-Channel Servo Driver), which is controlled by an Arduino Uno Rev3 microcontroller board. Because the PWM servo driver generates the servo signals itself, multiple servos can run simultaneously without burdening the Arduino's processor, and the driver mitigates the challenges of power consumption and heat generation. Sensors, including cameras for visual recognition, microphones for sound recognition, and pressure sensors for touch activation, were embedded in the skeletal structure. Under a Wizard of Oz prototyping model [23], the Arduino Uno detects the conditions identified by this sensor network and executes the motor commands issued by a human teleoperator.
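With a PCA9685 board, the host microcontroller only writes a PWM update rate and per-channel on/off counts over I2C; the board then produces the servo pulses on its own. The arithmetic behind those register values is sketched below in Python for readability (Arduino firmware itself is written in C/C++, and the authors' firmware is not described here). The 50 Hz update rate, the 1000 to 2000 µs pulse range, and the 180° span are common hobby-servo assumptions, not values reported for the MG995 units used, and should be calibrated per motor.

```python
# Nominal PCA9685 constants from its datasheet; real oscillators drift,
# so boards are often calibrated against observed servo behavior.
OSC_CLOCK_HZ = 25_000_000  # internal oscillator frequency
COUNTS = 4096              # 12-bit counter per PWM period

def prescale_for(update_rate_hz: float) -> int:
    """PRE_SCALE register value: round(osc / (4096 * rate)) - 1."""
    return round(OSC_CLOCK_HZ / (COUNTS * update_rate_hz)) - 1

def pulse_us_to_counts(pulse_us: float, update_rate_hz: float = 50.0) -> int:
    """Convert a pulse width in microseconds to an OFF count (with ON at count 0)."""
    period_us = 1_000_000 / update_rate_hz
    return round(pulse_us * COUNTS / period_us)

def angle_to_pulse_us(angle_deg: float, min_us: float = 1000.0,
                      max_us: float = 2000.0, span_deg: float = 180.0) -> float:
    """Linear angle-to-pulse map; min_us, max_us and span_deg are assumed
    servo characteristics, not measured MG995 values."""
    if not 0.0 <= angle_deg <= span_deg:
        raise ValueError(f"angle {angle_deg} outside 0..{span_deg} degrees")
    return min_us + (max_us - min_us) * angle_deg / span_deg

def angle_to_counts(angle_deg: float, update_rate_hz: float = 50.0) -> int:
    """Servo angle in degrees -> PCA9685 OFF count at the given update rate."""
    return pulse_us_to_counts(angle_to_pulse_us(angle_deg), update_rate_hz)
```

At 50 Hz, `prescale_for(50)` returns 121, and the 1500 µs center pulse (`angle_to_counts(90)`) maps to 307 counts. In an Arduino sketch, counts like these would typically be passed to the Adafruit PWM Servo Driver library's `setPWM()` after setting the update rate with `setPWMFreq()`.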

To protect both the SAR's skeletal structure and the child, the SAR was covered in Sunmate foam that meets the ASTM F963-17 small parts testing standards for stuffing materials in toys for children [18]. The foam's material properties are also consistent with the lab's pediatric OT's recommendations for stiffness and pliability, based on the predicted grasp strength of children with neuromotor dysfunction. Finally, to increase the playfulness of the SAR design, the skeletal structure and foam are covered in an easy-to-remove, easy-to-clean stuffed animal covering and outfit chosen according to the individual child's preferences.

EVALUATION AND RESULTS

The final minimally viable prototype was evaluated against the design specification matrix below, which details the relationship between user needs and technical requirements determined from literature and expert testimony. Literature supports the use of the NAO robotic platform as an industry standard [19]; therefore, NAO technical specifications were used as the main point of comparison.

| Spec # | Design Specification | Test # | V&V Test | Result (P/F) |
|---|---|---|---|---|
| 1 | Lightweight | 1 | Total weight less than or equal to 5.48 kg (12.08 lb) | P |
| 2 | Low cost | 2 | Total cost less than $8,000 | P |
| 3 | Structurally stable | 3 | Maintain vertical orientation during use case | P |
| 4 | Structurally durable | 4 | Drop test from standard table height (28 in) | P |
| 5 | Easily cleanable | 5 | Outer covering can be removed and washed in a commercial washer | P |
| 6 | 3 DOF per arm | 6 | Demonstrate shoulder flexion and extension | P |
| | | 7 | Demonstrate shoulder abduction and adduction | P |
| | | 8 | Demonstrate elbow flexion and extension | P |
| 7 | Consistent performance of use case | 9 | Demonstrate use case 10 times without error | P |
| 8 | Sound within acceptable noise ranges to not scare children with CP | 10 | Motor noise below 60 dB (normal speech) | P |
| 9 | Easily reproducible | 11 | Total fabrication and assembly time less than 1 standard work week (5 days, 120 hours) by untrained builders | P |
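Four of the V&V tests in the matrix are quantitative thresholds; the others (stability, drop, cleaning, and motion demonstrations) are observed pass/fail outcomes. As a minimal sketch, the quantitative criteria can be encoded so that future design iterations are re-checked against the same limits. The dictionary keys and the `evaluate` helper are hypothetical names for illustration, and no measured values are assumed here, since the section reports only pass/fail results.

```python
from typing import Callable, Dict

LB_PER_KG = 2.20462262  # pounds per kilogram

# Quantitative pass criteria transcribed from the V&V table.
CRITERIA: Dict[str, Callable[[float], bool]] = {
    "weight_kg": lambda kg: kg <= 5.48,        # NAO reference mass (less than or equal)
    "cost_usd": lambda usd: usd < 8_000,       # strictly less than $8,000
    "motor_noise_db": lambda db: db < 60.0,    # below normal-speech level
    "build_hours": lambda h: h < 120.0,        # 5 days, 120 hours, as stated in the table
}

def kg_to_lb(kg: float) -> float:
    return kg * LB_PER_KG

def evaluate(measured: Dict[str, float]) -> Dict[str, str]:
    """Return 'P' or 'F' for each supplied measurement; unknown keys raise KeyError."""
    return {name: "P" if CRITERIA[name](value) else "F"
            for name, value in measured.items()}
```

As a consistency check on the table itself, `round(kg_to_lb(5.48), 2)` gives 12.08, matching the pound figure listed for the weight criterion.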

DISCUSSION

Results from preliminary verification and validation (V&V) testing confirm that the minimally viable SAR prototype, developed in 8 weeks, meets the NAO platform-defined functional requirements outlined above. Future V&V testing will verify that the SAR prototype meets the toy and assistive technology standards for children, including ASTM F963-17 [18], ISO 13482 [20], and ISO/IEC TR 12182 [21]. Upcoming V&V testing will also evaluate the SAR's capacity to support participation and engagement by assessing the prototype's camera and facial recognition, microphone and sound recognition, and pressure sensor activation. Informed by the preliminary V&V results, future design iterations will improve the SAR's fabrication efficiency and reproducibility by simplifying CAD component designs and hardware assembly requirements.

To assess the SAR prototype's therapeutic efficacy and to quantify engagement, we will conduct a Wizard of Oz feasibility study, a standardized experimental model in human-robot interaction (HRI) research [22], [23]. A subsequent design sprint will add capabilities for more refined interactions. Future work also aims to quantify child behavior and movement quality in response to SAR intervention using deep learning-based markerless tracking tools combined with computational human movement kinematics and emotion recognition modeling and analysis methods [24], [25].
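One simple kinematic measure that could be derived from markerless tracking output is a joint angle per video frame, summarized as a range of motion over a reaching trial. The sketch below assumes the tracker has already produced per-frame 2D image coordinates for three keypoints; the keypoint labels `shoulder`, `elbow`, and `wrist` and the frame format are hypothetical, and 2D angles depend on camera placement, so they approximate rather than measure true 3D elbow motion.

```python
import math
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float]

def included_angle_deg(proximal: Point, joint: Point, distal: Point) -> float:
    """Angle at `joint` between the two limb segments, in degrees (0..180).
    180 means the limb is fully straight at that joint."""
    ax, ay = proximal[0] - joint[0], proximal[1] - joint[1]
    bx, by = distal[0] - joint[0], distal[1] - joint[1]
    if (ax == 0 and ay == 0) or (bx == 0 and by == 0):
        raise ValueError("keypoints coincide; angle undefined")
    cross = ax * by - ay * bx
    dot = ax * bx + ay * by
    # atan2 of |cross| and dot is numerically stabler than acos near 0 and 180.
    return math.degrees(math.atan2(abs(cross), dot))

def elbow_range_of_motion(frames: Iterable[Dict[str, Point]]) -> float:
    """Range (max - min) of the elbow's included angle across frames.
    The keypoint names are hypothetical tracker labels."""
    angles = [included_angle_deg(f["shoulder"], f["elbow"], f["wrist"])
              for f in frames]
    if not angles:
        raise ValueError("no frames supplied")
    return max(angles) - min(angles)
```

Clinical elbow flexion is conventionally reported as 180° minus this included angle; the range of motion is the same under either convention.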

CONCLUSION

We demonstrated that the minimally viable SAR can effectively complete the UL rehabilitation use case for children with neuromotor dysfunction. However, the prototype evaluation also identified several design improvements that will be implemented in future iterations. The current prototype is undergoing user testing with clinical experts, typically developing children, and children with neuromotor dysfunction. Results are forthcoming.

REFERENCES

[1] V. Schiariti et al., "Implementation of the International Classification of Functioning, Disability, and Health (ICF) Core Sets for Children and Youth with Cerebral Palsy: Global Initiatives Promoting Optimal Functioning," Int. J. Environ. Res. Public. Health, vol. 15, no. 9, 2018, doi: 10.3390/ijerph15091899.

[2] M. Simms, "24 - Intellectual and Developmental Disability," in Nelson Pediatric Symptom-Based Diagnosis, R. M. Kliegman, P. S. Lye, B. J. Bordini, H. Toth, and D. Basel, Eds. Elsevier, 2018, pp. 367–392.e2, doi: 10.1016/B978-0-323-39956-2.00024-8.

[3] M. Stavsky, O. Mor, S. A. Mastrolia, S. Greenbaum, N. G. Than, and O. Erez, "Cerebral Palsy—Trends in Epidemiology and Recent Development in Prenatal Mechanisms of Disease, Treatment, and Prevention," Front. Pediatr., vol. 5, Feb. 2017, doi: 10.3389/fped.2017.00021.

[4] B. Steenbergen and A. M. Gordon, "Activity limitation in hemiplegic cerebral palsy: evidence for disorders in motor planning," Dev. Med. Child Neurol., vol. 48, no. 9, pp. 780–783, Sep. 2006, doi: 10.1017/S0012162206001666.

[5] A. Theadom et al., "Prevalence of Muscular Dystrophies: A Systematic Literature Review," Neuroepidemiology, vol. 43, no. 3–4, pp. 259–268, 2014, doi: 10.1159/000369343.

[6] CDC, "Spina Bifida Data and Statistics | CDC," Centers for Disease Control and Prevention, Aug. 31, 2020. https://www.cdc.gov/ncbddd/spinabifida/data.html (accessed Feb. 11, 2022).

[7] C. N. Gerber, B. Kunz, and H. J. A. van Hedel, "Preparing a neuropediatric upper limb exergame rehabilitation system for home-use: a feasibility study," J. NeuroEngineering Rehabil., vol. 13, no. 1, p. 33, Mar. 2016, doi: 10.1186/s12984-016-0141-x.

[8] B. V. Zelst, M. D. Miller, R. N. Russo, S. Murchland, and M. Crotty, "Activities of daily living in children with hemiplegic cerebral palsy: a cross-sectional evaluation using the Assessment of Motor and Process Skills," Dev. Med. Child Neurol., vol. 48, no. 9, pp. 723–727, Sep. 2006, doi: 10.1017/S0012162206001551.

[9] H.-C. Chiu and L. Ada, "Constraint-induced movement therapy improves upper limb activity and participation in hemiplegic cerebral palsy: a systematic review," J. Physiother., vol. 62, no. 3, pp. 130–137, Jul. 2016, doi: 10.1016/j.jphys.2016.05.013.

[10] N. A. Malik, F. A. Hanapiah, R. A. A. Rahman, and H. Yussof, "Emergence of Socially Assistive Robotics in Rehabilitation for Children with Cerebral Palsy: A Review," Int. J. Adv. Robot. Syst., vol. 13, no. 3, p. 135, May 2016, doi: 10.5772/64163.

[11] M. M. Blankenship and C. Bodine, "Socially Assistive Robots for Children With Cerebral Palsy: A Meta-Analysis," IEEE Trans. Med. Robot. Bionics, vol. 3, no. 1, pp. 21–30, Feb. 2021, doi: 10.1109/TMRB.2020.3038117.

[12] J. A. Buitrago, A. M. Bolaños, and E. C. Bravo, "A motor learning therapeutic intervention for a child with cerebral palsy through a social assistive robot," Disabil. Rehabil. Assist. Technol., pp. 1–6, Feb. 2019, doi: 10.1080/17483107.2019.1578999.

[13] K. Winkle, P. Caleb-Solly, A. Turton, and P. Bremner, "Mutual Shaping in the Design of Socially Assistive Robots: A Case Study on Social Robots for Therapy," Int. J. Soc. Robot., vol. 12, no. 4, pp. 847–866, Aug. 2020, doi: 10.1007/s12369-019-00536-9.

[14] E. E. Butler, A. L. Ladd, S. A. Louie, L. E. LaMont, W. Wong, and J. Rose, "Three-dimensional kinematics of the upper limb during a Reach and Grasp Cycle for children," Gait Posture, vol. 32, no. 1, pp. 72–77, May 2010, doi: 10.1016/j.gaitpost.2010.03.011.

[15] "Poppy Project - Open source robotic platform." https://poppy-project.org/en/ (accessed Nov. 17, 2021).

[16] S. Mick et al., "Reachy, a 3D-Printed Human-Like Robotic Arm as a Testbed for Human-Robot Control Strategies," Front. Neurorobotics, vol. 13, p. 65, 2019, doi: 10.3389/fnbot.2019.00065.

[17] C. Clark, "Intelligent Clinical Augmentation: Developing a Novel Socially Assistive Robot for Children with Cerebral Palsy," University of Colorado Denver | Anschutz Medical Campus, 2020.

[18] "Toy Safety Business Guidance & Small Entity Compliance Guide," U.S. Consumer Product Safety Commission. https://www.cpsc.gov/Business--Manufacturing/Business-Education/Toy-Safety-Business-Guidance-and-Small-Entity-Compliance-Guide (accessed Dec. 07, 2021).

[19] "NAO the humanoid and programmable robot | SoftBank Robotics." https://www.softbankrobotics.com/emea/en/nao (accessed Mar. 09, 2020).

[20] "ISO 13482:2014(en), Robots and robotic devices — Safety requirements for personal care robots." https://www.iso.org/obp/ui/#iso:std:iso:13482:ed-1:v1:en (accessed Jan. 03, 2022).

[21] "ISO/IEC TR 12182:2015(en), Systems and software engineering — Framework for categorization of IT systems and software, and guide for applying it." https://www.iso.org/obp/ui/#iso:std:iso-iec:tr:12182:ed-2:v1:en (accessed Jan. 03, 2022).

[22] A. Steinfeld, O. C. Jenkins, and B. Scassellati, "The oz of wizard: simulating the human for interaction research," in Proceedings of the 4th ACM/IEEE international conference on Human robot interaction - HRI '09, La Jolla, California, USA, 2009, p. 101. doi: 10.1145/1514095.1514115.

[23] F. Rietz, A. Sutherland, S. Bensch, S. Wermter, and T. Hellström, "WoZ4U: An Open-Source Wizard-of-Oz Interface for Easy, Efficient and Robust HRI Experiments," Front. Robot. AI, vol. 8, p. 213, 2021, doi: 10.3389/frobt.2021.668057.

[24] A. Mathis et al., "DeepLabCut: markerless pose estimation of user-defined body parts with deep learning," Nat. Neurosci., vol. 21, no. 9, pp. 1281–1289, Sep. 2018, doi: 10.1038/s41593-018-0209-y.

[25] S. B. Hausmann, A. M. Vargas, A. Mathis, and M. W. Mathis, "Measuring and modeling the motor system with machine learning," Curr. Opin. Neurobiol., vol. 70, pp. 11–23, Oct. 2021, doi: 10.1016/j.conb.2021.04.004.