H.A.T. – A Camera-Based Finger Range-of-Motion Hand Assessment Tool to Enhance Therapy Practices

Yuka Fan, Sasha Portnova, Emily Boeschoten

Problem Statement and Background

Hand function assessment is crucial for rehabilitation following injury, surgery, or conditions affecting dexterity (e.g., stroke, arthritis). One way to measure hand function is to assess the range of motion (ROM) of each joint, traditionally with manual goniometers (Fig. 1). Though common in hand clinics, manual goniometers are time-consuming, require training, and have low inter-rater reliability. Furthermore, they create barriers to career entry in occupational therapy for individuals with low finger dexterity, who may struggle to perform the required assessments themselves. Measuring improvements in hand function is important not only for clinical decision-making but also for justifying continued financial support for treatment, since insurance companies often require objective assessment results to approve ongoing therapy coverage. These factors underscore the growing need for a more accessible, efficient, and reliable tool to quantify hand movement in both clinical and home settings, including virtual telerehabilitation visits. In recent years, several solutions have emerged that attempt to improve the accuracy, efficiency, and accessibility of joint ROM measurement:

Digital Goniometers

Digital goniometers, like those from Biometrics [1], aim to automate the ROM measurement process. These devices provide precise measurements, simplifying data collection compared to manual goniometers. However, while these solutions offer more accuracy and ease of use, they are still time-consuming, costly, and limited in terms of remote or telerehabilitation applications.

Smartphone-Based Tracking

A recent study [2] investigated the use of smartphones as goniometers and found that they can offer convenient and reliable ROM measurements without the need for physical tools. Another study estimated finger ROM from smartphone photographs, using basic photo-based technology to estimate joint angles [3]. Smartphone-based tracking also exists in application form: Curovate [4] is an app that uses a smartphone camera to measure lower limb ROM via videography. However, while convenient, smartphone-based solutions still face challenges in accuracy, reliability, and time consumption.

Data Visualization Solutions

Few solutions exist for visualizing the results of hand function assessments. Raintree Software [5], a commonly used electronic health records system, stores and manages ROM data from occupational therapy sessions, but it lacks visualization tools such as graphs that give a clear, interpretable display of progress over time. This highlights a gap in the market for solutions that not only collect data but also present it in ways that enhance clinical decision-making.

The Hand Assessment Tool (H.A.T.) aims to address these gaps by utilizing camera-based hand-tracking technology to provide an objective, non-invasive, and real-time measurement of finger joint ROM. H.A.T. is designed to overcome the limitations of traditional tools by offering an accessible and efficient alternative that can be used both in-clinic and remotely for virtual visits. By combining motion-tracking technology with a user-friendly interface, H.A.T. provides clinicians with the tools they need to more effectively monitor patient progress, and patients can benefit from more personalized and interactive rehabilitation.

Methods

Hardware Setup

We used an Ultraleap hand-tracking device (Ultraleap, San Francisco, CA, USA) (Fig. 2), which captures finger ROM with dual infrared cameras and outputs joint angles (Fig. 3) for clinical assessment [6]. The camera operates within a 10-110 cm range with a 160° field of view, enabling natural hand interaction. Ultraleap hand-tracking tools have previously been proposed as exergames within hand rehabilitation protocols [7-11] and have been shown to increase patient engagement and motivation during rehabilitation sessions. Past research confirms the device's accuracy across users with and without disabilities [12]. The suggested hardware add-on is an ergonomic arm rest (Fig. 4) that improves measurement stability and supports patients with limited arm strength.

H.A.T. was developed in Unity (Unity Technologies, San Francisco, CA, USA), chosen for its seamless integration with Ultraleap and its cross-platform support, including Windows, iOS, and Android. Unity provides a simple and flexible interface-building environment, making it easy to design intuitive and interactive rehabilitation tools such as H.A.T. Additionally, our prior experience with the engine enabled efficient development and rapid iteration.

User Testing

We conducted four rounds of user testing to refine H.A.T.:

- Low-Fidelity Prototyping: We used LEGO blocks to explore how 5 users interacted with the Ultraleap device and assess the clarity of task instructions. This study led to several improvements, such as using static images for hand task instructions as they proved to be more effective than videos and refining instruction wording for clarity, including separating guidance on hand orientation and pose.

- High-Fidelity Prototyping: A series of interactive prototypes that we developed in Unity were tested with 8 users in two rounds to refine the UI design, features, user flow, and instructional clarity. Several key improvements were made: removing pre-checked selections in the "Select Tasks" menu for a cleaner and more intuitive experience, reducing the text and increasing the font size for better readability, and adjusting the Ultraleap device placement to ensure it did not block users' view of their hands during task execution. In addition, future interface improvements were identified such as mirroring the virtual hand in the interface, adding success messages after tasks, and implementing additional audio cues when the hand is not detected by the Ultraleap device.

- Final Co-Design Testing: In our final round, we worked with co-designers with direct hand therapy expertise (from either the clinician’s or the patient’s side). Their feedback led to key refinements, including CSV export for data sharing, wrist joint tracking integration, a hand joint map for intuitive visualization, and arm rest support to assist patients with limited upper-extremity mobility.

Measurement Stability Assessment

The reliability of H.A.T. hand tracking was evaluated by having three individuals each perform the full-range finger ROM assessment five times. Stability was quantified as the standard deviation of each joint's ROM across the five repetitions, averaged across all joints for each individual. The average variability was 3.0 degrees per joint, indicating stable repeated measurements.
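The stability metric described above can be sketched as follows. This is a minimal illustration, not the analysis script used for the reported numbers: the joint names and ROM values are hypothetical, and the use of the sample standard deviation (n - 1 denominator) is an assumption, since the text does not state which form was used.

```python
from statistics import mean, stdev

def joint_variability(trials):
    """Per-joint SD across repeated assessments, plus the mean SD over joints.

    trials: list of dicts mapping joint name -> ROM in degrees,
            one dict per repetition for a single individual.
    """
    joints = list(trials[0].keys())
    # Sample standard deviation of each joint's ROM across repetitions (assumed form).
    per_joint_sd = {j: stdev([t[j] for t in trials]) for j in joints}
    # Average the per-joint SDs to get one variability value for this individual.
    return per_joint_sd, mean(list(per_joint_sd.values()))

# Hypothetical ROM values (degrees) from five repetitions by one individual.
trials = [
    {"index_MCP": 85.0, "index_PIP": 100.0},
    {"index_MCP": 88.0, "index_PIP": 104.0},
    {"index_MCP": 82.0, "index_PIP": 102.0},
    {"index_MCP": 86.0, "index_PIP": 98.0},
    {"index_MCP": 84.0, "index_PIP": 101.0},
]
per_joint_sd, avg_sd = joint_variability(trials)
print(f"average variability: {avg_sd:.2f} degrees per joint")
```

Repeating this per individual and averaging the results would give a single summary figure comparable to the one reported.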

Description of Final Approach and Design

H.A.T. integrates dual-camera input from the Ultraleap device with advanced hand-tracking algorithms to measure finger ROM without the need for manual goniometers. The system combines real-time video processing that identifies hand joint ROM, a user-friendly interface that provides visual feedback and reports for therapists and patients, and an easy-to-use, out-of-the-box design that requires no complex setup.

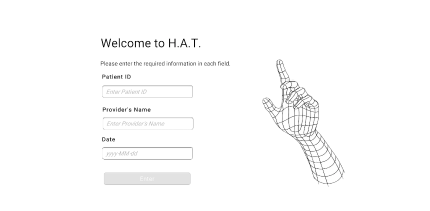

The H.A.T. interface begins with a login page (Fig. 5) where clinicians can enter the patient ID, provider's name, and date, ensuring accurate data matching.

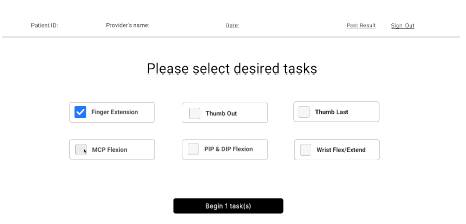

Next, users select from six hand tasks (Fig. 6), each designed to track different hand joints. Clinicians can focus on specific joints or opt for full-range tracking, which captures flexion/extension of 15 hand joints, including: thumb (MCP & IP), fingers (MCP, PIP, & DIP), and wrist joint.
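As a quick check of the joint count, the 15 joints can be enumerated as below. The identifier names and the finger labels are illustrative assumptions, not the names used inside H.A.T.

```python
# Hypothetical identifiers for the joints covered by full-range tracking.
FINGERS = ["index", "middle", "ring", "little"]

TRACKED_JOINTS = (
    ["thumb_MCP", "thumb_IP"]                      # 2 thumb joints
    + [f"{finger}_{joint}"                         # 4 fingers x 3 joints = 12
       for finger in FINGERS
       for joint in ("MCP", "PIP", "DIP")]
    + ["wrist"]                                    # 1 wrist joint
)

print(len(TRACKED_JOINTS))
```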

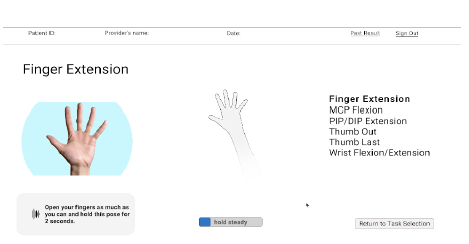

After task selection, patients are guided step-by-step through each task with audio, visual, and text instructions (Fig. 7), accommodating diverse user needs. The system ensures accurate data collection by detecting hand presence before starting the 2-second recording window and only counting time when the hand is fully visible to the Ultraleap device. The timer resets if the hand leaves the device's visibility range.
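The recording-window behavior described above can be sketched as a small state holder that is updated once per tracking frame. This is an illustrative Python sketch rather than the Unity implementation: the class name, method signature, and the choice to discard collected samples on reset are all assumptions.

```python
class RecordingWindow:
    """Counts time only while the hand is visible; resets when it is lost."""

    def __init__(self, duration_s=2.0):
        self.duration_s = duration_s   # required continuous visible time
        self.elapsed_s = 0.0           # visible time accumulated so far
        self.samples = []              # joint-angle frames captured in this window

    def update(self, hand_visible, dt_s, joint_angles=None):
        """Process one frame; return True once the window is complete."""
        if not hand_visible:
            # Hand left the tracking range: restart the timer.
            # Dropping the samples as well is an assumption of this sketch.
            self.elapsed_s = 0.0
            self.samples.clear()
            return False
        self.elapsed_s += dt_s
        if joint_angles is not None:
            self.samples.append(joint_angles)
        return self.elapsed_s >= self.duration_s

# Simulated frames at 0.5 s intervals: the hand drops out on the third frame.
window = RecordingWindow(duration_s=2.0)
visibility = [True, True, False, True, True, True, True]
results = [window.update(v, 0.5, {"index_MCP": 80.0}) for v in visibility]
print(results)
```

In this simulation the window completes only on the last frame, because the dropout discards the first second of visible time.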

Patients are then prompted to either proceed to the next task or re-record if needed. To ensure smooth transitions, the interface automatically advances to the next task after 5 seconds, reducing unnecessary interactions for users with limited mobility. Once all tasks are completed, the results page (Fig. 8) presents past data in the form of line graphs. Users can 1) select different fingers or the wrist, 2) navigate between joints using arrow buttons, 3) view an interactive hand map that highlights the currently displayed joint for clear clinician-patient communication, and 4) choose the date range for displaying the data. Finally, the results page includes options to 1) export data as a CSV file for easy sharing, 2) return to task selection, and 3) sign out.
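A CSV export of this kind might look like the sketch below. The column names, the one-row-per-joint layout, and the sample values are hypothetical; the actual H.A.T. export format is not specified here.

```python
import csv
import io

# Hypothetical column layout: one row per joint measurement.
FIELDNAMES = ["patient_id", "provider", "date", "joint", "rom_deg"]

def export_rom_csv(records, stream):
    """Write ROM records (a list of dicts keyed by FIELDNAMES) to a text stream."""
    writer = csv.DictWriter(stream, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(records)

# Made-up example records for illustration only.
records = [
    {"patient_id": "P001", "provider": "Dr. Example", "date": "2025-01-15",
     "joint": "index_MCP", "rom_deg": 85.0},
    {"patient_id": "P001", "provider": "Dr. Example", "date": "2025-01-15",
     "joint": "index_PIP", "rom_deg": 101.0},
]

buffer = io.StringIO()
export_rom_csv(records, buffer)
csv_lines = buffer.getvalue().splitlines()
print(csv_lines[0])
```

Writing to a generic text stream keeps the sketch testable in memory; passing a file opened with `newline=""` would write the same content to disk.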

Outcome

The final prototype (Fig. 9) successfully captures and quantifies finger ROM, streamlining hand function assessment by guiding patients through a series of hand tasks to record the necessary data. Data visualization enhances result delivery, allowing both patient and clinician to track progress and evaluate the effectiveness of current therapy practices. Preliminary validation tests indicate stable measures across sessions (average variability of 3.0 degrees across 15 joints) using the Ultraleap hand-tracking platform. The full-range joint assessment takes 90 seconds on average, which significantly reduces the time clinicians currently spend measuring ROM with manual goniometers. During the co-designers’ interface assessment, the team rated the H.A.T. interface 3/4 for accuracy confidence, 3.5/4 for ease of use, 3.5/4 for clarity of instructions, and 3.5/4 for visual appeal, suggesting significant potential for seamless integration into everyday clinical practice.

Cost

Hardware: Leap Motion Controller 2 ($219.00)

Note: the system can work with older models, such as Ultraleap 3Di ($275.00), Stereo IR170 ($250.00), and Leap Motion Controller ($199.99). We are also currently working on making the system work with any existing webcam ($30-200).

Suggested Hardware: Ergonomic arm rest ($34.99)

Note: this is suggested hardware to include during the hand function assessment to keep the hand stable in the camera frame, improve measurement repeatability, and provide support for patients with limited arm strength.

Software: Free

Note: the H.A.T. interface will be released open-source for any clinic to utilize in their practice.

Total Estimated Cost: $30-275

Future Work

Our future developments aim to enhance the usability and accessibility of the H.A.T. interface by expanding its capabilities and improving clinical integration. Planned improvements include:

Bilateral Hand Tracking

Incorporating tracking for both the right and left hands, allowing users to select which hand is being tracked.

Traditional Webcam-Based Tracking

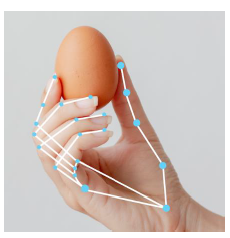

Developing a webcam-based hand-tracking option to eliminate the need for an Ultraleap device, increasing accessibility and affordability. The webcam integration under consideration, the Google MediaPipe Hand model (Fig. 10), relies on computer vision technology, as does the Ultraleap device. A previous study explored this model for estimating joint ROM [13] and showed MediaPipe’s potential for integration into rehabilitation tools.
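A landmark-based tracker such as MediaPipe reports 3D keypoints for the hand (21 per hand) rather than joint angles, so a joint angle has to be derived from neighboring keypoints. The sketch below shows one common way to do this, using the angle between the two bone vectors that meet at a joint. It is an assumption about how such an integration could work, not the method planned for H.A.T.: the function name and coordinates are hypothetical, and the calculation does not distinguish flexion from hyperextension.

```python
import math

def flexion_angle_deg(proximal, joint, distal):
    """Angle (degrees) by which the finger deviates from straight at `joint`.

    Each argument is an (x, y, z) keypoint. 0 means the two bones are
    collinear (straight finger); larger values mean more bending.
    """
    u = [j - p for p, j in zip(proximal, joint)]   # bone entering the joint
    v = [d - j for j, d in zip(joint, distal)]     # bone leaving the joint
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        raise ValueError("keypoints must be distinct")
    # Clamp to guard against floating-point values just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

# Hypothetical keypoints: for the index PIP joint, the three inputs would be
# the index MCP, PIP, and DIP landmarks (indices 5, 6, and 7 in MediaPipe Hands).
straight = flexion_angle_deg((0, 0, 0), (1, 0, 0), (2, 0, 0))
right_angle = flexion_angle_deg((0, 0, 0), (1, 0, 0), (1, 1, 0))
print(straight, right_angle)
```

Angles computed this way would still need to be validated against goniometer or motion-capture measurements before clinical use.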

Pre- and Post-Therapy ROM Tracking

Introducing an option to record range of motion (ROM) before and after therapy sessions, with a dedicated results visualization for same-day progress comparison.

Voice-Based Commands

Incorporating voice-based commands for a more accessible and intuitive experience, allowing patients to navigate through tasks using simple voice prompts instead of pressing buttons on the screen. Patients will be able to continue to the next task or re-record their current hand movement with just their voice, reducing unnecessary hand movements and making the assessment process smoother.

Additionally, we will engage clinicians in a show-and-tell session to gather feedback on the interface's usability and potential improvements. Future work includes 1) evaluating the stability of hand tracking using both Ultraleap and webcam-based methods across a diverse user population, 2) comparing the stability of H.A.T. measurements with that of traditional goniometers, and 3) validating their accuracy against marker-based motion capture. These advancements will help refine H.A.T. into a more versatile, clinically validated, and widely adoptable tool for hand therapy assessment.

Significance

H.A.T. offers a novel, non-invasive, and scalable solution for hand assessment, enhancing rehabilitation practices with reliable motion tracking. Its implementation can improve therapy personalization, leading to better functional outcomes for individuals with hand impairments. Additionally, by eliminating the need for hand-held tools in clinical assessments, H.A.T. reduces barriers to career entry into occupational therapy for individuals with limited hand function. The interface also enables remote monitoring, laying the foundation for telerehabilitation and reducing the need for frequent in-person visits. By bridging the gap between traditional assessment methods and modern technology, H.A.T. represents a significant advancement in hand therapy.

Acknowledgements

Our team sincerely thanks the Department of Rehabilitation Medicine for their invaluable support and insightful feedback throughout the development of this tool. We are especially grateful for their enthusiasm and openness to innovations in hand therapy. Additionally, we extend our appreciation to the HCDE 598: Designing for XR course for their active participation in user testing, which significantly contributed to refining and improving the interface. Lastly, we would like to thank the Hands for Living clinic in Redmond, WA for allowing us to observe sessions to better understand the challenges of current hand assessments in clinics.

References

[1] Medical Digital Goniometers for Physical Therapy | E-LINK. (n.d.). E-LINK. https://biometricselink.com/medical-digital-goniometers/

[2] Theile, H., Walsh, S., Scougall, P., Ryan, D., & Chopra, S. (2022). Smartphone goniometer application for reliable and convenient measurement of finger range of motion: a comparative study. Australasian Journal of Plastic Surgery, 5(2), 37–43. https://doi.org/10.34239/ajops.v5n2.335

[3] Zhao, J. Z., Blazar, P. E., Mora, A. N., & Earp, B. E. (2019). Range of motion measurements of the fingers via smartphone photography. Hand, 15(5), 679–685. https://doi.org/10.1177/1558944718820955

[4] Therapist, E. R.-. P. (2024, May 12). How to measure range of motion using Curovate | Curovate. Curovate Blog | Physical Therapy App. https://curovate.com/blog/how-to-measure-your-range-of-motion/

[5] Raintree Systems: All-in-One Occupational Therapy EMR. (2024, August 20). Raintree Systems. https://www.raintreeinc.com/occupational-therapy/

[6] Digital worlds that feel human | Ultraleap. (n.d.). https://www.ultraleap.com/

[7] Gonçalves, R. S., De Souza, M. R. S. B., & Carbone, G. (2022). Analysis of the Leap Motion Controller’s performance in measuring wrist rehabilitation tasks using an industrial robot arm reference. Sensors, 22(13), 4880. https://doi.org/10.3390/s22134880

[8] Tarakci, E., Arman, N., Tarakci, D., & Kasapcopur, O. (2019). Leap Motion Controller–based training for upper extremity rehabilitation in children and adolescents with physical disabilities: A randomized controlled trial. Journal of Hand Therapy, 33(2), 220-228.e1. https://doi.org/10.1016/j.jht.2019.03.012

[9] Hand rehabilitation via gesture recognition using LEAP Motion controller. (2018, July 1). IEEE Conference Publication | IEEE Xplore. https://ieeexplore.ieee.org/document/8431349

[10] Aguilera-Rubio, Á., Alguacil-Diego, I. M., Mallo-López, A., & Cuesta-Gómez, A. (2021). Use of the LEAP Motion Controller® system in the rehabilitation of the upper limb in stroke. A systematic review. Journal of Stroke and Cerebrovascular Diseases, 31(1), 106174. https://doi.org/10.1016/j.jstrokecerebrovasdis.2021.106174

[11] Akdemir, S., Tarakci, D., Budak, M., & Hajebrahimi, F. (2023). The effect of leap motion controller based exergame therapy on hand function, cognitive function and quality of life in older adults. A randomised trial. Journal of Gerontology and Geriatrics, 71(3), 152–165. https://doi.org/10.36150/2499-6564-n606

[12] Accuracy of Video-Based Hand tracking for people with Upper-Body Disabilities. (2024). IEEE Journals & Magazine | IEEE Xplore. https://ieeexplore.ieee.org/abstract/document/10526367/

[13] Létourneau, S. G., Jin, H., Peters, E., Grewal, R., Ross, D., & Symonette, C. (2024). Augmented Reality-Based Finger Joint Range of Motion Measurement: Assessment of Reliability and concurrent Validity. The Journal of Hand Surgery. https://doi.org/10.1016/j.jhsa.2024.10.006