SIMPLE ACTIVITY RECOGNITION USING SMARTPHONE TECHNOLOGIES FOR IN-HOME REHABILITATION

Md Osman Gani¹, Taskina Fayezeen¹, Sheikh Iqbal Ahamed¹,

Dennis B. Tomashek², Roger O. Smith²

¹Ubicomp Lab, Marquette University, Milwaukee, WI

²R2D2 Center, University of Wisconsin, Milwaukee, WI

ABSTRACT

Human activity recognition is a multidisciplinary research area with significance in fields such as assistive technology, rehabilitation engineering, health outcomes, artificial intelligence, and the social sciences. In this paper, we describe an approach to recognizing simple full body motor activities using a smartphone's accelerometer. Five male subjects, aged 24 to 35 years, participated in a laboratory experiment, performing six different activities in an uncontrolled environment. The results indicate that the system achieves 100% recognition accuracy for stairs (walking downstairs and upstairs combined), running, sitting, and phone placed on table, and recognizes walking with 93.33% accuracy. We present the details of the experiment. This research is a step toward building a complex activity recognition system, and it is also relevant to assistive technology and in-home rehabilitation.

BACKGROUND

People perform numerous activities in their daily lives [1]. These range from simple full body motor activities, such as walking, running, and sitting, to complex functional activities, such as reading a book, playing soccer, and cooking. Building a system that accurately identifies these activities is challenging. Human activity recognition plays an important role in many areas, including rehabilitation engineering, assistive technology, and the social sciences [2] [3] [4]. Performing activities of daily living (ADLs) and instrumental activities of daily living (IADLs) is an important part of living a healthy, independent life [5][6]. Performance in these activities can be an important indicator for patients recovering from a newly acquired disability and for patients at risk of age-related decline. A decline in ADL and IADL performance may also be an early indicator of disease or illness [7].

The number of smartphone users has grown rapidly; total smartphone shipments in 2013 reached one billion units [8]. Smartphones come with a number of useful sensors, including accelerometers, gyroscopes, and magnetometers. Motion-related sensors, such as the accelerometer and gyroscope, have been widely used as wearable sensors in activity recognition systems. The aim of this project is to recognize simple full body motor activities from the acceleration captured by the smartphone's accelerometer. Previous research has used smartphone sensors to recognize simple activities, but most of these approaches fall short in one or more respects, such as recognition accuracy, computational and memory complexity, or real-time implementation [9] [10] [11].

PURPOSE

The purpose of this study is to answer the following research questions:

- Is one-axis acceleration from the smartphone's accelerometer alone sufficient to recognize simple full body motor activities?

- Can this one-axis acceleration achieve high activity recognition accuracy?

METHOD

Approach

Data Collection

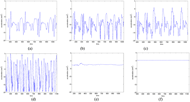

The researchers developed a data collection tool, UbiSen (Ubicomp Lab Sensor Application for Android), to collect sensor data. It can record data from multiple sensors simultaneously. Sensor data were collected for five activities: walking, walking downstairs, walking upstairs, running, and sitting. Data were also collected with the smartphone lying on a table to simulate a stationary position. The y-axis accelerometer data for these six activities are shown in Figure 2.

Data were collected from 5 able-bodied male subjects aged 24 to 35 years. Each participant performed six activities in an uncontrolled environment: five full body motor activities with the smartphone in the front pocket of their trousers (with the phone's front face oriented upside down), plus the phone-on-table condition. Each activity was performed for 2 minutes, yielding 60 minutes of sensor data in total (5 participants × 6 activities × 2 minutes). A Nexus 5 Android smartphone was used for data collection.
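The paper does not describe UbiSen's output format. As a minimal sketch, assuming a hypothetical comma-separated log with one `timestamp_ms,x,y,z` row per accelerometer reading, the y-axis signal used in this study could be extracted as follows. The column layout and the helper names `load_y_axis` and `duration_seconds` are illustrative assumptions, not UbiSen's actual interface.

```python
import csv
import io
from typing import List, Tuple


def load_y_axis(lines) -> Tuple[List[int], List[float]]:
    """Return (timestamps_ms, y_values) from a hypothetical UbiSen-style log.

    Assumes each row is 'timestamp_ms,x,y,z' with no header row; only the
    y-axis column is kept, matching the single-axis approach of the paper.
    """
    timestamps, ys = [], []
    for row in csv.reader(lines):
        if not row:
            continue  # skip blank lines
        t, _x, y, _z = row
        timestamps.append(int(t))
        ys.append(float(y))
    return timestamps, ys


def duration_seconds(timestamps: List[int]) -> float:
    """Recording length, e.g. to confirm an activity lasted about 2 minutes."""
    return (timestamps[-1] - timestamps[0]) / 1000.0


if __name__ == "__main__":
    sample = io.StringIO("0,0.1,9.7,0.3\n50,0.2,9.9,0.1\n100,0.0,9.6,0.4\n")
    ts, y = load_y_axis(sample)
    print(y)                     # [9.7, 9.9, 9.6]
    print(duration_seconds(ts))  # 0.1
```

Keeping the timestamps alongside the y values makes it easy to check that each recording really covers the intended 2 minutes before it is partitioned.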

Data Analysis

Only the y-axis accelerometer data were used to train and test the system. The data for each participant and activity were partitioned into 6 chunks of 400-500 samples each, and we checked that each chunk contained enough samples to capture complete cycles of the activity. For each participant, one chunk per activity was used to train the system, and the remaining chunks were used to test it. Cross-validation was performed to validate the accuracy of the system.
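The partitioning and train/test split described above can be sketched as follows. This is a minimal illustration, assuming that each 2-minute recording is cut into six contiguous, equal-length chunks and that cross-validation rotates which single chunk is used for training. The paper does not specify the exact chunk boundaries or fold scheme, and the helpers `partition` and `rotate_folds` are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple

N_CHUNKS = 6  # six partitions per activity recording, as in the paper


def partition(signal: Sequence[float], n_chunks: int = N_CHUNKS) -> List[List[float]]:
    """Split a 1-D signal into n_chunks contiguous chunks of equal length.

    Trailing samples that do not fill a whole chunk are dropped (an assumption).
    """
    size = len(signal) // n_chunks
    if size == 0:
        raise ValueError("signal too short for the requested number of chunks")
    return [list(signal[i * size:(i + 1) * size]) for i in range(n_chunks)]


def rotate_folds(chunks: List[List[float]]) -> Iterator[Tuple[List[float], List[List[float]]]]:
    """Yield (train_chunk, test_chunks): one chunk trains, the others test.

    Rotating the training chunk over all positions is one possible
    cross-validation scheme; the paper does not state which one was used.
    """
    for i, train in enumerate(chunks):
        tests = chunks[:i] + chunks[i + 1:]
        yield train, tests


if __name__ == "__main__":
    y = [float(v) for v in range(2700)]  # stand-in for ~2 min of y-axis samples
    chunks = partition(y)
    print([len(c) for c in chunks])      # [450, 450, 450, 450, 450, 450]
    for train, tests in rotate_folds(chunks):
        assert len(tests) == N_CHUNKS - 1
```

Under the equal-length assumption each chunk spans 20 s, so 400-500 samples per chunk would correspond to an effective sampling rate of roughly 20-25 Hz; the paper does not state the sampling rate actually used on the Nexus 5.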

Experiment

The collected data were processed in MATLAB (release 2013a), which was also used to implement the system. The activity signals were preprocessed before the experiment, and the processed data were then used to train and test the system.
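The paper does not describe its preprocessing steps or its classification model. Purely to illustrate training on one chunk per activity and testing on others, the sketch below smooths each chunk with a moving average (a hypothetical preprocessing step) and classifies it with a nearest-centroid rule over three simple features: mean, standard deviation, and mean absolute difference between consecutive samples. This swapped-in classifier, its feature set, and the window width are assumptions, not the authors' MATLAB method.

```python
import math
import statistics
from typing import Dict, List, Sequence


def moving_average(signal: Sequence[float], width: int = 5) -> List[float]:
    """Hypothetical preprocessing step: centred moving-average smoothing.

    Windows are truncated at the edges, so the output length equals the input length.
    """
    half = width // 2
    smoothed = []
    for i in range(len(signal)):
        window = signal[max(0, i - half):i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


def features(chunk: Sequence[float]) -> List[float]:
    """Three illustrative features of a y-axis chunk.

    mean: reflects phone orientation relative to gravity (pocket vs. table)
    population standard deviation: overall movement intensity
    mean absolute first difference: how quickly the signal changes
    """
    diffs = [abs(b - a) for a, b in zip(chunk, chunk[1:])]
    return [statistics.fmean(chunk), statistics.pstdev(chunk), statistics.fmean(diffs)]


class NearestCentroid:
    """Swapped-in illustrative classifier; not the model used in the paper."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, training: Dict[str, List[Sequence[float]]]) -> "NearestCentroid":
        """training maps each activity label to its training chunk(s)."""
        for label, chunks in training.items():
            vectors = [features(moving_average(c)) for c in chunks]
            # Average feature vectors column-wise (a single chunk is its own centroid).
            self.centroids[label] = [statistics.fmean(col) for col in zip(*vectors)]
        return self

    def predict(self, chunk: Sequence[float]) -> str:
        """Return the label whose centroid is closest in Euclidean distance."""
        f = features(moving_average(chunk))
        return min(self.centroids, key=lambda label: math.dist(f, self.centroids[label]))


if __name__ == "__main__":
    # Synthetic chunks for illustration only: a flat reading (phone on table)
    # and a periodic signal around gravity (walking-like motion).
    table = [0.05] * 100
    walk = [9.8 + 3.0 * math.sin(2 * math.pi * k / 20) for k in range(100)]
    model = NearestCentroid().fit({"table": [table], "walking": [walk]})
    print(model.predict([0.04] * 100))  # table
    print(model.predict([9.8 + 2.5 * math.sin(2 * math.pi * k / 20) for k in range(100)]))  # walking
```

The mean feature matters here: sitting and phone placed on table are both nearly motionless, so a classifier that only looked at signal variability could not separate them, whereas the constant gravity component on the y-axis differs with the phone's orientation. The features are on different scales and are not normalized, which a real implementation would likely need to address.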

RESULTS

We performed a quantitative evaluation to assess the recognition accuracy of the system. Table 1 presents the confusion matrix for all activities. With 5 participants and each activity divided into 6 partitions, there were 30 instances of each activity. The model parameters and signal sample size were varied to check the robustness of the system. In Table 1, the first column of each row gives the actual (tested) activity, and the remaining columns give the recognized activity. For example, the first row shows that of the 30 walking instances, 28 were correctly recognized as walking, 1 was recognized as walking downstairs, and 1 was recognized as walking upstairs.

Table 1. Confusion matrix of the recognition results (rows: actual activity; columns: recognized activity)

| Actual activity | Walking | Downstairs | Upstairs | Running | Sitting | Phone placed on table |
|---|---|---|---|---|---|---|
| Walking | 28 | 1 | 1 | 0 | 0 | 0 |
| Downstairs | 0 | 23 | 7 | 0 | 0 | 0 |
| Upstairs | 0 | 6 | 24 | 0 | 0 | 0 |
| Running | 0 | 0 | 0 | 30 | 0 | 0 |
| Sitting | 0 | 0 | 0 | 0 | 30 | 0 |
| Phone placed on table | 0 | 0 | 0 | 0 | 0 | 30 |

DISCUSSION

We have presented a smartphone-based human activity recognition system that uses the phone's built-in accelerometer to identify the user's current activity. Of the 6 activities, 3 (running, sitting, and phone placed on table) were recognized with 100% accuracy. Walking was recognized with 93.33% accuracy (28 of 30 instances). Walking downstairs and walking upstairs were recognized with 76.67% and 80% accuracy, respectively, which at first suggests that the system cannot reliably recognize these two activities. However, a closer examination of Table 1 shows that every misclassified downstairs or upstairs instance was recognized as the other of these two activities. When the two are grouped as a single activity (stairs), the system recognizes it with 100% accuracy.
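The per-activity accuracies discussed above are the diagonal entries of Table 1 divided by their row totals (per-class recall). A minimal Python sketch that recomputes them, and that merges walking downstairs and walking upstairs into a single stairs class, is shown below; the helper names are illustrative and not part of the authors' MATLAB implementation.

```python
from typing import List

# Labels in the row/column order of Table 1.
LABELS = ["Walking", "Downstairs", "Upstairs", "Running", "Sitting", "Phone placed on table"]

# Table 1: rows are actual activities, columns are recognized activities.
CONFUSION = [
    [28, 1, 1, 0, 0, 0],
    [0, 23, 7, 0, 0, 0],
    [0, 6, 24, 0, 0, 0],
    [0, 0, 0, 30, 0, 0],
    [0, 0, 0, 0, 30, 0],
    [0, 0, 0, 0, 0, 30],
]


def per_class_accuracy(cm: List[List[int]]) -> List[float]:
    """Diagonal count divided by the row total, i.e. recall per actual class."""
    return [row[i] / sum(row) for i, row in enumerate(cm)]


def merge_classes(cm: List[List[int]], group: List[int]) -> List[List[int]]:
    """Collapse the classes at the indices in `group` into a single class.

    The merged class keeps the position of group[0]; its row and column
    are the sums of the grouped rows and columns.
    """
    keep = [i for i in range(len(cm)) if i not in group[1:]]

    def target(j: int) -> int:
        return group[0] if j in group else j

    merged = {r: {c: 0 for c in keep} for r in keep}
    for r, row in enumerate(cm):
        for c, count in enumerate(row):
            merged[target(r)][target(c)] += count
    return [[merged[r][c] for c in keep] for r in keep]


if __name__ == "__main__":
    for label, acc in zip(LABELS, per_class_accuracy(CONFUSION)):
        print(f"{label}: {acc:.2%}")  # e.g. Walking: 93.33%, Downstairs: 76.67%
    stairs = merge_classes(CONFUSION, [1, 2])
    print(f"Stairs (merged): {per_class_accuracy(stairs)[1]:.2%}")  # 100.00%
```

Merging sums the grouped rows and columns, so the 7 downstairs-as-upstairs and 6 upstairs-as-downstairs confusions become correct stairs predictions (60 of 60). The two walking instances recognized as stairs still count against walking, not against stairs.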

The presented system classifies simple activities with high accuracy. The results suggest that one-axis acceleration alone is sufficient to classify simple full body motor activities. Using a single axis reduces the computational and memory complexity of the system, so real-time classification should be faster with this lightweight approach.
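As a rough illustration of the memory argument, assume each window holds $N$ samples per axis and each sample is stored in $b$ bytes (both are assumptions; the paper reports only 400-500 samples per chunk). A buffer for $d$ axes then needs

```latex
M_d = d \, N \, b, \qquad
\frac{M_1}{M_3} = \frac{1 \cdot N \, b}{3 \cdot N \, b} = \frac{1}{3}
```

With $N = 500$ and 4-byte floating-point samples, this is 2,000 bytes for the y-axis alone versus 6,000 bytes for all three axes; any feature computation whose cost grows linearly with the number of input values shrinks by the same factor of three.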

We developed this simple activity recognition system as an initial step toward a complex activity recognition system, since complex activities are often composed of simple activities such as those described in this paper. The recognized simple activities will serve as input to that future system [13].

CONCLUSION

Toward our ultimate goal of a complex activity recognition system, we have worked on indoor localization, a smartphone data collection tool, and simple full body motor activity recognition. We have also reported data collection for complex activity recognition, and we have begun implementing the proposed system on the Android platform, which will enable real-time activity classification. Because the proposed system classifies simple activities with high accuracy, we expect it to play a key role in recognizing complex activities.

REFERENCES

[1] D. Fox, "Location-Based Activity Recognition," Advances in Artificial Intelligence 2007, vol. 4667, p. 51, 2007, DOI: 10.1007/978-3-540-74565

[2] M. Satyanarayanan, "Pervasive computing: vision and challenges," IEEE Personal Communications, vol. 8, no. 4, pp. 10–17, Aug. 2001.

[3] X. Su, H. Tong, and P. Ji, “Activity recognition with smartphone sensors,” Tsinghua Sci. Technol., vol. 19, no. 3, pp. 235–249, Jun. 2014.

[4] O. D. Lara and M. A. Labrador, "A Survey on Human Activity Recognition using Wearable Sensors," IEEE Communications Surveys & Tutorials, vol. 15, no. 3, pp. 1192–1209, 2013.

[5] M. Levasseur, J. Desrosiers, and D. St-Cyr Tribble, "Do quality of life, participation and environment of older adults differ according to level of activity?," Health and Quality of Life Outcomes, vol. 6, no. 1, p. 30, 2008.

[6] Molzahn A, Skevington SM, Kalfoss MSM, K: The importance of facets of quality of life to older adults: an international investigation. Quality of Life Research 2010; 19: 293-298.

[7] B. R. Bonder and V. D. Bello-Haas, Functional Performance in Older Adults, vol. 3. F.A. Davis Company, 2009.

[8] IDC Worldwide Mobile Phone Tracker, Jan 27, 2014.

[9] X. Su, H. Tong, and P. Ji, “Activity recognition with smartphone sensors,” Tsinghua Sci. Technol., vol. 19, no. 3, pp. 235–249, Jun. 2014.

[10] L. Bao and S. S. Intille, "Activity Recognition from User-Annotated Acceleration Data," Pervasive Computing, LNCS 3001, pp. 1–17, 2004.

[11] S. A. Antos, M. V. Albert, and K. P. Kording, "Hand, belt, pocket or bag: Practical activity tracking with mobile phones," J. Neurosci. Methods, Oct. 2013.

[12] F. Takens, “Detecting Strange Attractors in Turbulence,” Proc. Dynamical Systems and Turbulence, pp. 366-381, 1980.

[13] M. O. Gani, S. I. Ahamed, S. A. Davis, and R. O. Smith, "An Approach to Complex Functional Activity Recognition using Smartphone Technologies," RESNA 2014 Annual Conference, June 11–15, 2014.