Rochelle J. Mendonca¹, Laryn M. O'Donnell², & Roger O. Smith³

¹ Columbia University, New York, NY, 10032

² Rehabilitation Research Design and Disability (R2D2) Center, University of Wisconsin-Milwaukee, Milwaukee, WI, 53211

ABSTRACT

The MED-AUDIT (Medical Equipment Device – Accessibility and Universal Design Information Tool) prototype has begun to demonstrate the potential of this measurement approach for assessing the accessibility of medical devices. This study provides a preliminary investigation of the feasibility of the MED-AUDIT. It was hypothesized that the MED-AUDIT would be able to discriminate between the accessibility of four different models of blood pressure monitors: one manual inflate arm monitor, one automatic arm monitor, and two automatic wrist monitors. Results showed that it took about 45-60 minutes to complete the entire evaluation of a blood pressure monitor, including unpackaging the device, reading instructions, interacting with the device, and rating the MED-AUDIT taxonomy. The results also showed that the MED-AUDIT was able to distinguish between different levels of tasks required to use a device and accessibility features included in the device. This demonstrates that the methodology is feasible and will play an important role in further testing and development of the MED-AUDIT.

INTRODUCTION

In the past few decades there has been a dramatic increase in the survival of individuals with severe disabilities and an increased demand for medical devices to be used in patients' homes, so that the patient rather than the medical professional is the device user [1, 2, 3, 4, 5]. In addition, this increased survival rate has led to people with disabilities and older adults needing and seeking medical care in larger numbers. However, tremendous disparities in health care access prevent these populations from being treated in a timely manner, primarily due to inaccessible medical technologies [6,7,8]. For example, to use a blood pressure monitor, individuals must be able to use their upper extremities to put the cuff on themselves and must be able to see or hear the display; to get a mammogram, individuals must be able to stand; and to use an examination table, they must have adequate strength to climb and balance on it. This translates into hundreds of millions of dollars spent by clinical professionals, caregivers, and lay people to purchase medical devices that may not work for a large majority of the patients they are intended for, thereby creating a large disparity in healthcare access [9,10,11].

Three factors exacerbate the problem and highlight the push towards accessible medical devices and informed consumer choice in medical device purchase and use: (1) the rapid aging of America, (2) the increase in the number of people with disabilities (PWD) living longer due to advances in medicine, and (3) the increase in home health equipment purchased and used directly by patients [12,13,14]. However, there is no assessment that measures the accessibility of medical devices, nor any method to provide information about accessibility to consumers of medical devices. The MED-AUDIT (Medical Equipment Device-Accessibility and Universal Design Information Tool) is being developed to meet this need. The MED-AUDIT is a software-based assessment that performs a task analysis, assesses design features, addresses information for specific impairments, and calculates an integrated accessibility score for medical devices. The questions on task analysis and design features comprise the taxonomy of the MED-AUDIT; these are the components rated to evaluate medical device accessibility. The MED-AUDIT also includes two background matrices, one representing relationships between impairments and device features and one representing relationships between device features and tasks, which together create a database map used to produce accessibility scores for thirteen different impairments.
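The way the two background matrices combine with the ratings is not specified here, so the following is only an illustrative sketch of one possible mapping. The impairment, feature, and task names, the weights, and the `impairment_scores` function are all hypothetical and are not taken from the MED-AUDIT software. The sketch assumes that a feature counts toward an impairment's score only when it is relevant to that impairment and supports a task the device requires.

```python
# Hypothetical sketch: names, weights, and the scoring rule are assumptions,
# not the MED-AUDIT's actual matrices or algorithm.
IMPAIRMENTS = ["low vision", "limited grip strength"]
FEATURES = ["large display", "audio output", "one-handed cuff"]
TASKS = ["read display", "apply cuff"]

# Impairment x feature matrix: relevance of each feature to each impairment.
IMPAIRMENT_FEATURE = [
    [1.0, 1.0, 0.0],  # low vision
    [0.0, 0.0, 1.0],  # limited grip strength
]

# Feature x task matrix: which task each feature supports.
FEATURE_TASK = [
    [1.0, 0.0],  # large display -> read display
    [1.0, 0.0],  # audio output -> read display
    [0.0, 1.0],  # one-handed cuff -> apply cuff
]


def impairment_scores(feature_ratings, task_ratings):
    """Return a 0-100 score per impairment from 0-2 feature and task ratings.

    Returns None for an impairment when no relevant feature supports a
    required task, because there is nothing to score against.
    """
    scores = {}
    for i, impairment in enumerate(IMPAIRMENTS):
        earned = 0.0
        possible = 0.0
        for f, f_rating in enumerate(feature_ratings):
            for t, t_rating in enumerate(task_ratings):
                # Weight grows with feature relevance and with task demand.
                weight = IMPAIRMENT_FEATURE[i][f] * FEATURE_TASK[f][t] * t_rating
                earned += weight * f_rating
                possible += weight * 2  # 2 is the top of the rating scale
        scores[impairment] = 100.0 * earned / possible if possible else None
    return scores


if __name__ == "__main__":
    print(impairment_scores(feature_ratings=[2, 2, 1], task_ratings=[2, 2]))
```

Under these assumed weights, a device that fully includes both display features but only somewhat includes the one-handed cuff would score higher for low vision than for limited grip strength.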

Past studies have reported on aspects of the development, usability and psychometric properties of both versions of the MED-AUDIT. Preliminary usability, reliability and validity have been satisfactorily demonstrated for both versions [15]; however, the ability of the MED-AUDIT to distinguish between the accessibility of different medical devices has not been reported. Therefore, the research question explored in this paper is, "Does the MED-AUDIT taxonomy distinguish between the accessibility of four different models of blood pressure monitors?"

METHODS

This feasibility study was designed to determine if the MED-AUDIT taxonomy distinguishes between the accessibility of four different models of blood pressure monitors, when scored by a trained rater (See Figure 1). The four monitors rated in this study were a manual inflate blood pressure monitor, an automatic arm monitor, and two automatic wrist monitors. The monitors were chosen to represent the range of blood pressure monitoring devices available as well as to represent different levels of accessibility.

All four monitors were scored on the same version of the MED-AUDIT, which includes about 1150 distinct questions arranged in a hierarchical outline with five or six levels. The outline structure provides the branching options from level to level. The branching enables parts of the taxonomy that are irrelevant for a certain device to be bypassed, making questions targeted and requiring less expertise (see Figure 2). The taxonomy includes 193 questions related to tasks required to use the medical device, which are scored on a three-point scale (0-does not require, 1-somewhat requires, and 2-requires). It also includes 957 questions related to features included in the device, which are scored on a three-point scale (0-does not include, 1-somewhat includes, and 2-includes).
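As a rough illustration of the branching and the three-point scale described above, the sketch below walks a small nested outline and converts the collected ratings to a percentage of the maximum possible score. The node structure, the question identifiers, and the rule that a parent rated 0 causes its sub-questions to be bypassed and excluded from the percentage are assumptions made for this example; the MED-AUDIT software may handle bypassed questions differently.

```python
MAX_RATING = 2  # top of the 0-2 scale used for both tasks and features


def collect_ratings(node, answers):
    """Walk a nested outline and return the ratings that were actually asked.

    Assumption: when a question is rated 0, its sub-questions are bypassed
    and do not contribute to the score.
    """
    rating = answers.get(node["id"], 0)
    if rating not in (0, 1, 2):
        raise ValueError(f"rating for {node['id']} must be 0, 1, or 2")
    ratings = [rating]
    if rating > 0:
        for child in node.get("children", []):
            ratings.extend(collect_ratings(child, answers))
    return ratings


def percent_score(ratings):
    """Percentage of the maximum possible score for a list of 0-2 ratings."""
    if not ratings:
        return None
    return 100.0 * sum(ratings) / (MAX_RATING * len(ratings))


if __name__ == "__main__":
    # Hypothetical two-level fragment of a feature outline.
    controls = {"id": "controls", "children": [{"id": "buttons"}, {"id": "dials"}]}
    answers = {"controls": 2, "buttons": 2, "dials": 0}
    print(percent_score(collect_ratings(controls, answers)))
```

Excluding bypassed questions keeps a device from being penalized for features that do not apply to it; counting them as zeros instead would be an equally plausible design and would lower the percentages.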

The rater unpackaged and interacted with each device prior to scoring it. Each device was scored independently before moving on to the next device. On completion of scoring, the data from the software were exported to an Excel sheet, which provided a raw score and a percentage score for each question. Data were compiled as tables and graphs. These scores were compared across the four devices to determine if the MED-AUDIT distinguished between the features and tasks of the devices.

RESULTS

The rater took between 45 minutes and an hour to score each of the four devices, including unpackaging the device, reviewing the instructions, interacting with the device and rating the MED-AUDIT for the device. The rater reported no difficulties in using the MED-AUDIT.

The results of the comparison of the four monitors showed that the MED-AUDIT is capable of discriminating between devices. Tasks required were 62% for the manual inflate, 79% for the automatic arm monitor, 75% for the automatic wrist monitor and 66% for the deluxe automatic wrist monitor. Accessibility features included in the device were 63% for the manual inflate monitor, 93% for the automatic arm monitor, 89% for the automatic wrist monitor and 85% for the deluxe automatic wrist monitor.
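The size of the differences reported above can be made concrete with a short calculation. The sketch below takes the percentages directly from this section, computes the spread between the highest and lowest scoring monitors for each part of the taxonomy, and ranks the monitors. The dictionary and function names are illustrative only.

```python
# Percentages as reported in this section; names are illustrative.
TASKS_REQUIRED = {
    "manual inflate": 62,
    "automatic arm": 79,
    "automatic wrist": 75,
    "deluxe automatic wrist": 66,
}
FEATURES_INCLUDED = {
    "manual inflate": 63,
    "automatic arm": 93,
    "automatic wrist": 89,
    "deluxe automatic wrist": 85,
}


def spread(scores):
    """Difference in percentage points between the highest and lowest score."""
    return max(scores.values()) - min(scores.values())


def ranked(scores):
    """Device names ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    print("Tasks required spread:", spread(TASKS_REQUIRED))        # 17 points
    print("Features included spread:", spread(FEATURES_INCLUDED))  # 30 points
    print("Features ranking:", ranked(FEATURES_INCLUDED))
```

The spread is 17 percentage points for tasks required and 30 percentage points for accessibility features included, with the manual inflate monitor lowest on both.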

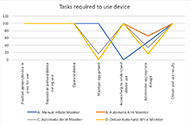

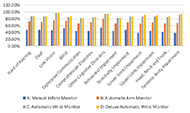

We explored the data further to review differences between scores on specific questions in both sections of the taxonomy. The results demonstrated that the taxonomy was able to distinguish between the monitors across different questions. For example, as seen in Figure 3, maintaining equipment was rated at 100% for the manual inflate monitor, 0% for both the automatic arm monitor and the deluxe wrist monitor, and 16% for the automatic wrist monitor. Similarly, for the features included in the device (Figure 4), controls such as buttons scored 100% for the automatic wrist monitor and automatic arm monitor, 62% for the deluxe wrist monitor, and 0% for the manual monitor. These results match the accessibility features of the devices and highlight that the MED-AUDIT can distinguish between different levels of accessibility for blood pressure monitors.

Last, we examined the differences in scores across the thirteen impairment types. Figure 5 shows the differences between the four monitors across the 13 impairment types. The manual inflate monitor scored the lowest across all impairments, followed by the automatic arm monitor. The automatic wrist and deluxe automatic wrist monitors scored very similarly to each other and higher than the arm monitors.

DISCUSSION

Results from the comparison of the four monitors establish preliminary feasibility of the MED-AUDIT and demonstrate that it can distinguish between the accessibility of different models of a medical device, both at the individual feature level and at the overall task and device feature level. The MED-AUDIT was also able to produce scores for each device for thirteen different impairment types. The scores across the devices also intuitively matched the perceived accessibility of the different types of blood pressure monitors, with the manual inflate monitor scoring the lowest in all categories, and the arm monitors scoring lower than the wrist monitors. The entire process of rating a device using the MED-AUDIT also took an hour or less, which is reasonable for rating a medical device.

This study suggests some future steps for continuing to establish the feasibility of the MED-AUDIT: 1) test different types of medical devices that cover the spectrum of diagnostic, monitoring, positioning, and maintenance devices, and 2) test the usefulness of the scores on actual healthcare device use and purchases by people with disabilities and older adults, who are consumers of medical devices.

Establishing the feasibility, reliability and validity of the MED-AUDIT scores will provide insights into how to provide this information to designers as well as consumers of medical devices and may have labeling implications for medical devices. Providing consumers with accessibility information will improve medical device design and healthcare for consumers with disabilities.

REFERENCES

- Gans, B. M., Mann, N. R., & Becker, B. E. (1993). Delivery of primary care to the physically challenged. Archives of Physical Medicine and Rehabilitation, 74(supplement), s15-s19.

- Kaye, H. S., Laplante, M. P., Carlson, D., & Wegner, B. L. (1996). Trends in disability rates in the United States, 1970-1994. Disability Statistics Abstract, 17,1-6.

- Wilcox, S. B. (2003). Applying the principles of universal design to medical devices. MDDIOnline. Retrieved October 11, 2004, from https://www.mddionline.com/news/applying-universal-design-medical-devices.

- Story, M.F., Winters, J.M., Kailes, J.I., Premo, B., Winters, J.M. (2003). Understanding Barriers to Healthcare Caused by Inaccessible Medical Instrumentation. Proc. RESNA 2003 Annual Conf, June 18, 2003.

- Winters, J.M., Story, M.F., Kailes, J.I., Premo, B., Danturthi, S., Winters, J. (2004). Accessibility of Medical Instrumentation for Persons with Disabilities: A National Survey. Midwest Nursing Research Society, St. Louis, MO, February 27-March 1, 2004.

- Cheng, E., Myers, L., Wolf, S., Shatin, D., Cui, X., Ellison, G., Belin, T., & Vickrey, B. (2001). Mobility impairments and use of preventive services in women with multiple sclerosis: observational studies. British Medical Journal, 323(7319), 968–969. https://doi.org/10.1136/bmj.323.7319.968

- Schopp, L., Sanford, T., Hagglund, K., Gay, J., & Coatney, M. (2002). Removing service barriers for women with physical disabilities: Promoting accessibility in the gynecologic care setting. Journal of Midwifery & Women's Health, 47(2), 74–79. https://doi.org/10.1016/S1526-9523(02)00216-7

- Veltman, A., Stewart, D., Tardif, G., & Branigan, M. (2001). Perceptions of primary healthcare services among people with physical disabilities. Part 1: access issues. MedGenMed: Medscape General Medicine, 3(2), 18.

- Grabois, E., Nosek, M. A., & Rossi, D. (1999). Accessibility of primary care physicians' offices for people with disabilities. Archives of Family Medicine, 8(1), 44–51. https://doi.org/10.1001/archfami.8.1.44

- North Carolina Office on Disability and Health (NCODH). (2007). Removing barriers to health care: A guide for health professionals.

- Markwalder, A. (2005). Disability rights advocates. In A call to action: A guide for managed care plans serving Californians with disabilities.

- Gans, B. M., Mann, N. R., & Becker, B. E. (1993). Delivery of primary care to the physically challenged. Archives of Physical Medicine and Rehabilitation, 74(Suppl), s15–s19.

- Kraus, L., Lauer, E., Coleman, R., & Houtenville, A. (n.d.). 2017 Disability Statistics Annual Report.

- Sade, R. M. (2012). The graying of America: Challenges and Controversies. The Journal of Law, Medicine, & Ethics, 40(1), 6–9. https://doi.org/10.1111/j.1748-720X.2012.00639.x

- Mendonca, R., & Smith, R. O. (2007). Validity analysis: MED-AUDIT (Medical Equipment Device-Accessibility and Universal Design Information Tool). RESNA 30th International Conference on Technology and Disability: Research, Design, Practice and Policy.